Very wavy cloud #1375

Comments

|

What resolution are you using and at what distance are you? |

|

Resolution should be 1280x720 according to this wiki. The distance is about 13ft off the floor (so a bit further to the middle, and further still to the top of the image). The acceptable distances according to the specs at the intel site should be rated for up to 10m (32ft) so I'm hoping that's not the issue. |

|

The error scales with the square of the distance, so it will certainly be much bigger at 4 m. Can you tell us the size of the object you are looking at? We can then tell you whether this is what you should expect to see or whether something is wrong. Also, can you try turning off the lights in the room? That way we can test whether this is due to an SNR and texture issue. |
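
As a rough illustration of the distance-squared scaling mentioned above, here is a minimal sketch using the standard stereo depth error relation, RMS error = distance² × subpixel / (focal length in pixels × baseline). The baseline (55 mm), horizontal FOV (65°), and subpixel accuracy (0.08) are assumed placeholder values, not numbers from this thread; substitute the figures for your own camera and mode.

```python
import math

def focal_length_px(x_res: int, hfov_deg: float) -> float:
    """Focal length in pixels from horizontal resolution and horizontal FOV."""
    return 0.5 * x_res / math.tan(math.radians(hfov_deg) / 2.0)

def depth_rms_error_mm(distance_mm: float, baseline_mm: float,
                       x_res: int, hfov_deg: float,
                       subpixel: float = 0.08) -> float:
    """Standard stereo relation: error grows with the square of the distance."""
    f_px = focal_length_px(x_res, hfov_deg)
    return distance_mm ** 2 * subpixel / (f_px * baseline_mm)

# Assumed, illustrative camera parameters (not taken from the thread).
BASELINE_MM = 55.0
HFOV_DEG = 65.0
X_RES = 1280

err_1m = depth_rms_error_mm(1000.0, BASELINE_MM, X_RES, HFOV_DEG)
err_4m = depth_rms_error_mm(4000.0, BASELINE_MM, X_RES, HFOV_DEG)
print(f"1 m: {err_1m:.2f} mm, 4 m: {err_4m:.2f} mm, ratio: {err_4m / err_1m:.1f}")
```

Whatever parameters you plug in, going from 1 m to 4 m multiplies the expected error by 16, which is why moving the camera closer tends to help more than any filtering.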

|

"The error will be much bigger at 4m for sure." "Can you tell us what this object size is you are looking at?" "Also, can you try turning off lights in room?" |

|

Compared to the Kinect V2, the RealSense D series is not suitable for volume/dimension measurement applications. There are quite significant fluctuations in the depth data. See the following threads: Also see the Kinect v2 vs RealSense D415 comparison in the following thread: https://communities.intel.com/thread/121880 On the other hand, I believe it is much better for robotic and gesture applications, with its smaller size, lower power requirements, and better outdoor performance. |

|

Yeah, I'm coming to the same conclusion, but we just don't have any other good alternatives since Kinect v2s aren't being made anymore. I'm more confused as to why the wavy noise seems to be built in, though, because an averaging filter would remove any normal fluctuation. The waves seem to be consistently present in those spots for some reason. |
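
To illustrate the point about averaging, here is a small synthetic sketch (not real camera data): a flat surface is simulated with a fixed spatial ripple plus random per-frame noise. The ripple amplitude, noise level, and frame count are all made-up values chosen only to show the effect.

```python
import math
import random

WAVE_AMP_MM = 5.0      # assumed amplitude of the fixed (systematic) ripple
NOISE_SIGMA_MM = 5.0   # assumed per-frame random noise
N_PIXELS = 64
N_FRAMES = 100

def fixed_wave(i: int) -> float:
    """Systematic ripple that is identical in every frame."""
    return WAVE_AMP_MM * math.sin(2.0 * math.pi * i / 16.0)

def simulate_row(rng: random.Random) -> list:
    """Deviation from a flat plane for one row of one frame."""
    return [fixed_wave(i) + rng.gauss(0.0, NOISE_SIGMA_MM) for i in range(N_PIXELS)]

def temporal_average(frames: list) -> list:
    """Per-pixel mean over all frames."""
    return [sum(column) / len(frames) for column in zip(*frames)]

def rms(values: list) -> float:
    return math.sqrt(sum(v * v for v in values) / len(values))

rng = random.Random(0)
frames = [simulate_row(rng) for _ in range(N_FRAMES)]
averaged = temporal_average(frames)

# What is left of the random noise after averaging.
residual_noise = rms([a - fixed_wave(i) for i, a in enumerate(averaged)])
# Total deviation from the true flat plane after averaging.
residual_total = rms(averaged)
print(f"random part: {residual_noise:.2f} mm, total: {residual_total:.2f} mm")
```

The random part shrinks roughly with the square root of the number of frames, while the fixed ripple passes through the average untouched. That matches the behaviour described here: waves that survive temporal averaging are a systematic error, not ordinary noise.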

|

One option to consider is whether you can bring the cameras closer to the objects to be measured if accuracy limits are the issue. If FOV then becomes a problem, use two cameras to increase the FOV. The cameras don't interfere with each other, so they are well suited to being used in multiples. The SDK supports it. They can also be hardware-synced if you need that. |

|

Okay, so what I gather then is that the HQ presets are really just for close range scanning, whereas further ranges are best served with defaults? I wish I knew what all of these parameters did so I could figure out exactly what's happening rather than generally knowing when to use which presets. My next goal will be applying a generous bilateral filter (or a mix of some others) to see if I can't suppress some of those waves without losing the important edges. |
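
For reference, a bilateral filter weights each neighbour by both its spatial distance and its depth difference, so small ripples get smoothed while large depth steps are left alone. Below is a minimal 1D sketch in plain Python on a synthetic row; the radius and sigma values are arbitrary assumptions for illustration, and this is not the filter shipped with the RealSense SDK.

```python
import math

def bilateral_1d(depth, radius=3, sigma_space=2.0, sigma_range=10.0):
    """Edge-preserving smoothing of one row of depth values (mm)."""
    out = []
    for i, center in enumerate(depth):
        weight_sum = 0.0
        value_sum = 0.0
        lo = max(0, i - radius)
        hi = min(len(depth), i + radius + 1)
        for j in range(lo, hi):
            # Spatial weight: nearby pixels count more.
            w_space = math.exp(-((j - i) ** 2) / (2.0 * sigma_space ** 2))
            # Range weight: pixels at a very different depth count less.
            w_range = math.exp(-((depth[j] - center) ** 2) / (2.0 * sigma_range ** 2))
            weight_sum += w_space * w_range
            value_sum += w_space * w_range * depth[j]
        out.append(value_sum / weight_sum)
    return out

# Synthetic row: a floor at 1000 mm with a +/-2 mm ripple, then an object
# 100 mm closer with the same ripple (a sharp depth edge in the middle).
row = [1000.0 + 2.0 * (-1) ** i for i in range(10)] \
    + [900.0 + 2.0 * (-1) ** i for i in range(10)]
smoothed = bilateral_1d(row)

floor_ripple = max(abs(v - 1000.0) for v in smoothed[:10])
print(f"floor ripple after filtering: {floor_ripple:.2f} mm")
print(f"values either side of the edge: {smoothed[9]:.1f}, {smoothed[10]:.1f}")
```

The range sigma is the knob that matters: it should be larger than the ripple you want to remove and much smaller than the depth steps you want to keep.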

|

Looks good. Yes, a bilateral filter should help as well. I found it slightly more computationally intensive than the domain transform filter. Also, if you are measuring an object on a floor, and your usage can afford to add a mat underneath, you should try something textured instead of a white background. |

|

@michalstepien Ehmm. What are these couple of algorithms? |

|

@michalstepien I'm curious about the algorithms too, but making a wavy plane flat isn't too bad if you know you're looking at a plane. My concern is dealing with more general objects, where smoothing filters will degrade important characteristics. I've also noticed that increasing exposure time by a factor of 3 improves results (my scenes are mostly static, hence the use of an averaging filter as well). |

|

@agrunnet "If FOV then becomes a problem, then use two cameras to increase FOV." |

|

@jjoachim Yes, you can use multiple cameras on the same USB hub. If you go beyond two cameras, we recommend using a powered hub. We found that we can get stable streaming as long as you stay below about 2200 Mbps on USB3. Some have connected 8 cameras, but this was for depth only at lower resolution. In general, do the math using 16 bits per pixel for depth and 16 bits per pixel for RGB (it uses YUY2). |
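
The "do the math" step can be sketched as below. It counts raw pixel payload only (width × height × fps × 16 bits) and compares it against the roughly 2200 Mbps figure quoted above. Treating 1 Mbps as 10^6 bits per second and ignoring USB protocol overhead are simplifying assumptions, and the stream modes are just examples.

```python
USB3_BUDGET_MBPS = 2200.0  # approximate stable limit quoted in this thread

def stream_mbps(width: int, height: int, fps: int, bits_per_pixel: int = 16) -> float:
    """Raw payload bandwidth of one stream in megabits per second."""
    return width * height * fps * bits_per_pixel / 1e6

def total_mbps(streams) -> float:
    """Sum the bandwidth of several (width, height, fps) streams."""
    return sum(stream_mbps(w, h, fps) for (w, h, fps) in streams)

# Example: one camera streaming depth and color, both at 1280x720, 30 fps.
one_camera = [(1280, 720, 30), (1280, 720, 30)]
per_camera = total_mbps(one_camera)
max_cameras = int(USB3_BUDGET_MBPS // per_camera)
print(f"{per_camera:.1f} Mbps per camera, about {max_cameras} cameras fit")

# Example: depth only at a lower resolution and frame rate.
depth_only = total_mbps([(640, 480, 15)])
print(f"{depth_only:.1f} Mbps per camera, about {int(USB3_BUDGET_MBPS // depth_only)} cameras fit")
```

Dropping to depth only at a lower resolution and frame rate cuts the per-camera figure by more than an order of magnitude, which is consistent with the report of 8 cameras working in that configuration.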

|

@agrunnet Perhaps I should ask this in a separate question, but how do I ensure that all RealSense postprocessing is disabled? We're attempting to integrate simultaneous cameras, but the stream has slowed to a crawl. We have 3 cameras on 2 USB controllers. We were expecting 1 fast stream and 2 slow ones, but we got 3 very slow ones. We also noticed our CPU was being maxed out (400%). I loaded custom configs that I created with one of the RealSense tools, but I specifically disabled all of the postprocessing since we have our own engine that does the filtering we want. In spite of that, we get less than 1 fps on all cameras and maxed-out CPU usage. I disabled our filtering altogether and it didn't help very much; the pipe.wait_for_frame() call is what's taking so long. Our configs (hand copied, so some typos may be present): EDIT: We also noticed that the config creation tool does not save RGB camera information, which we had also set to 15 fps at max resolution. Instead, we had to specify these things in the config::enable_stream function for RS2_STREAM_COLOR. EDIT2: My apologies! It turns out the bottleneck is our point cloud construction. A size of 921600, or 1280*720, appears to be too big. I'll post multi-camera results when we get the performance issues worked out. |
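
One generic way to shrink a 921600-point cloud is to skip pixels with a stride before deprojecting them. The sketch below uses the standard pinhole model in plain Python; the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical placeholder values, and this is not necessarily how the poster resolved the bottleneck.

```python
def depth_to_points(depth_rows, fx, fy, cx, cy, stride=1):
    """Deproject a depth image (rows of metres) to XYZ points.

    Only every `stride`-th pixel in each direction is used, and pixels
    with no depth (value <= 0) are skipped.
    """
    points = []
    for v in range(0, len(depth_rows), stride):
        row = depth_rows[v]
        for u in range(0, len(row), stride):
            z = row[u]
            if z <= 0:
                continue
            # Pinhole model: pixel offset from the principal point, scaled by depth.
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

# Synthetic 1280x720 depth image of a flat wall 4 m away.
WIDTH, HEIGHT = 1280, 720
flat = [[4.0] * WIDTH for _ in range(HEIGHT)]

# Hypothetical intrinsics, for illustration only.
sparse = depth_to_points(flat, fx=900.0, fy=900.0, cx=640.0, cy=360.0, stride=4)
print(f"{WIDTH * HEIGHT} pixels -> {len(sparse)} points")
```

A stride of 4 reduces the point count by a factor of 16. If resolution matters for the measurement, filtering the depth image first and decimating afterwards keeps more of the detail than decimating raw frames.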

|

@jjoachim I am also very interested to hear about multi-camera results. Did you get any accuracy improvement when using 3 cameras? Are you merging point-clouds to one? |

|

What we've noticed is that having two D415s looking at the same target (with the laser on) improves depth measurements on uniform surfaces (and not only those), since the second laser enriches the IR images, so there are more correspondences. |

|

@vladsterz in what orientation did you place sensors? Or are they simply side-by-side? |

|

@bellekci 90 degrees apart. |

|

[Realsense Customer Engineering Team Comment] |

New link for this document: |

I wonder if there are pending updates related to the D455 model, for this important and extensive guide to optimizing depth perception. |

|

@michalstepien I also have the same problem with the RealSense D435.

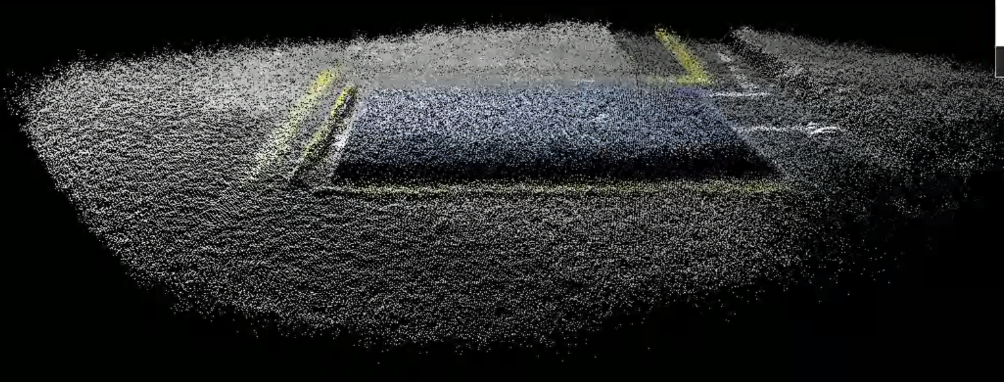

I captured a static scene consisting of a very flat floor with a flat scale on top (emphasis on flat) from a static camera. I averaged 10 frames together and used both the default and the high quality/high res JSON options. The camera is angled towards the ground at maybe 45 degrees. The result was a bit concerning; both the raw frames and the averaged frames look rather wavy for a flat scene. I was hoping the averaging would remove the waves (assumed to be noise), but it seems the waves are caused by something more consistent. Any thoughts as to what this might be, or how to mitigate it?

Expectation (via Kinectv2, slightly different scene):
