Inconsistent frame queue when pulling frames from recorded bag file #7067

Comments

|

Hi @anguyen216 Rather than calculating the end of the bag from its length and FPS, a more accurate way to stop the bag from looping back to the start after it reaches the final frame may be to add a repeat_playback=False condition: cfg.enable_device_from_file(filename, repeat_playback=False) Could you please test whether this command makes a positive difference to the number of frames extracted? |
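A minimal sketch of how the suggestion above might look in a full read loop, assuming pyrealsense2 is installed and that wait_for_frames raises RuntimeError once a non-looping bag has no more frames to deliver. The helper names `open_bag` and `for_each_frameset` and the 1000 ms timeout are illustrative choices, not part of the SDK.

```python
def open_bag(bag_path):
    """Start a pipeline that plays a recorded bag once, without looping."""
    import pyrealsense2 as rs  # imported lazily; only needed for real playback

    pipeline = rs.pipeline()
    cfg = rs.config()
    # repeat_playback=False stops playback at the final frame instead of
    # wrapping around to the start of the file.
    cfg.enable_device_from_file(bag_path, repeat_playback=False)
    pipeline.start(cfg)
    return pipeline


def for_each_frameset(pipeline, handle=None, timeout_ms=1000):
    """Call handle(frames) for every frameset and return how many were read.

    Assumption: at the end of a non-looping bag, wait_for_frames times out
    and raises RuntimeError, which serves as the stop condition here.
    """
    count = 0
    while True:
        try:
            frames = pipeline.wait_for_frames(timeout_ms)
        except RuntimeError:
            break  # no frameset arrived in time: treat as end of file
        if handle is not None:
            handle(frames)
        count += 1
    return count
```

Typical use would be `pipeline = open_bag("recording.bag")` (a hypothetical file name), then `for_each_frameset(pipeline, handle)` inside a try block, with `pipeline.stop()` in the finally clause. The loop no longer needs a frame count computed in advance.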

|

Hi @MartyG-RealSense. This works: I now get the same results as the viewer application displays, and more frames are extracted with the above command than with the methods mentioned above. However, even with this command, if alignment is done within the initial loop, the result is about 100 frames fewer than what I get by performing alignment after the pipeline is closed |

|

I thought very carefully about your latest question and re-read the case from the start. As an alternative to doing the alignment and extraction in Python, you could use the SDK's rs-convert bag conversion tool to convert the bag to a ply point cloud file. https://github.com/IntelRealSense/librealsense/tree/master/tools/convert A member of the RealSense support team stated in another case that if a bag contains a depth and color stream, then the ply file extracted by rs-convert will be aligned. https://support.intelrealsense.com/hc/en-us/community/posts/360033198613/comments/360008034794 The Windows version of the RealSense SDK includes a pre-built rs-convert executable that can be launched from the Windows command prompt. Further details about this can be found in the link below: |
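For anyone who wants to drive rs-convert from Python rather than typing the command by hand, here is a minimal sketch that only builds the argument list. It assumes that -i selects the input bag, -l the PLY output prefix and -p the PNG output prefix, as I recall from the tool's README; please check the tool's own help output for your SDK version. The function name and the `exe` parameter are hypothetical.

```python
def rs_convert_command(bag_path, ply_prefix=None, png_prefix=None, exe="rs-convert"):
    """Build the argument list for one rs-convert run.

    Assumption: -i = input bag, -l = PLY output prefix, -p = PNG output
    prefix. Verify these flags against the rs-convert help text.
    """
    if ply_prefix is None and png_prefix is None:
        raise ValueError("choose at least one output format")
    cmd = [exe, "-i", bag_path]
    if ply_prefix is not None:
        cmd += ["-l", ply_prefix]  # one PLY file per frame, named from this prefix
    if png_prefix is not None:
        cmd += ["-p", png_prefix]  # one PNG file per frame and stream
    return cmd
```

The returned list can be passed to `subprocess.run(cmd, check=True)`. Building a list instead of a single string avoids quoting problems with paths that contain spaces.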

|

@MartyG-RealSense Thank you for looking carefully into this case and for the useful suggestions. I'll definitely check out the rs-convert conversion tool. For the most part, though, the reason I'm using Python is that I'm not familiar enough with C++ to edit the source code of the conversion tool for my application. But perhaps I can use it as a baseline to compare against and find out which approach in Python gives me the most consistent frame extraction. I'm currently working on this, so I will keep you updated and hopefully will have something more solid soon |

|

@MartyG-RealSense Update: I just tested the converter tool on one file. When converting the bag file into .PLY format, I got the following result summary: When converting the bag file into PNG format, I got the following result summary: Although the number of depth and infrared frames differs little between the two converters, the number of RGB frames is only slightly more than half the number of depth and infrared frames when using the PNG converter. Can you please explain these differences? |

|

rs-convert has had a tendency in the past to drop some frames when converting to PNG. In a discussion about this, a RealSense team member offered a link to a Python script that they said they had successfully tested in extracting all depth, IR and color frames from a bag as images. The Python script: https://gist.github.com/wngreene/835cda68ddd9c5416defce876a4d7dd9 |

|

@MartyG-RealSense Thank you for the resource. If it's not too much trouble for you, can you link the instructions on how to install the packages used in the scripts above, namely |

|

Apologies for the delay in responding further. Accessing cv_bridge on Windows or macOS does not sound easy to implement. The link below was the best information that I could find. |

|

@MartyG-RealSense thank you for looking into this! After my response yesterday, I took a few more hours to dig into this and found instructions for installing ROS on Windows (no luck with macOS). However, I was not able to use the script above to extract frames from the bag file, because the version I installed was not exactly the same as the one the script was written for; the function calls and outputs have changed quite a bit. In the interest of time, I decided to stick with the ROS installation instructions for Windows |

|

Great news that you found a satisfactory solution - thanks so much for the update, and good luck with your further work! |

|

Case closed due to no further comments received. |

|

I am working on trying to use rosbag files directly, as I am having some issues with pyrealsense2 and frame dropping. Here is what worked for me on macOS 10.15.7 Catalina using Python 3.8.5:

However, I did a bunch of debugging, so if anything is not working, perhaps there is a dependency I installed somewhere along the way that I didn't catch. Let me know if you have any issues using the above. |

|

Thanks very much @rajkundu for sharing your experience in this subject with the RealSense community! :) |

|

good |

Issue Description

I observed an issue with an inconsistent frame queue when pulling frames from a recorded bag file. I'm looping through all frames from a bag file; at each frame, I align the depth and color frames (either depth --> color or color --> depth), and then save both frames as image files for visual inspection. To stop the loop, I determine the number of frames using the following function that I wrote. The function simply extracts the length of the video (in seconds) and then multiplies the length by 30 (fps) to get the number of frames in the file
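The original function is not reproduced in this thread. As a minimal sketch of the calculation described above, assuming the duration comes either as a number of seconds or as a datetime.timedelta (which I believe is what the playback device's get_duration() returns in Python), it could look like this. The name `estimate_frame_count` is hypothetical.

```python
from datetime import timedelta


def estimate_frame_count(duration, fps=30):
    """Estimate the number of frames as recording length times frame rate.

    duration may be a number of seconds or a datetime.timedelta.
    This is only an estimate: it assumes every stream delivered exactly
    fps frames per second for the whole recording.
    """
    if isinstance(duration, timedelta):
        seconds = duration.total_seconds()
    else:
        seconds = float(duration)
    return int(round(seconds * fps))
```

For a 10 second recording at 30 fps this gives 300. If frames were dropped during recording, or a stream ran at a different rate, the real count will be lower, so a loop that runs for the estimated number of iterations can run past the end of the file.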

Function to align frames

I have two different approaches to aligning the frames and saving them. In the first, I align frames as I loop through the frame sets. In the second, I loop through the frame sets, keep each composite frame and store it, and then align the frames in another loop after closing the pipeline. Please see the code snippets below for more details. The strange thing is that, depending on my approach, the frame extraction results differ greatly from each other and from the playback in the Intel RealSense Viewer application. All approaches agree on the length of the video. However, neither reaches the end of the video (compared with what the viewer application displays)

First approach: align as looping through the frames

Second approach: loop through the bag file and save composite frames. Align frames after closing the pipeline
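The original snippets for the two approaches are not reproduced in this thread. The following is only a structural sketch of the difference between them, not the author's code. It takes the SDK-specific parts as hypothetical callables: `next_frameset` returns the next frameset or None at the end of the bag, `align` could be `rs.align(rs.stream.color).process`, `keep` could call `frames.keep()`, and `close` could call `pipeline.stop()`.

```python
def align_while_reading(next_frameset, align):
    """First approach: align each frameset as soon as it is read."""
    aligned = []
    while True:
        frames = next_frameset()
        if frames is None:  # end of the bag
            break
        aligned.append(align(frames))
    return aligned


def align_after_reading(next_frameset, align,
                        keep=lambda frames: None, close=lambda: None):
    """Second approach: store every frameset, close the source, then align."""
    stored = []
    while True:
        frames = next_frameset()
        if frames is None:  # end of the bag
            break
        keep(frames)  # ask the SDK to retain the frame data for later use
        stored.append(frames)
    close()  # e.g. stop the pipeline before the second pass
    return [align(frames) for frames in stored]
```

With a source that never drops anything, both functions return the same list. If they disagree on a real bag, that would suggest frames are being lost while the slower loop is busy, rather than a flaw in the loop logic itself.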

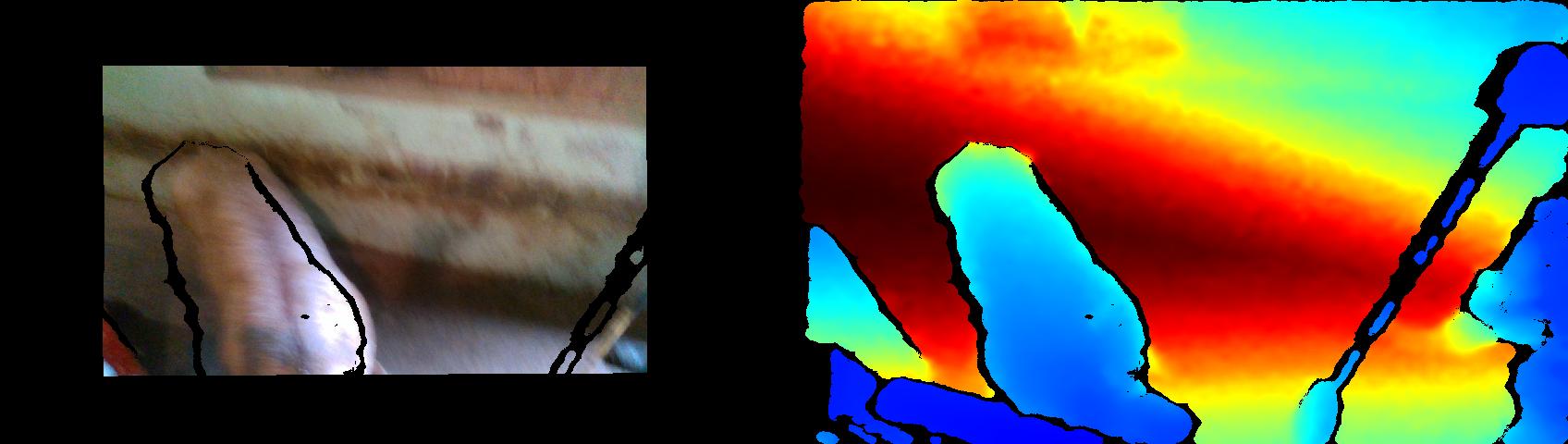

Result of first approach: last frame extracted and aligned

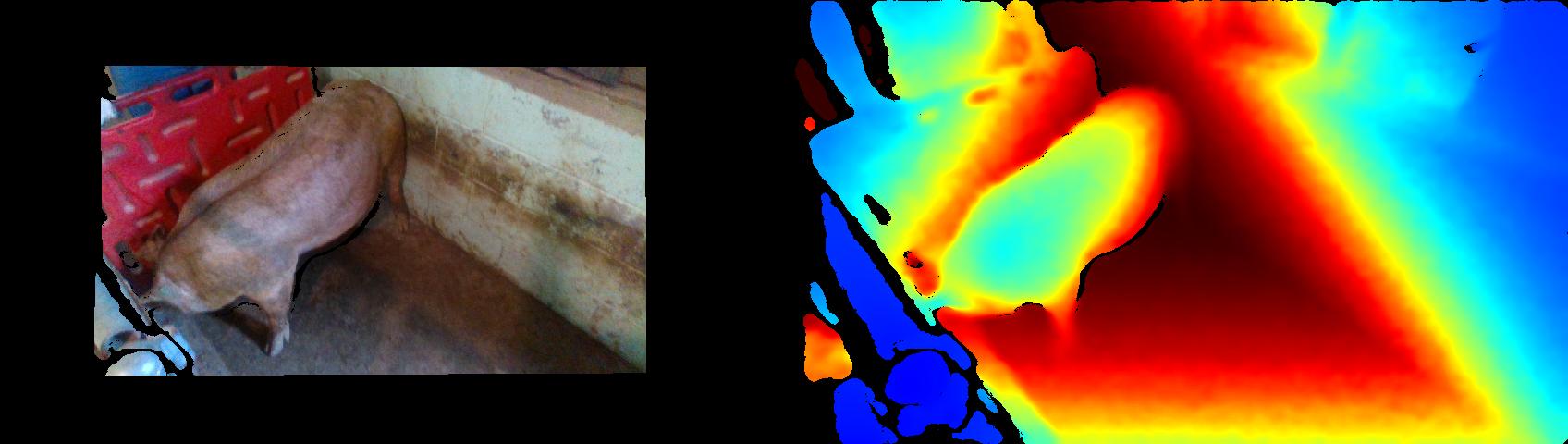

Result of second approach: last frame extracted and aligned

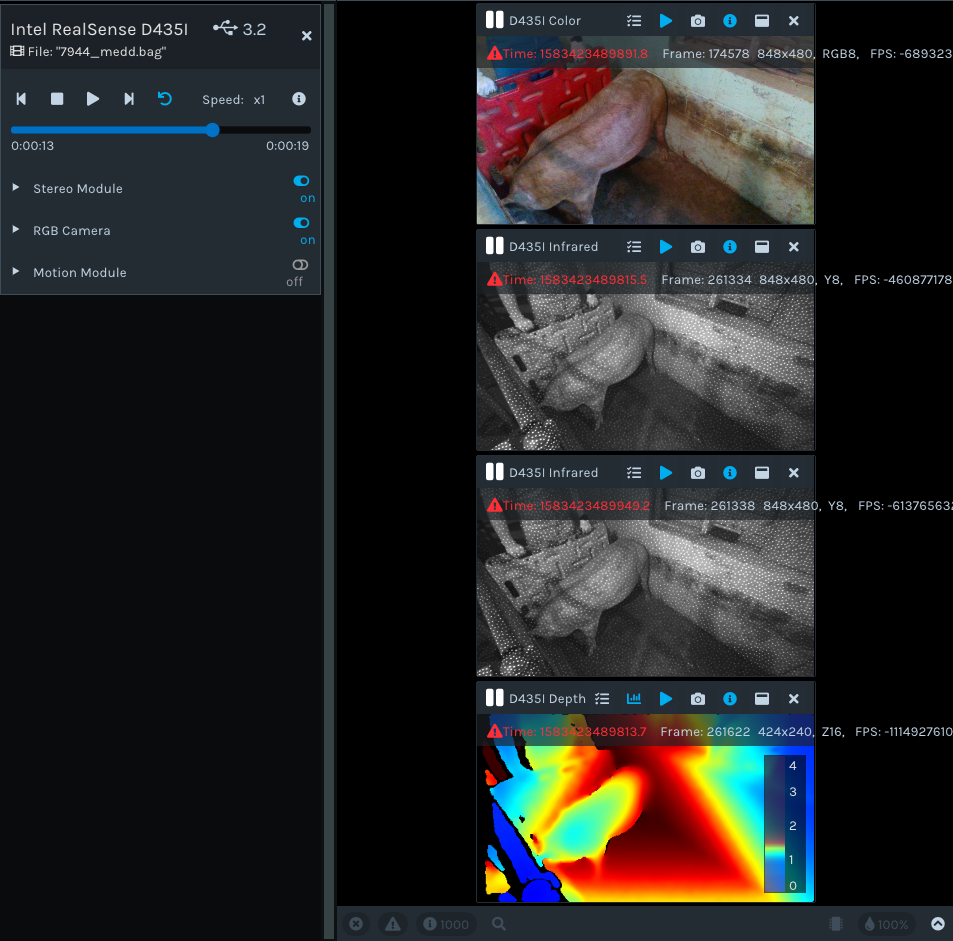

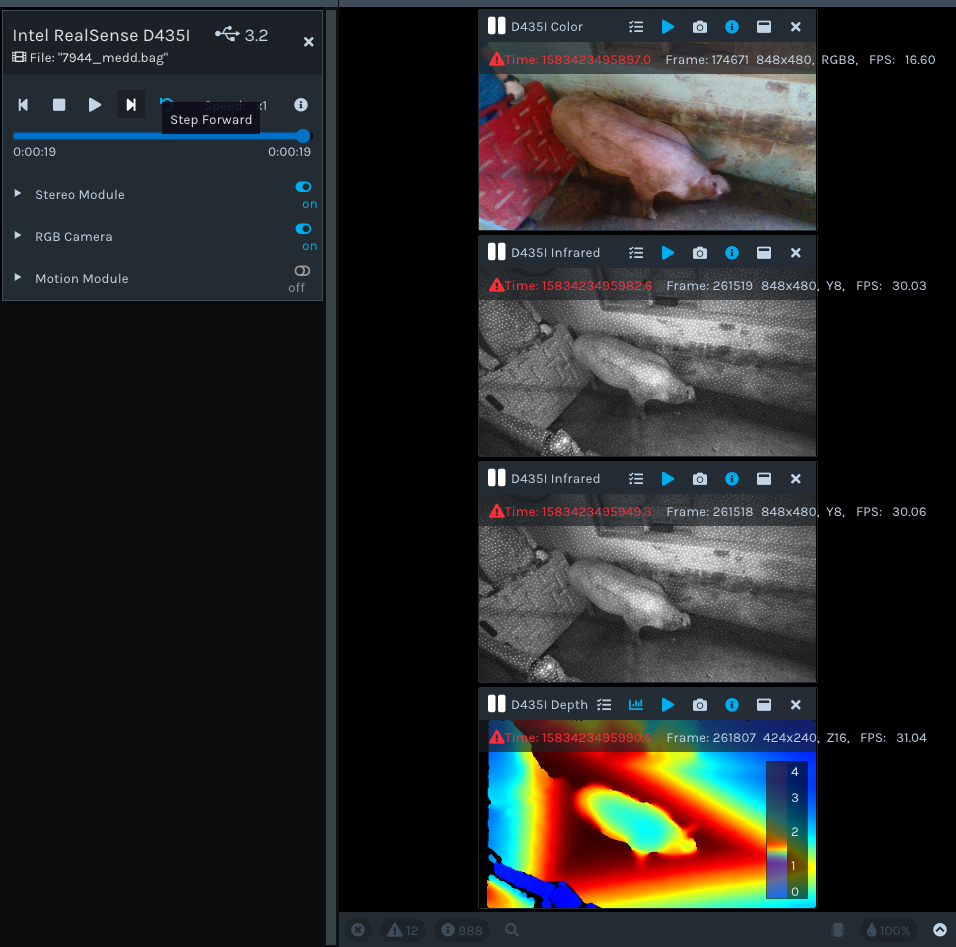

According to the viewer application, the last frame extracted by the second approach is well before the actual last frame (see image below)

The correct last frame according to viewer application

Can someone please explain this behavior? It is making it difficult for me to build a consistent pipeline for my project. Thanks!
