---

output: github_document

---

```{r setup, include=FALSE, message=FALSE, warning=TRUE}

knitr::opts_chunk$set(message = FALSE, warning = FALSE, fig.align = 'center', fig.path = "man/figures/")

```

# DALEXtra <img src="man/figures/logo.png" align="right" width="150"/>

[](https://github.com/maksymiuks/DALEXtra/actions?query=workflow%3AR-CMD-check)

[](https://codecov.io/github/ModelOriented/DALEXtra?branch=master)

[](https://cran.r-project.org/package=DALEXtra)

[](http://cranlogs.r-pkg.org/badges/grand-total/DALEXtra)

[](http://drwhy.ai/#BackBone)

# Overview

The `DALEXtra` package is an extension pack for the [DALEX](https://modeloriented.github.io/DALEX) package. It contains various tools for XAI (eXplainable Artificial Intelligence) that help us inspect and improve our models. The functionality of `DALEXtra` can be divided into two areas.

* Champion-Challenger analysis
    + Lets us compare two or more machine-learning models, determine which one is better, and improve both of them.
    + Funnel Plot of performance measures as an innovative approach to measure comparison.
    + Automatic HTML report.
* Cross language comparison
    + Creating explainers for models created in different languages so they can be explained using R tools like the [DrWhy.AI](https://github.com/ModelOriented/DrWhy) family.
    + Currently supported are **Python** *scikit-learn* and *keras*, **Java** *h2o*, **R** *xgboost*, *mlr*, *mlr3* and *tidymodels*.

## Installation

```{r eval = FALSE}

# Install the development version from GitHub:
# install.packages("devtools")

# it is recommended to install the latest version of DALEX from GitHub
devtools::install_github("ModelOriented/DALEX")

devtools::install_github("ModelOriented/DALEXtra")

```

or the latest CRAN version:

```{r eval = FALSE}

install.packages("DALEX")

install.packages("DALEXtra")

```

Other packages that are useful when working with explanations:

```{r eval = FALSE}

devtools::install_github("ModelOriented/ingredients")

devtools::install_github("ModelOriented/iBreakDown")

devtools::install_github("ModelOriented/shapper")

devtools::install_github("ModelOriented/auditor")

devtools::install_github("ModelOriented/modelStudio")

```

The above packages can be used together with an explainer object to create explanations (ingredients, iBreakDown, shapper), audit our model (auditor) or automate the model exploration process (modelStudio).

# Champion-Challenger analysis

Without any doubt, comparison of models, especially black-box ones, is a very important use case nowadays. New models are created every day, and we need tools that allow us to determine which one is better. For this purpose we present Champion-Challenger analysis. It is a set of functions that create comparisons of models, which can later be gathered into a single report with generic comments. An example report can be found [here](http://htmlpreview.github.io/?https://github.com/ModelOriented/DALEXtra/blob/master/inst/ChampionChallenger/DALEXtra_example_of_report.html). As you can see, any explanation that has a generic `plot()` function can be plotted.

## Funnel Plot

The core of our analysis is the funnel plot. It lets us find subsets of data where one of the models is significantly better than the others. That ability is extremely useful when we have models with similar overall performance and want to know which one we should use.
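The idea of comparing models on subsets of the data can be illustrated with base R alone. The sketch below is not how `funnel_measure()` is implemented; it uses simulated data and two made-up sets of predictions (`pred_a`, `pred_b`, both hypothetical) only to show how a per-bin RMSE difference can reveal regions where one model is better even when the overall scores are close.

```r
# Simulated data: two hypothetical "models" whose errors depend on x
set.seed(1)
n <- 500
x <- runif(n)
y <- 2 * x + rnorm(n, sd = 0.1)
pred_a <- y + rnorm(n, sd = 0.05 + 0.3 * x)        # model A gets worse as x grows
pred_b <- y + rnorm(n, sd = 0.05 + 0.3 * (1 - x))  # model B gets worse as x shrinks

rmse <- function(observed, predicted) sqrt(mean((observed - predicted)^2))

# Split x into 5 quantile-based bins
breaks <- quantile(x, probs = seq(0, 1, length.out = 6))
bins <- cut(x, breaks = breaks, include.lowest = TRUE)

# RMSE of each model within each bin, and their difference
per_bin <- data.frame(
  bin = levels(bins),
  rmse_a = as.numeric(tapply(seq_len(n), bins, function(i) rmse(y[i], pred_a[i]))),
  rmse_b = as.numeric(tapply(seq_len(n), bins, function(i) rmse(y[i], pred_b[i])))
)
per_bin$difference <- per_bin$rmse_a - per_bin$rmse_b

per_bin
```

A negative `difference` marks a bin where the first model has the lower error, a positive one a bin where the second model does.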

```{r}
library("mlr")
library("DALEXtra")

# Regression task: predict the price per square metre of an apartment
task <- mlr::makeRegrTask(
  id = "R",
  data = apartments,
  target = "m2.price"
)

# Linear model and its explainer
learner_lm <- mlr::makeLearner(
  "regr.lm"
)
model_lm <- mlr::train(learner_lm, task)
explainer_lm <- explain_mlr(model_lm, apartmentsTest, apartmentsTest$m2.price, label = "LM",
                            verbose = FALSE, precalculate = FALSE)

# Random forest (ranger) and its explainer
learner_rf <- mlr::makeLearner(
  "regr.ranger",
  num.trees = 50
)
model_rf <- mlr::train(learner_rf, task)
explainer_rf <- explain_mlr(model_rf, apartmentsTest, apartmentsTest$m2.price, label = "RF",
                            verbose = FALSE, precalculate = FALSE)

# Compare both models on subsets of the test data;
# m2.per.room is an extra variable that neither model was fitted on
plot_data <- funnel_measure(explainer_lm, explainer_rf,
                            partition_data = cbind(apartmentsTest,
                                                   "m2.per.room" = apartmentsTest$surface/apartmentsTest$no.rooms),
                            nbins = 5, measure_function = DALEX::loss_root_mean_square, show_info = FALSE)
```

```{r fig.width=12, fig.height=7}

plot(plot_data)[[1]]

```

Such a situation is shown in the plot above. Both the `LM` and `RF` models have similar RMSE, but the Funnel Plot shows that if we want to predict expensive or cheap apartments, we should definitely use `LM`, while `RF` is the better choice for average-priced apartments. Also, without any doubt, `LM` is much better than `RF` for the `Srodmiescie` district. This use case shows how powerful a tool the Funnel Plot can be. For example, we can combine two or more models into one based on the areas identified from the plot and thus improve our models. Another advantage of the Funnel Plot is that it doesn't require the model to be fitted with the variables shown on the plot; as you can see, `m2.per.room` is an artificial variable.
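Combining models by area can be sketched in base R as follows. This is an illustration on simulated data, not a `DALEXtra` feature: the predictions `pred_a` and `pred_b` are made up, and the rule "use whichever model has the lower RMSE in a bin" is only one possible way to act on what the plot shows.

```r
# Simulated data: two hypothetical models that are good in different regions of x
set.seed(2)
n <- 1000
x <- runif(n)
y <- 2 * x + rnorm(n, sd = 0.1)
pred_a <- y + rnorm(n, sd = 0.05 + 0.3 * x)
pred_b <- y + rnorm(n, sd = 0.05 + 0.3 * (1 - x))

rmse <- function(observed, predicted) sqrt(mean((observed - predicted)^2))

# Five equal-width bins over the range of x
bins <- cut(x, breaks = seq(0, 1, by = 0.2), include.lowest = TRUE)

# For each bin, record whether model A has the lower (or equal) RMSE
use_a <- as.vector(tapply(
  seq_len(n), bins,
  function(i) rmse(y[i], pred_a[i]) <= rmse(y[i], pred_b[i])
))

# Combined prediction: model A where it wins in the bin, model B otherwise
pred_combined <- ifelse(use_a[as.integer(bins)], pred_a, pred_b)

scores <- c(
  A = rmse(y, pred_a),
  B = rmse(y, pred_b),
  combined = rmse(y, pred_combined)
)
scores
```

Because the winner is picked per bin on the same data that is used for scoring, the combined RMSE here cannot be worse than either single model. In practice the choice should be checked on data that was not used to select the bins.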

# Cross language comparison

Here we present a short use case for our package and its compatibility with Python.

## How to set up Anaconda

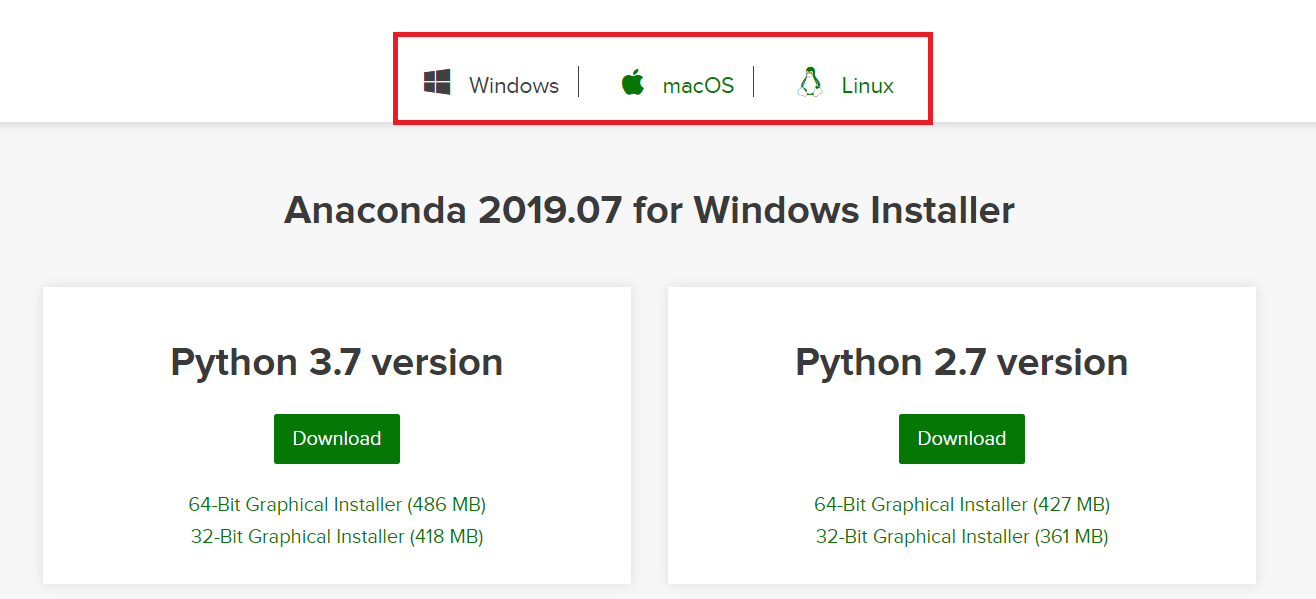

In order to use some features of `DALEXtra`, Anaconda is needed. The easiest way to get it is to visit the [Anaconda website](https://www.anaconda.com/distribution) and choose the proper OS, as shown in the following picture.

The Python version chosen when downloading Anaconda does not matter much. You can always create a virtual environment with any version of Python, no matter which version was downloaded first.

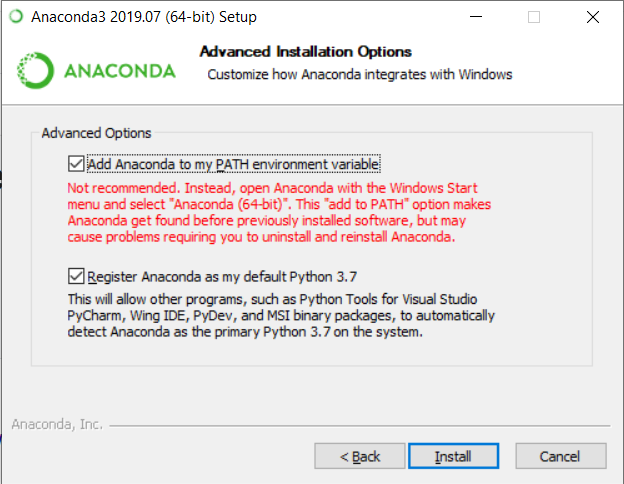

### Windows

When using Windows, it is crucial to add conda to the PATH environment variable. You can do it during the installation by marking this checkbox.

<center>

</center>

or, if conda is already installed, follow [these instructions](https://stackoverflow.com/a/44597801/9717584).

### Unix

On Unix-like operating systems, adding conda to PATH is not required.
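On either platform, one quick way to see whether conda is visible from the current R session is the base R function `Sys.which()`. This is only a minimal check and is not part of `DALEXtra`; on Unix-like systems an empty result does not necessarily mean that something is wrong.

```r
# Look up the conda executable on the PATH seen by the current R session.
# Sys.which() returns an empty string when the program cannot be found.
conda_path <- Sys.which("conda")

if (nzchar(conda_path)) {
  message("conda found at: ", conda_path)
} else {
  message("conda was not found on PATH")
}
```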

### Loading data

First we need to provide the data; an explainer is useless without it. A Python model object does not store the training data, so we always have to provide a dataset. Feel free to use the datasets attached to the `DALEX` package or those stored in the `DALEXtra` files.

```{r}

titanic_test <- read.csv(system.file("extdata", "titanic_test.csv", package = "DALEXtra"))

```

Keep in mind that the data frame includes the target variable (18th column), and scikit-learn models cannot work with it, so it has to be dropped before the data is passed to the model.
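Instead of relying on column positions, the target can also be separated from the features by name. The sketch below uses a small made-up data frame (`toy`, with hypothetical `age` and `fare` columns) in place of `titanic_test`; only the `survived` column name is taken from the real dataset.

```r
# Toy data frame standing in for titanic_test (age and fare are hypothetical columns)
toy <- data.frame(
  age = c(22, 38, 26),
  fare = c(7.25, 71.28, 7.92),
  survived = c(0, 1, 1)
)

target_name <- "survived"

# Keep every column except the target; drop = FALSE keeps a data frame
# even if only one feature column remains
features <- toy[, setdiff(names(toy), target_name), drop = FALSE]
target <- toy[[target_name]]

str(features)
target
```

Selecting by name keeps working if columns are reordered or new features are added.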

### Creating explainer

Creating an explainer from a scikit-learn Python model is very simple thanks to `DALEXtra`. The only things you need to provide are the path to the pickle file and, if necessary, something that identifies the Python environment. It may be a .yml file with the package specification, the name of an existing conda environment, or the path to a Python virtual environment. Calling `explain_scikitlearn` with only the .pkl file and data will use the default Python.

```{r}

library(DALEXtra)

explainer <- explain_scikitlearn(system.file("extdata", "scikitlearn.pkl", package = "DALEXtra"),

yml = system.file("extdata", "testing_environment.yml", package = "DALEXtra"),

data = titanic_test[,1:17], y = titanic_test$survived, colorize = FALSE)

```

Now, with the explainer ready, we can use any of the [DrWhy.AI](https://github.com/ModelOriented/DrWhy/blob/master/README.md) universe tools to create explanations. Here is a small demo.

### Creating explanations

```{r}

library(DALEX)

plot(model_performance(explainer))

library(ingredients)

plot(feature_importance(explainer))

describe(feature_importance(explainer))

library(iBreakDown)

plot(break_down(explainer, titanic_test[2, 1:17]))

describe(break_down(explainer, titanic_test[2, 1:17]))

library(auditor)

eval <- model_evaluation(explainer)

plot_roc(eval)

# Predictions with newdata

predict(explainer, titanic_test[1:10, 1:17])

```

# Acknowledgments

Work on this package was financially supported by the `NCN Opus grant 2016/21/B/ST6/02176`.