diff --git a/docs/install/osx_setup.md b/docs/install/osx_setup.md

index 53039252888d..4e9293efce93 100644

--- a/docs/install/osx_setup.md

+++ b/docs/install/osx_setup.md

@@ -65,6 +65,10 @@ Install the dependencies, required for MXNet, with the following commands:

brew install openblas

brew tap homebrew/core

brew install opencv

+

+ # If building with MKLDNN

+ brew install llvm

+

# Get pip

easy_install pip

# For visualization of network graphs

@@ -89,12 +93,18 @@ The file called ```osx.mk``` has the configuration required for building MXNet o

make -j$(sysctl -n hw.ncpu)

```

+To build with MKLDNN

+

+```bash

+LIBRARY_PATH=$(brew --prefix llvm)/lib/ make -j $(sysctl -n hw.ncpu) CC=$(brew --prefix llvm)/bin/clang++ CXX=$(brew --prefix llvm)/bin/clang++ USE_OPENCV=1 USE_OPENMP=1 USE_MKLDNN=1 USE_BLAS=apple USE_PROFILER=1

+```

+

If building with ```GPU``` support, add the following configuration to config.mk and build:

```bash

echo "USE_CUDA = 1" >> ./config.mk

echo "USE_CUDA_PATH = /usr/local/cuda" >> ./config.mk

echo "USE_CUDNN = 1" >> ./config.mk

- make

+ make -j$(sysctl -n hw.ncpu)

```

**Note:** To change build parameters, edit ```config.mk```.

@@ -124,6 +134,18 @@ You have 2 options:

2. Building MXNet from Source Code

### Building MXNet with the Prebuilt Binary Package

+Install OpenCV and OpenBLAS.

+

+```bash

+brew install opencv

+brew install openblas@0.3.1

+```

+

+Add a soft link to the OpenBLAS installation. This example links the 0.3.1 version:

+

+```bash

+ln -sf /usr/local/opt/openblas/lib/libopenblasp-r0.3.* /usr/local/opt/openblas/lib/libopenblasp-r0.3.1.dylib

+```

Install the latest version (3.5.1+) of R from [CRAN](https://cran.r-project.org/bin/macosx/).

For OS X (Mac) users, MXNet provides a prebuilt binary package for CPUs. The prebuilt package is updated weekly. You can install the package directly in the R console using the following commands:

diff --git a/docs/install/requirements.txt b/docs/install/requirements.txt

index 3d8020cc6ecb..dfc3f70c96fb 100644

--- a/docs/install/requirements.txt

+++ b/docs/install/requirements.txt

@@ -2,7 +2,7 @@ cpplint==1.3.0

h5py==2.8.0rc1

nose

nose-timer

-numpy<1.15.0,>=1.8.2

+numpy<=1.15.2,>=1.8.2

pylint==1.8.3

requests<2.19.0,>=2.18.4

scipy==1.0.1

diff --git a/docs/install/ubuntu_setup.md b/docs/install/ubuntu_setup.md

index 804887aee863..8aac1432f8e0 100644

--- a/docs/install/ubuntu_setup.md

+++ b/docs/install/ubuntu_setup.md

@@ -162,7 +162,7 @@ If building on CPU and using OpenBLAS:

```bash

git clone --recursive https://github.com/apache/incubator-mxnet.git

- cd mxnet

+ cd incubator-mxnet

make -j $(nproc) USE_OPENCV=1 USE_BLAS=openblas

```

@@ -170,7 +170,7 @@ If building on CPU and using MKL and MKL-DNN (make sure MKL is installed accordi

```bash

git clone --recursive https://github.com/apache/incubator-mxnet.git

- cd mxnet

+ cd incubator-mxnet

make -j $(nproc) USE_OPENCV=1 USE_BLAS=mkl USE_MKLDNN=1

```

@@ -178,7 +178,7 @@ If building on GPU and you want OpenCV and OpenBLAS (make sure you have installe

```bash

git clone --recursive https://github.com/apache/incubator-mxnet.git

- cd mxnet

+ cd incubator-mxnet

make -j $(nproc) USE_OPENCV=1 USE_BLAS=openblas USE_CUDA=1 USE_CUDA_PATH=/usr/local/cuda USE_CUDNN=1

```

@@ -189,7 +189,7 @@ Building from source creates a library called ```libmxnet.so``` in the `lib` fol

You may also want to add the MXNet shared library to your `LD_LIBRARY_PATH`:

```bash

-export LD_LIBRARY_PATH=~/incubator-mxnet/lib

+export LD_LIBRARY_PATH=$PWD/lib

```

After building the MXNet library, you may install language bindings.

diff --git a/docs/install/validate_mxnet.md b/docs/install/validate_mxnet.md

index a4cf5446f606..dfe8d063f602 100644

--- a/docs/install/validate_mxnet.md

+++ b/docs/install/validate_mxnet.md

@@ -137,8 +137,25 @@ Please contribute an example!

### Perl

-Please contribute an example!

+Start the pdl2 terminal.

+

+```bash

+$ pdl2

+```

+Run a short *MXNet* Perl program to create a 2x3 matrix of ones, multiply each element by 2, and then add 1. The expected output is a 2x3 matrix in which every element is 3.

+

+```perl

+pdl> use AI::MXNet qw(mx)

+pdl> $a = mx->nd->ones([2, 3])

+pdl> $b = $a * 2 + 1

+pdl> print $b->aspdl

+

+[

+ [3 3 3]

+ [3 3 3]

+]

+```

### R

diff --git a/docs/install/windows_setup.md b/docs/install/windows_setup.md

index 87fd1cc07d8f..8e612454a7e1 100755

--- a/docs/install/windows_setup.md

+++ b/docs/install/windows_setup.md

@@ -11,7 +11,7 @@ The following describes how to install with pip for computers with CPUs, Intel C

- [Build from Source](#build-from-source)

- Install MXNet with a Programming Language API

- [Python](#install-the-mxnet-package-for-python)

- - [R](#install-mxnet-package-for-r)

+ - [R](#install-the-mxnet-package-for-r)

- [Julia](#install-the-mxnet-package-for-julia)

@@ -119,7 +119,7 @@ We provide two primary options to build and install MXNet yourself using [Micros

**NOTE:** Visual Studio 2017's compiler is `vc15`. This is not to be confused with Visual Studio 2015's compiler, `vc14`.

-You also have the option to install MXNet with MKL or MKLDNN. In this case it is recommended that you refer to the [MKLDNN_README](https://github.com/apache/incubator-mxnet/blob/master/MKLDNN_README.md).

+You also have the option to install MXNet with MKL or MKL-DNN. In this case it is recommended that you refer to the [MKLDNN_README](https://github.com/apache/incubator-mxnet/blob/master/MKLDNN_README.md).

**Option 1: Build with Microsoft Visual Studio 2017 (VS2017)**

@@ -137,14 +137,14 @@ To build and install MXNet yourself using [VS2017](https://www.visualstudio.com/

```

1. Download and install [CMake](https://cmake.org/download) if it is not already installed. [CMake v3.12.2](https://cmake.org/files/v3.12/cmake-3.12.2-win64-x64.msi) has been tested with MXNet.

1. Download and run the [OpenCV](https://sourceforge.net/projects/opencvlibrary/files/opencv-win/3.4.1/opencv-3.4.1-vc14_vc15.exe/download) package. There are more recent versions of OpenCV, so please create an issue/PR to update this info if you validate one of these later versions.

-1. This will unzip several files. You can place them in another directory if you wish.

-1. Set the environment variable `OpenCV_DIR` to point to the OpenCV build directory that you just unzipped (e.g., `OpenCV_DIR = C:\utils\opencv\build`).

+1. This will unzip several files. You can place them in another directory if you wish. We will use `C:\utils`(```mkdir C:\utils```) as our default path.

+1. Set the environment variable `OpenCV_DIR` to point to the OpenCV build directory that you just unzipped. Start ```cmd``` and type `set OpenCV_DIR=C:\utils\opencv\build`.

1. If you don’t have the Intel Math Kernel Library (MKL) installed, you can install it and follow the [MKLDNN_README](https://github.com/apache/incubator-mxnet/blob/master/MKLDNN_README.md) from here, or you can use OpenBLAS. These instructions will assume you're using OpenBLAS.

1. Download the [OpenBlas](https://sourceforge.net/projects/openblas/files/v0.2.19/OpenBLAS-v0.2.19-Win64-int32.zip/download) package. Later versions of OpenBLAS are available, but you would need to build from source. v0.2.19 is the most recent version that ships with binaries. Contributions of more recent binaries would be appreciated.

-1. Unzip the file. You can place the unzipped files and folders in another directory if you wish.

-1. Set the environment variable `OpenBLAS_HOME` to point to the OpenBLAS directory that contains the `include` and `lib` directories (e.g., `OpenBLAS_HOME = C:\utils\OpenBLAS`).

-1. Download and install [CUDA](https://developer.nvidia.com/cuda-downloads?target_os=Windows&target_arch=x86_64&target_version=10&target_type=exelocal). If you already had CUDA, then installed VS2017, you should reinstall CUDA now so that you get the CUDA toolkit components for VS2017 integration.

-1. Download and install cuDNN. To get access to the download link, register as an NVIDIA community user. Then Follow the [link](http://docs.nvidia.com/deeplearning/sdk/cudnn-install/index.html#install-windows) to install the cuDNN.

+1. Unzip the file, rename the extracted folder to ```OpenBLAS```, and put it under `C:\utils`. You can place the unzipped files and folders in another directory if you wish.

+1. Set the environment variable `OpenBLAS_HOME` to point to the OpenBLAS directory that contains the `include` and `lib` directories. In the command prompt (```cmd```), type `set OpenBLAS_HOME=C:\utils\OpenBLAS`.

+1. Download and install [CUDA](https://developer.nvidia.com/cuda-downloads?target_os=Windows&target_arch=x86_64&target_version=10&target_type=exelocal). If you already had CUDA, then installed VS2017, you should reinstall CUDA now so that you get the CUDA toolkit components for VS2017 integration. Note that the latest CUDA version supported by MXNet is [9.2](https://developer.nvidia.com/cuda-92-download-archive). You can find other CUDA versions on the [Legacy Releases](https://developer.nvidia.com/cuda-toolkit-archive) page.

+1. Download and install cuDNN. To get access to the download link, register as an NVIDIA community user. Then follow the [link](http://docs.nvidia.com/deeplearning/sdk/cudnn-install/index.html#install-windows) to install cuDNN, and put its libraries into ```C:\cuda```.

1. Download and install [git](https://git-for-windows.github.io/) if you haven't already.

After you have installed all of the required dependencies, build the MXNet source code:

@@ -158,13 +158,14 @@ git clone https://github.com/apache/incubator-mxnet.git --recursive

3. Verify that the `DCUDNN_INCLUDE` and `DCUDNN_LIBRARY` environment variables are pointing to the `include` folder and `cudnn.lib` file of your CUDA installed location, and `C:\incubator-mxnet` is the location of the source code you just cloned in the previous step.

4. Create a build dir using the following command and go to the directory, for example:

```

-mkdir C:\build

-cd C:\build

+mkdir C:\incubator-mxnet\build

+cd C:\incubator-mxnet\build

```

5. Compile the MXNet source code with `cmake` by using following command:

```

cmake -G "Visual Studio 15 2017 Win64" -T cuda=9.2,host=x64 -DUSE_CUDA=1 -DUSE_CUDNN=1 -DUSE_NVRTC=1 -DUSE_OPENCV=1 -DUSE_OPENMP=1 -DUSE_BLAS=open -DUSE_LAPACK=1 -DUSE_DIST_KVSTORE=0 -DCUDA_ARCH_LIST=Common -DCUDA_TOOLSET=9.2 -DCUDNN_INCLUDE=C:\cuda\include -DCUDNN_LIBRARY=C:\cuda\lib\x64\cudnn.lib "C:\incubator-mxnet"

```

+* Make sure that the environment variables (`OpenBLAS_HOME`, `OpenCV_DIR`) are set correctly, and change the Visual Studio 2017 version to v14.11 before entering the command above.

6. After CMake has completed successfully, compile the MXNet source code by using the following command:

```

msbuild mxnet.sln /p:Configuration=Release;Platform=x64 /maxcpucount

@@ -206,14 +207,28 @@ We have installed MXNet core library. Next, we will install MXNet interface pack

- [Julia](#install-the-mxnet-package-for-julia)

- **Scala** is not yet available for Windows

-## Install MXNet for Python

+## Install the MXNet Package for Python

These steps are required after building from source. If you already installed MXNet by using pip, you do not need to do these steps to use MXNet with Python.

1. Install ```Python``` using windows installer available [here](https://www.python.org/downloads/release/python-2712/).

2. Install ```Numpy``` using windows installer available [here](https://scipy.org/index.html).

-3. Next, we install Python package interface for MXNet. You can find the Python interface package for [MXNet on GitHub](https://github.com/dmlc/mxnet/tree/master/python/mxnet).

-

+3. Start ```cmd``` and create a folder named ```common```(```mkdir C:\common```)

+4. Download [mingw64_dll.zip](https://sourceforge.net/projects/openblas/files/v0.2.12/mingw64_dll.zip/download), unzip it, and copy the three libraries (.dll files) that openblas.dll depends on to ```C:\common```.

+5. Copy the required .dll files to ```C:\common``` and make sure the following libraries (.dll files) are in the folder.

+```

+libgcc_s_seh-1.dll (in mingw64_dll)

+libgfortran-3.dll (in mingw64_dll)

+libquadmath-0.dll (in mingw64_dll)

+libopenblas.dll (in the OpenBLAS folder you downloaded)

+opencv_world341.dll (in the OpenCV folder you downloaded)

+```

+6. Add ```C:\common``` to Environment Variables.

+ * Type ```control sysdm.cpl``` in ```cmd```

+ * Select the **Advanced tab** and click **Environment Variables**

+ * Double-click **Path** and click **New**

+ * Add ```C:\common``` and click OK

+7. Use setup.py to install the package.

```bash

# Assuming you are in root mxnet source code folder

cd python

diff --git a/docs/mxdoc.py b/docs/mxdoc.py

index 7092b9ee9eaa..5e86c1c6dd48 100644

--- a/docs/mxdoc.py

+++ b/docs/mxdoc.py

@@ -40,11 +40,12 @@

for section in [ _DOC_SET ]:

print("Document sets to generate:")

- for candidate in [ 'scala_docs', 'clojure_docs', 'doxygen_docs', 'r_docs' ]:

+ for candidate in [ 'scala_docs', 'java_docs', 'clojure_docs', 'doxygen_docs', 'r_docs' ]:

print('%-12s : %s' % (candidate, parser.get(section, candidate)))

_MXNET_DOCS_BUILD_MXNET = parser.getboolean('mxnet', 'build_mxnet')

_SCALA_DOCS = parser.getboolean(_DOC_SET, 'scala_docs')

+_JAVA_DOCS = parser.getboolean(_DOC_SET, 'java_docs')

_CLOJURE_DOCS = parser.getboolean(_DOC_SET, 'clojure_docs')

_DOXYGEN_DOCS = parser.getboolean(_DOC_SET, 'doxygen_docs')

_R_DOCS = parser.getboolean(_DOC_SET, 'r_docs')

@@ -58,7 +59,8 @@

# language names and the according file extensions and comment symbol

_LANGS = {'python' : ('py', '#'),

'r' : ('R','#'),

- 'scala' : ('scala', '#'),

+ 'scala' : ('scala', '//'),

+ 'java' : ('java', '//'),

'julia' : ('jl', '#'),

'perl' : ('pl', '#'),

'cpp' : ('cc', '//'),

@@ -101,21 +103,39 @@ def build_r_docs(app):

_run_cmd('mkdir -p ' + dest_path + '; mv ' + pdf_path + ' ' + dest_path)

def build_scala(app):

- """build scala for scala docs and clojure docs to use"""

+ """build scala for scala docs, java docs, and clojure docs to use"""

_run_cmd("cd %s/.. && make scalapkg" % app.builder.srcdir)

_run_cmd("cd %s/.. && make scalainstall" % app.builder.srcdir)

def build_scala_docs(app):

"""build scala doc and then move the outdir"""

scala_path = app.builder.srcdir + '/../scala-package'

- # scaldoc fails on some apis, so exit 0 to pass the check

- _run_cmd('cd ' + scala_path + '; scaladoc `find . -type f -name "*.scala" | egrep \"\/core|\/infer\" | egrep -v \"Suite\"`; exit 0')

+ scala_doc_sources = 'find . -type f -name "*.scala" | egrep \"\.\/core|\.\/infer\" | egrep -v \"Suite\"'

+ scala_doc_classpath = ':'.join([

+ '`find native -name "*.jar" | grep "target/lib/" | tr "\\n" ":" `',

+ '`find macros -name "*-SNAPSHOT.jar" | tr "\\n" ":" `'

+ ])

+ _run_cmd('cd {}; scaladoc `{}` -classpath {} -feature -deprecation; exit 0'

+ .format(scala_path, scala_doc_sources, scala_doc_classpath))

dest_path = app.builder.outdir + '/api/scala/docs'

_run_cmd('rm -rf ' + dest_path)

_run_cmd('mkdir -p ' + dest_path)

+ # 'index' and 'package.html' do not exist in later versions of scala; delete these after upgrading scala>2.12.x

scaladocs = ['index', 'index.html', 'org', 'lib', 'index.js', 'package.html']

for doc_file in scaladocs:

- _run_cmd('cd ' + scala_path + ' && mv -f ' + doc_file + ' ' + dest_path)

+ _run_cmd('cd ' + scala_path + ' && mv -f ' + doc_file + ' ' + dest_path + '; exit 0')

+

+def build_java_docs(app):

+ """build java docs and then move the outdir"""

+ java_path = app.builder.srcdir + '/../scala-package/core/src/main/scala/org/apache/mxnet/'

+ # scaladoc fails on some apis, so exit 0 to pass the check

+ _run_cmd('cd ' + java_path + '; scaladoc `find . -type f -name "*.scala" | egrep \"\/javaapi\" | egrep -v \"Suite\"`; exit 0')

+ dest_path = app.builder.outdir + '/api/java/docs'

+ _run_cmd('rm -rf ' + dest_path)

+ _run_cmd('mkdir -p ' + dest_path)

+ javadocs = ['index', 'index.html', 'org', 'lib', 'index.js', 'package.html']

+ for doc_file in javadocs:

+ _run_cmd('cd ' + java_path + ' && mv -f ' + doc_file + ' ' + dest_path + '; exit 0')

def build_clojure_docs(app):

"""build clojure doc and then move the outdir"""

@@ -125,7 +145,7 @@ def build_clojure_docs(app):

_run_cmd('rm -rf ' + dest_path)

_run_cmd('mkdir -p ' + dest_path)

clojure_doc_path = app.builder.srcdir + '/../contrib/clojure-package/target/doc'

- _run_cmd('cd ' + clojure_doc_path + ' && cp -r * ' + dest_path)

+ _run_cmd('cd ' + clojure_doc_path + ' && cp -r * ' + dest_path + '; exit 0')

def _convert_md_table_to_rst(table):

"""Convert a markdown table to rst format"""

@@ -404,7 +424,6 @@ def add_buttons(app, docname, source):

# source[i] = '\n'.join(lines)

def setup(app):

-

# If MXNET_DOCS_BUILD_MXNET is set something different than 1

# Skip the build step

if os.getenv('MXNET_DOCS_BUILD_MXNET', '1') == '1' or _MXNET_DOCS_BUILD_MXNET:

@@ -419,6 +438,9 @@ def setup(app):

if _SCALA_DOCS:

print("Building Scala Docs!")

app.connect("builder-inited", build_scala_docs)

+ if _JAVA_DOCS:

+ print("Building Java Docs!")

+ app.connect("builder-inited", build_java_docs)

if _CLOJURE_DOCS:

print("Building Clojure Docs!")

app.connect("builder-inited", build_clojure_docs)
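
The `; exit 0` suffix that this change appends to several commands makes the shell report success even when the preceding command fails, so a missing scaladoc artifact does not abort the docs build. A minimal sketch of that behavior follows. It assumes a POSIX shell and that `_run_cmd` ultimately hands its string to a shell (its body is not shown in this diff); `shell_status` is a hypothetical helper, not part of `mxdoc.py`.

```python
import subprocess


def shell_status(cmd):
    """Run cmd through the shell and return its exit status."""
    return subprocess.call(cmd, shell=True)


# A failing command normally yields a non-zero status...
failing = shell_status("false")
# ...but a trailing `exit 0` replaces it with success.
masked = shell_status("false; exit 0")
# `&&` binds tighter than `;`, so a failed `cd ... && mv ...` chain is masked too.
chained = shell_status("cd /nonexistent-dir-for-demo 2>/dev/null && true; exit 0")

print(failing != 0, masked, chained)
```

The trade-off is that genuine failures are hidden as well, so a build that silently produces no docs will still pass this step.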

diff --git a/docs/settings.ini b/docs/settings.ini

index b8e486e58e87..1f2097125ff6 100644

--- a/docs/settings.ini

+++ b/docs/settings.ini

@@ -1,68 +1,85 @@

[mxnet]

build_mxnet = 0

+[document_sets_tutorial]

+clojure_docs = 0

+doxygen_docs = 1

+r_docs = 0

+scala_docs = 0

+

[document_sets_default]

clojure_docs = 1

doxygen_docs = 1

+java_docs = 1

r_docs = 0

scala_docs = 1

[document_sets_1.2.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 1

[document_sets_v1.2.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 1

[document_sets_1.1.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 0

[document_sets_v1.1.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 0

[document_sets_1.0.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 0

[document_sets_v1.0.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 0

[document_sets_0.12.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 0

[document_sets_v0.12.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 0

[document_sets_0.11.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 0

[document_sets_v0.11.0]

clojure_docs = 0

doxygen_docs = 1

+java_docs = 0

r_docs = 0

scala_docs = 0
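
As a rough illustration of how these flags are consumed, the sketch below reads two of the sections with Python 3's standard `configparser`. It is an assumption that `mxdoc.py` uses this exact parser class, and `doc_flag` is a hypothetical helper. Note that `[document_sets_tutorial]`, as added in this diff, has no `java_docs` key, so a lookup without a fallback raises for that section.

```python
import configparser

SETTINGS = """
[document_sets_tutorial]
clojure_docs = 0
doxygen_docs = 1
r_docs = 0
scala_docs = 0

[document_sets_default]
clojure_docs = 1
doxygen_docs = 1
java_docs = 1
r_docs = 0
scala_docs = 1
"""

parser = configparser.ConfigParser()
parser.read_string(SETTINGS)


def doc_flag(section, key):
    """Return the boolean flag, treating a missing key as disabled."""
    return parser.getboolean(section, key, fallback=False)


default_java = doc_flag("document_sets_default", "java_docs")
tutorial_java = doc_flag("document_sets_tutorial", "java_docs")

# Without a fallback, the missing key raises NoOptionError.
try:
    parser.getboolean("document_sets_tutorial", "java_docs")
    strict_lookup_ok = True
except configparser.NoOptionError:
    strict_lookup_ok = False

print(default_java, tutorial_java, strict_lookup_ok)
```

Either adding `java_docs = 0` to the tutorial section or reading the flag with a fallback would avoid the error.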

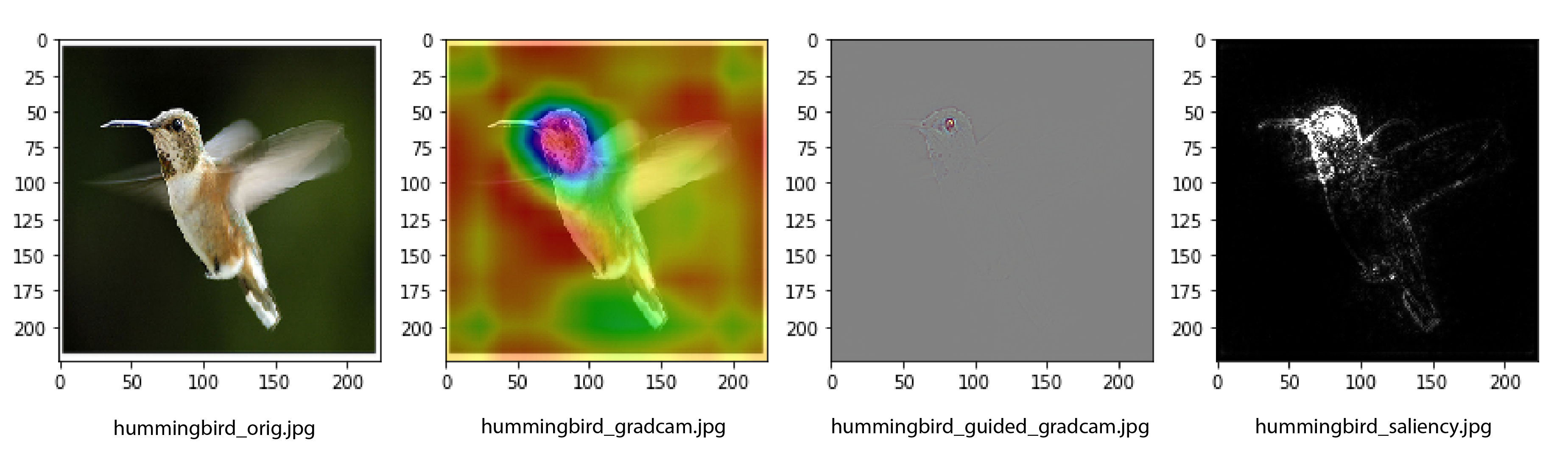

diff --git a/example/cnn_visualization/gradcam.py b/docs/tutorial_utils/vision/cnn_visualization/gradcam.py

similarity index 98%

rename from example/cnn_visualization/gradcam.py

rename to docs/tutorial_utils/vision/cnn_visualization/gradcam.py

index a8708f787584..54cb65eef11b 100644

--- a/example/cnn_visualization/gradcam.py

+++ b/docs/tutorial_utils/vision/cnn_visualization/gradcam.py

@@ -249,8 +249,8 @@ def visualize(net, preprocessed_img, orig_img, conv_layer_name):

imggrad = get_image_grad(net, preprocessed_img)

conv_out, conv_out_grad = get_conv_out_grad(net, preprocessed_img, conv_layer_name=conv_layer_name)

- cam = get_cam(imggrad, conv_out)

-

+ cam = get_cam(conv_out_grad, conv_out)

+ cam = cv2.resize(cam, (imggrad.shape[1], imggrad.shape[2]))

ggcam = get_guided_grad_cam(cam, imggrad)

img_ggcam = grad_to_image(ggcam)
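
The fix above passes `conv_out_grad` (the gradient with respect to the convolution layer's output) instead of `imggrad` (the gradient with respect to the input image). Only the former has one channel per feature map in `conv_out`. The sketch below shows the standard Grad-CAM computation on nested lists, without the resize step. The body of `get_cam` is not shown in this diff, so `grad_cam` here is a hypothetical stand-in for illustration, not the repository's implementation.

```python
def grad_cam(conv_out, conv_out_grad):
    """Toy Grad-CAM over nested lists shaped [channels][height][width]."""
    height, width = len(conv_out[0]), len(conv_out[0][0])
    # One weight per channel: the spatial mean of that channel's gradient.
    weights = [
        sum(sum(row) for row in grad) / (height * width)
        for grad in conv_out_grad
    ]
    # Weighted sum of the feature maps, followed by ReLU.
    cam = [[0.0] * width for _ in range(height)]
    for weight, fmap in zip(weights, conv_out):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * fmap[i][j]
    return [[max(value, 0.0) for value in row] for row in cam]


# Two 2x2 feature maps with their gradients (made-up numbers).
features = [[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 1.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-2.0, -2.0], [-2.0, -2.0]]]
cam = grad_cam(features, gradients)
print(cam)
```

Because the resulting map has the spatial size of the convolution output, it needs to be resized to the image size before it is combined with `imggrad`, which is what the added `cv2.resize` call does.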

diff --git a/docs/tutorials/basic/module.md b/docs/tutorials/basic/module.md

index 191e3baaaffc..f7a4d6e25de7 100644

--- a/docs/tutorials/basic/module.md

+++ b/docs/tutorials/basic/module.md

@@ -39,11 +39,16 @@ training examples each time. A separate iterator is also created for test data.

```python

import logging

+import random

logging.getLogger().setLevel(logging.INFO)

+

import mxnet as mx

import numpy as np

mx.random.seed(1234)

+np.random.seed(1234)

+random.seed(1234)

+

fname = mx.test_utils.download('https://s3.us-east-2.amazonaws.com/mxnet-public/letter_recognition/letter-recognition.data')

data = np.genfromtxt(fname, delimiter=',')[:,1:]

label = np.array([ord(l.split(',')[0])-ord('A') for l in open(fname, 'r')])

@@ -64,7 +69,7 @@ net = mx.sym.FullyConnected(net, name='fc1', num_hidden=64)

net = mx.sym.Activation(net, name='relu1', act_type="relu")

net = mx.sym.FullyConnected(net, name='fc2', num_hidden=26)

net = mx.sym.SoftmaxOutput(net, name='softmax')

-mx.viz.plot_network(net)

+mx.viz.plot_network(net, node_attrs={"shape":"oval","fixedsize":"false"})

```

@@ -135,11 +140,17 @@ for epoch in range(5):

print('Epoch %d, Training %s' % (epoch, metric.get()))

```

- Epoch 0, Training ('accuracy', 0.4554375)

- Epoch 1, Training ('accuracy', 0.6485625)

- Epoch 2, Training ('accuracy', 0.7055625)

- Epoch 3, Training ('accuracy', 0.7396875)

- Epoch 4, Training ('accuracy', 0.764375)

+

+Expected output:

+

+

+```

+Epoch 0, Training ('accuracy', 0.434625)

+Epoch 1, Training ('accuracy', 0.6516875)

+Epoch 2, Training ('accuracy', 0.6968125)

+Epoch 3, Training ('accuracy', 0.7273125)

+Epoch 4, Training ('accuracy', 0.7575625)

+```

To learn more about these APIs, visit [Module API](http://mxnet.io/api/python/module/module.html).

@@ -172,34 +183,36 @@ mod.fit(train_iter,

optimizer='sgd',

optimizer_params={'learning_rate':0.1},

eval_metric='acc',

- num_epoch=8)

+ num_epoch=7)

```

- INFO:root:Epoch[0] Train-accuracy=0.364625

- INFO:root:Epoch[0] Time cost=0.388

- INFO:root:Epoch[0] Validation-accuracy=0.557250

- INFO:root:Epoch[1] Train-accuracy=0.633625

- INFO:root:Epoch[1] Time cost=0.470

- INFO:root:Epoch[1] Validation-accuracy=0.634750

- INFO:root:Epoch[2] Train-accuracy=0.697187

- INFO:root:Epoch[2] Time cost=0.402

- INFO:root:Epoch[2] Validation-accuracy=0.665500

- INFO:root:Epoch[3] Train-accuracy=0.735062

- INFO:root:Epoch[3] Time cost=0.402

- INFO:root:Epoch[3] Validation-accuracy=0.713000

- INFO:root:Epoch[4] Train-accuracy=0.762563

- INFO:root:Epoch[4] Time cost=0.408

- INFO:root:Epoch[4] Validation-accuracy=0.742000

- INFO:root:Epoch[5] Train-accuracy=0.782312

- INFO:root:Epoch[5] Time cost=0.400

- INFO:root:Epoch[5] Validation-accuracy=0.778500

- INFO:root:Epoch[6] Train-accuracy=0.797188

- INFO:root:Epoch[6] Time cost=0.392

- INFO:root:Epoch[6] Validation-accuracy=0.798250

- INFO:root:Epoch[7] Train-accuracy=0.807750

- INFO:root:Epoch[7] Time cost=0.401

- INFO:root:Epoch[7] Validation-accuracy=0.789250

+Expected output:

+

+

+```

+INFO:root:Epoch[0] Train-accuracy=0.325437

+INFO:root:Epoch[0] Time cost=0.550

+INFO:root:Epoch[0] Validation-accuracy=0.568500

+INFO:root:Epoch[1] Train-accuracy=0.622188

+INFO:root:Epoch[1] Time cost=0.552

+INFO:root:Epoch[1] Validation-accuracy=0.656500

+INFO:root:Epoch[2] Train-accuracy=0.694375

+INFO:root:Epoch[2] Time cost=0.566

+INFO:root:Epoch[2] Validation-accuracy=0.703500

+INFO:root:Epoch[3] Train-accuracy=0.732187

+INFO:root:Epoch[3] Time cost=0.562

+INFO:root:Epoch[3] Validation-accuracy=0.748750

+INFO:root:Epoch[4] Train-accuracy=0.755375

+INFO:root:Epoch[4] Time cost=0.484

+INFO:root:Epoch[4] Validation-accuracy=0.761500

+INFO:root:Epoch[5] Train-accuracy=0.773188

+INFO:root:Epoch[5] Time cost=0.383

+INFO:root:Epoch[5] Validation-accuracy=0.715000

+INFO:root:Epoch[6] Train-accuracy=0.794687

+INFO:root:Epoch[6] Time cost=0.378

+INFO:root:Epoch[6] Validation-accuracy=0.802250

+```

By default, `fit` function has `eval_metric` set to `accuracy`, `optimizer` to `sgd`

and optimizer_params to `(('learning_rate', 0.01),)`.

@@ -225,12 +238,17 @@ It can be used as follows:

```python

score = mod.score(val_iter, ['acc'])

print("Accuracy score is %f" % (score[0][1]))

-assert score[0][1] > 0.77, "Achieved accuracy (%f) is less than expected (0.77)" % score[0][1]

+assert score[0][1] > 0.76, "Achieved accuracy (%f) is less than expected (0.76)" % score[0][1]

```

- Accuracy score is 0.789250

+

+Expected output:

+```

+Accuracy score is 0.802250

+```

+

Some of the other metrics which can be used are `top_k_acc`(top-k-accuracy),

`F1`, `RMSE`, `MSE`, `MAE`, `ce`(CrossEntropy). To learn more about the metrics,

visit [Evaluation metric](http://mxnet.io/api/python/metric/metric.html).

@@ -252,22 +270,27 @@ mod = mx.mod.Module(symbol=net)

mod.fit(train_iter, num_epoch=5, epoch_end_callback=checkpoint)

```

- INFO:root:Epoch[0] Train-accuracy=0.101062

- INFO:root:Epoch[0] Time cost=0.422

- INFO:root:Saved checkpoint to "mx_mlp-0001.params"

- INFO:root:Epoch[1] Train-accuracy=0.263313

- INFO:root:Epoch[1] Time cost=0.785

- INFO:root:Saved checkpoint to "mx_mlp-0002.params"

- INFO:root:Epoch[2] Train-accuracy=0.452188

- INFO:root:Epoch[2] Time cost=0.624

- INFO:root:Saved checkpoint to "mx_mlp-0003.params"

- INFO:root:Epoch[3] Train-accuracy=0.544125

- INFO:root:Epoch[3] Time cost=0.427

- INFO:root:Saved checkpoint to "mx_mlp-0004.params"

- INFO:root:Epoch[4] Train-accuracy=0.605250

- INFO:root:Epoch[4] Time cost=0.399

- INFO:root:Saved checkpoint to "mx_mlp-0005.params"

+Expected output:

+

+

+```

+INFO:root:Epoch[0] Train-accuracy=0.098437

+INFO:root:Epoch[0] Time cost=0.421

+INFO:root:Saved checkpoint to "mx_mlp-0001.params"

+INFO:root:Epoch[1] Train-accuracy=0.257437

+INFO:root:Epoch[1] Time cost=0.520

+INFO:root:Saved checkpoint to "mx_mlp-0002.params"

+INFO:root:Epoch[2] Train-accuracy=0.457250

+INFO:root:Epoch[2] Time cost=0.562

+INFO:root:Saved checkpoint to "mx_mlp-0003.params"

+INFO:root:Epoch[3] Train-accuracy=0.558187

+INFO:root:Epoch[3] Time cost=0.434

+INFO:root:Saved checkpoint to "mx_mlp-0004.params"

+INFO:root:Epoch[4] Train-accuracy=0.617750

+INFO:root:Epoch[4] Time cost=0.414

+INFO:root:Saved checkpoint to "mx_mlp-0005.params"

+```

To load the saved module parameters, call the `load_checkpoint` function. It

loads the Symbol and the associated parameters. We can then set the loaded

@@ -299,16 +322,25 @@ mod.fit(train_iter,

assert score[0][1] > 0.77, "Achieved accuracy (%f) is less than expected (0.77)" % score[0][1]

```

- INFO:root:Epoch[3] Train-accuracy=0.544125

- INFO:root:Epoch[3] Time cost=0.398

- INFO:root:Epoch[4] Train-accuracy=0.605250

- INFO:root:Epoch[4] Time cost=0.545

- INFO:root:Epoch[5] Train-accuracy=0.644312

- INFO:root:Epoch[5] Time cost=0.592

- INFO:root:Epoch[6] Train-accuracy=0.675000

- INFO:root:Epoch[6] Time cost=0.491

- INFO:root:Epoch[7] Train-accuracy=0.695812

- INFO:root:Epoch[7] Time cost=0.363

+

+Expected output:

+

+

+```

+INFO:root:Epoch[3] Train-accuracy=0.555438

+INFO:root:Epoch[3] Time cost=0.377

+INFO:root:Epoch[4] Train-accuracy=0.616625

+INFO:root:Epoch[4] Time cost=0.457

+INFO:root:Epoch[5] Train-accuracy=0.658438

+INFO:root:Epoch[5] Time cost=0.518

+...........................................

+INFO:root:Epoch[18] Train-accuracy=0.788687

+INFO:root:Epoch[18] Time cost=0.532

+INFO:root:Epoch[19] Train-accuracy=0.789562

+INFO:root:Epoch[19] Time cost=0.531

+INFO:root:Epoch[20] Train-accuracy=0.796250

+INFO:root:Epoch[20] Time cost=0.531

+```
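
The expected outputs in this tutorial are only reproducible because it now seeds Python's `random`, NumPy, and MXNet. A minimal sketch of the idea, using only the standard library generator (`sample` is a hypothetical helper; the NumPy and MXNet generators are seeded separately in the tutorial):

```python
import random


def sample(seed, count=3):
    """Seed the stdlib generator, then draw `count` pseudo-random floats."""
    random.seed(seed)
    return [random.random() for _ in range(count)]


first = sample(1234)
second = sample(1234)
other = sample(4321)
print(first == second, first == other)
```

Seeding fixes the pseudo-random draws but not wall-clock measurements, so the `Time cost` lines will still differ from run to run.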

diff --git a/docs/tutorials/basic/symbol.md b/docs/tutorials/basic/symbol.md

index 7ebcadfc16f3..5e1e3cd8c62f 100644

--- a/docs/tutorials/basic/symbol.md

+++ b/docs/tutorials/basic/symbol.md

@@ -89,7 +89,7 @@ f = mx.sym.reshape(d+e, shape=(1,4))

# broadcast

g = mx.sym.broadcast_to(f, shape=(2,4))

# plot

-mx.viz.plot_network(symbol=g)

+mx.viz.plot_network(symbol=g, node_attrs={"shape":"oval","fixedsize":"false"})

```

The computations declared in the above examples can be bound to the input data

@@ -108,7 +108,7 @@ net = mx.sym.FullyConnected(data=net, name='fc1', num_hidden=128)

net = mx.sym.Activation(data=net, name='relu1', act_type="relu")

net = mx.sym.FullyConnected(data=net, name='fc2', num_hidden=10)

net = mx.sym.SoftmaxOutput(data=net, name='out')

-mx.viz.plot_network(net, shape={'data':(100,200)})

+mx.viz.plot_network(net, shape={'data':(100,200)}, node_attrs={"shape":"oval","fixedsize":"false"})

```

Each symbol takes a (unique) string name. NDArray and Symbol both represent

@@ -211,7 +211,7 @@ def ConvFactory(data, num_filter, kernel, stride=(1,1), pad=(0, 0),name=None, su

prev = mx.sym.Variable(name="Previous Output")

conv_comp = ConvFactory(data=prev, num_filter=64, kernel=(7,7), stride=(2, 2))

shape = {"Previous Output" : (128, 3, 28, 28)}

-mx.viz.plot_network(symbol=conv_comp, shape=shape)

+mx.viz.plot_network(symbol=conv_comp, shape=shape, node_attrs={"shape":"oval","fixedsize":"false"})

```

Then we can define a function that constructs an inception module based on

@@ -237,7 +237,7 @@ def InceptionFactoryA(data, num_1x1, num_3x3red, num_3x3, num_d3x3red, num_d3x3,

return concat

prev = mx.sym.Variable(name="Previous Output")

in3a = InceptionFactoryA(prev, 64, 64, 64, 64, 96, "avg", 32, name="in3a")

-mx.viz.plot_network(symbol=in3a, shape=shape)

+mx.viz.plot_network(symbol=in3a, shape=shape, node_attrs={"shape":"oval","fixedsize":"false"})

```

Finally, we can obtain the whole network by chaining multiple inception

diff --git a/docs/tutorials/c++/subgraphAPI.md b/docs/tutorials/c++/subgraphAPI.md

new file mode 100644

index 000000000000..0ae4341b287c

--- /dev/null

+++ b/docs/tutorials/c++/subgraphAPI.md

@@ -0,0 +1,104 @@

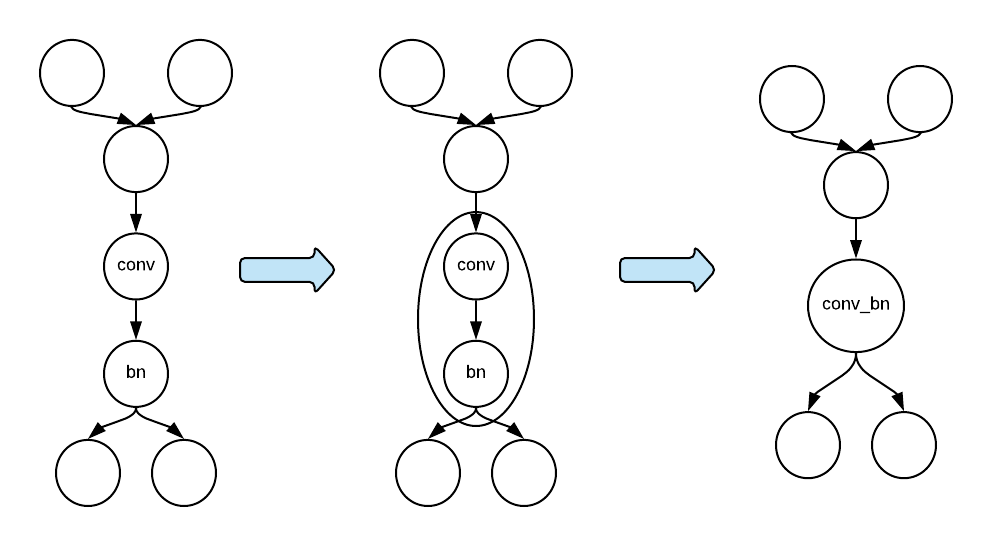

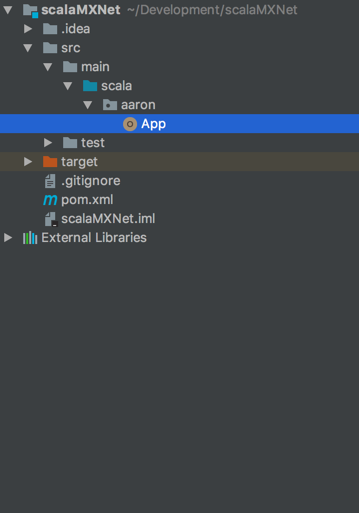

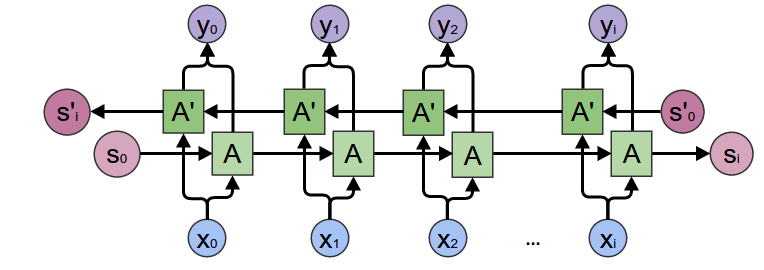

+## Subgraph API

+

+The subgraph API has been proposed and implemented as the default mechanism for integrating backend libraries into MXNet. The subgraph API is a very flexible interface. Although it was proposed as an integration mechanism, it has also been used as a tool for manipulating NNVM graphs for graph-level optimizations, such as operator fusion.

+

+The subgraph API works in the following steps:

+

+* Search for particular patterns in a graph.

+* Group the operators/nodes with particular patterns into a subgraph and shrink the subgraph into a single node.

+* Replace the subgraph in the original graph with the subgraph node.
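
The three steps above can be sketched on a toy model. The example below treats a graph as a simple linear chain of operator names rather than a real NNVM graph, and `fuse_conv_bn` is a hypothetical function written for illustration only; it is not how MXNet implements partitioning.

```python
def fuse_conv_bn(ops):
    """Replace each adjacent Convolution, BatchNorm pair with one node."""
    fused = []
    i = 0
    while i < len(ops):
        # Search: a Convolution immediately followed by a BatchNorm.
        if ops[i] == "Convolution" and i + 1 < len(ops) and ops[i + 1] == "BatchNorm":
            # Group and replace: the pair collapses into one subgraph node.
            fused.append("conv_bn")
            i += 2
        else:
            fused.append(ops[i])
            i += 1
    return fused


graph = ["Convolution", "BatchNorm", "Activation", "Convolution", "Pooling"]
result = fuse_conv_bn(graph)
print(result)
```

A `Convolution` that is not followed by a `BatchNorm` is left untouched, which mirrors the idea of discarding a candidate when the full pattern is not found.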

+

+The figure below illustrates the subgraph mechanism.

+

+

+

+The subgraph API allows the backend developers to customize the subgraph mechanism in two places:

+

+* Subgraph searching: define a subgraph selector to search for particular patterns in a computation graph.

+* Subgraph node creation: attach an operator to run the computation in the subgraph. We can potentially manipulate the subgraph here.

+

+

+The following demonstrates how the subgraph API can be applied to a simple task: replacing `Convolution` and `BatchNorm` with a single `conv_bn` node. Refer to the previous figure for an overview of the process.

+

+The first step is to define a subgraph selector to find the required pattern. To find a pattern that has `Convolution` and `BatchNorm`, we can start the search on the node with `Convolution`. Then from the `Convolution` node, we search for `BatchNorm` along the outgoing edge.

+

+```C++

+class SgSelector : public SubgraphSelector {

+ public:

+ SgSelector() {

+ find_bn = false;

+ }

+ bool Select(const nnvm::Node &n) override {

+ // Here we start on the Convolution node to search for a subgraph.

+ return n.op() && n.op()->name == "Convolution";

+ }

+ bool SelectInput(const nnvm::Node &n, const nnvm::Node &new_node) override {

+ // We don't need to search on the incoming edge.

+ return false;

+ }

+ bool SelectOutput(const nnvm::Node &n, const nnvm::Node &new_node) override {

+ // We search on the outgoing edge. Once we find a BatchNorm node, we won't

+ // accept any more BatchNorm nodes.

+ if (new_node.op() && new_node.op()->name == "BatchNorm" && !find_bn) {

+ find_bn = true;

+ return true;

+ } else {

+ return false;

+ }

+ }

+ std::vector<nnvm::Node *> Filter(const std::vector<nnvm::Node *> &candidates) override {

+ // We might have found a Convolution node, but we might have failed to find a BatchNorm

+ // node that uses the output of the Convolution node. If we failed, we should skip

+ // the Convolution node as well.

+ if (find_bn)

+ return candidates;

+ else

+ return std::vector<nnvm::Node *>();

+ }

+ private:

+ bool find_bn;

+};

+```
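The three selector callbacks can also be traced in plain Python to see how they interact. The sketch below is a toy model, not MXNet's partitioning code: `ToyNode`, `ToySelector`, and `find_subgraph` are hypothetical names introduced here, and the traversal only assumes that a candidate set is grown from a seed node and then passed to the filter once.

```python
class ToyNode:
    """Hypothetical stand-in for a graph node: an operator name plus its consumers."""

    def __init__(self, op, outputs=None):
        self.op = op
        self.outputs = outputs or []


class ToySelector:
    """Mirrors the Select / SelectOutput / Filter steps of SgSelector."""

    def __init__(self):
        self.found_bn = False

    def select(self, node):
        # Seed the search on a Convolution node.
        return node.op == "Convolution"

    def select_output(self, node, new_node):
        # Accept only the first BatchNorm reached along an outgoing edge.
        if new_node.op == "BatchNorm" and not self.found_bn:
            self.found_bn = True
            return True
        return False

    def filter(self, candidates):
        # Drop the whole candidate set if no BatchNorm was attached.
        return candidates if self.found_bn else []


def find_subgraph(seed):
    """Grow a candidate subgraph from `seed` along outgoing edges only."""
    selector = ToySelector()
    if not selector.select(seed):
        return []
    candidates, frontier = [seed], [seed]
    while frontier:
        node = frontier.pop()
        for consumer in node.outputs:
            if selector.select_output(node, consumer):
                candidates.append(consumer)
                frontier.append(consumer)
    return selector.filter(candidates)


relu = ToyNode("Activation")
bn = ToyNode("BatchNorm", [relu])
conv = ToyNode("Convolution", [bn])
lonely_conv = ToyNode("Convolution", [ToyNode("Activation")])

print([n.op for n in find_subgraph(conv)])         # ['Convolution', 'BatchNorm']
print([n.op for n in find_subgraph(lonely_conv)])  # []
```

The second call shows why the filter step matters: a `Convolution` with no `BatchNorm` consumer is selected as a seed but discarded at the end.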

+

+The second step is to define a subgraph property that uses the subgraph selector above to customize the subgraph searching. By defining this class, we can also customize subgraph node creation: we can specify which operator runs the subgraph on the node. In this example, we use `CachedOp`, which itself is a graph executor, to run the subgraph with `Convolution` and `BatchNorm`. In practice, we would most likely use a single operator from a backend library to replace the two operators for execution.

+

+```C++

+class SgProperty : public SubgraphProperty {

+ public:

+ static SubgraphPropertyPtr Create() {

+ return std::make_shared<SgProperty>();

+ }

+ nnvm::NodePtr CreateSubgraphNode(

+ const nnvm::Symbol &sym, const int subgraph_id = 0) const override {

+ // We can use CachedOp to execute the subgraph.

+ nnvm::NodePtr n = nnvm::Node::Create();

+ n->attrs.op = Op::Get("_CachedOp");

+ n->attrs.name = "ConvBN" + std::to_string(subgraph_id);

+ n->attrs.subgraphs.push_back(std::make_shared<nnvm::Symbol>(sym));

+ std::vector<std::pair<std::string, std::string> > flags{{"static_alloc", "true"}};

+ n->attrs.parsed = CachedOpPtr(new CachedOp(sym, flags));

+ return n;

+ }

+ SubgraphSelectorPtr CreateSubgraphSelector() const override {

+ return std::make_shared<SgSelector>();

+ }

+};

+```

+

+After defining the subgraph property, we need to register it.

+

+```C++

+MXNET_REGISTER_SUBGRAPH_PROPERTY(SgTest, SgProperty);

+```

+

+After compiling this subgraph mechanism into MXNet, we can use the environment variable `MXNET_SUBGRAPH_BACKEND` to activate it.

+

+```bash

+export MXNET_SUBGRAPH_BACKEND=SgTest

+```

+

+This tutorial shows a simple example of how to use the subgraph API to search for patterns in an NNVM graph.

+Interested users can try different pattern matching rules (i.e., define their own `SubgraphSelector`) and

+attach different operators to execute the subgraphs.

+

+

diff --git a/docs/tutorials/control_flow/ControlFlowTutorial.md b/docs/tutorials/control_flow/ControlFlowTutorial.md

index 9e4c66f8521d..4b6a23136b5d 100644

--- a/docs/tutorials/control_flow/ControlFlowTutorial.md

+++ b/docs/tutorials/control_flow/ControlFlowTutorial.md

@@ -15,13 +15,13 @@ from mxnet.gluon import HybridBlock

## foreach

`foreach` is a for loop that iterates over the first dimension of the input data (it can be an array or a list of arrays). It is defined with the following signature:

-```python

+```

foreach(body, data, init_states, name) => (outputs, states)

```

It runs the Python function defined in `body` for every slice from the input arrays. The signature of the `body` function is defined as follows:

-```python

+```

body(data, states) => (outputs, states)

```
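The semantics described above can be modeled in a few lines of plain Python. This is only a reference sketch: `foreach_reference` and `running_sum` are hypothetical names, Python lists stand in for NDArrays, and the per-step outputs are collected in a list instead of being stacked into one array.

```python
def foreach_reference(body, data, init_states):
    """Plain-Python model of foreach: loop over the first dimension of `data`."""
    states = init_states
    outputs = []
    for step in data:  # one slice per iteration
        out, states = body(step, states)
        outputs.append(out)
    return outputs, states


def running_sum(x, states):
    """Example body: emit the running total and carry it as the only state."""
    total = states[0] + x
    return total, [total]


outs, final_states = foreach_reference(running_sum, [1, 2, 3, 4], [0])
print(outs)          # [1, 3, 6, 10]
print(final_states)  # [10]
```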

@@ -243,13 +243,13 @@ res, states = lstm(rnn_data, [x for x in init_states], valid_length)

## while_loop

`while_loop` defines a while loop. It has the following signature:

-```python

+```

while_loop(cond, body, loop_vars, max_iterations, name) => (outputs, states)

```

Instead of running over the first dimension of an array, `while_loop` checks a condition function in every iteration and runs a `body` function for computation. The signature of the `body` function is defined as follows:

-```python

+```

body(state1, state2, ...) => (outputs, states)

```
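As a rough mental model, the loop can be written in plain Python as below. `while_loop_reference` is a hypothetical helper, not the MXNet operator: it uses Python scalars, returns the step outputs as a list, and does not pad them to `max_iterations`.

```python
def while_loop_reference(cond, body, loop_vars, max_iterations):
    """Plain-Python model of while_loop with an iteration cap."""
    outputs = []
    steps = 0
    while steps < max_iterations and cond(*loop_vars):
        out, loop_vars = body(*loop_vars)  # body returns (step output, new loop vars)
        outputs.append(out)
        steps += 1
    return outputs, loop_vars


# Sum the integers 0..4: the loop variables are (i, total).
outs, final_vars = while_loop_reference(
    cond=lambda i, total: i < 5,
    body=lambda i, total: (total + i, [i + 1, total + i]),
    loop_vars=[0, 0],
    max_iterations=10,
)
print(outs)        # [0, 1, 3, 6, 10]
print(final_vars)  # [5, 10]
```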

@@ -297,13 +297,13 @@ print(state)

## cond

`cond` defines an if condition. It has the following signature:

-```python

+```

cond(pred, then_func, else_func, name)

```

`cond` checks `pred`, which is a symbol or an NDArray with one element. If its value is true, it calls `then_func`. Otherwise, it calls `else_func`. The signatures of `then_func` and `else_func` are as follows:

-```python

+```

func() => [outputs]

```

diff --git a/docs/tutorials/gluon/hybrid.md b/docs/tutorials/gluon/hybrid.md

index f9f2c112f532..6d64acdce275 100644

--- a/docs/tutorials/gluon/hybrid.md

+++ b/docs/tutorials/gluon/hybrid.md

@@ -125,6 +125,7 @@ with other language front-ends like C, C++ and Scala. To this end, we simply

use `export` and `SymbolBlock.imports`:

```python

+net(x)

net.export('model', epoch=1)

```

diff --git a/docs/tutorials/gluon/info_gan.md b/docs/tutorials/gluon/info_gan.md

new file mode 100644

index 000000000000..c8f07c6fda35

--- /dev/null

+++ b/docs/tutorials/gluon/info_gan.md

@@ -0,0 +1,437 @@

+

+# Image similarity search with InfoGAN

+

+This notebook shows how to implement an InfoGAN based on Gluon. InfoGAN is an extension of GANs, where the generator input is split into two parts: random noise and a latent code (see [InfoGAN Paper](https://arxiv.org/pdf/1606.03657.pdf)).

+The codes are made meaningful by maximizing the mutual information between code and generator output. InfoGAN learns a disentangled representation in a completely unsupervised manner. It can be used for many applications such as image similarity search. This notebook uses the DCGAN example from the [Straight Dope Book](https://gluon.mxnet.io/chapter14_generative-adversarial-networks/dcgan.html) and extends it to create an InfoGAN.

+

+

+```python

+from __future__ import print_function

+from datetime import datetime

+import logging

+import multiprocessing

+import os

+import sys

+import tarfile

+import time

+

+import numpy as np

+from matplotlib import pyplot as plt

+from mxboard import SummaryWriter

+import mxnet as mx

+from mxnet import gluon

+from mxnet import ndarray as nd

+from mxnet.gluon import nn, utils

+from mxnet import autograd

+

+```

+

+The latent code vector can contain several variables, which can be categorical and/or continuous. We set `n_continuous` to 2 and `n_categories` to 10.

+

+

+```python

+batch_size = 64

+z_dim = 100

+n_continuous = 2

+n_categories = 10

+ctx = mx.gpu() if mx.test_utils.list_gpus() else mx.cpu()

+```

+

+Some functions to load and normalize images.

+

+

+```python

+lfw_url = 'http://vis-www.cs.umass.edu/lfw/lfw-deepfunneled.tgz'

+data_path = 'lfw_dataset'

+if not os.path.exists(data_path):

+ os.makedirs(data_path)

+ data_file = utils.download(lfw_url)

+ with tarfile.open(data_file) as tar:

+ tar.extractall(path=data_path)

+

+```

+

+

+```python

+def transform(data, width=64, height=64):

+ data = mx.image.imresize(data, width, height)

+ data = nd.transpose(data, (2,0,1))

+ data = data.astype(np.float32)/127.5 - 1

+ if data.shape[0] == 1:

+ data = nd.tile(data, (3, 1, 1))

+ return data.reshape((1,) + data.shape)

+```
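The scaling step in `transform` maps 8-bit pixel values from `[0, 255]` to `[-1, 1]`, which matches the range of the `tanh` output used by the Generator later on. The sketch below checks that mapping and its inverse on plain numbers; `to_model_range` and `to_pixel_range` are hypothetical helper names, and the inverse rounds to the nearest integer, whereas a cast to `uint8` would truncate.

```python
def to_model_range(pixel):
    """Map a pixel value in [0, 255] to [-1, 1], as transform() does."""
    return pixel / 127.5 - 1.0


def to_pixel_range(value):
    """Inverse mapping back to [0, 255], rounded to the nearest integer."""
    return round((value + 1.0) * 127.5)


for pixel in (0, 64, 127, 255):
    scaled = to_model_range(pixel)
    print(pixel, round(scaled, 4), to_pixel_range(scaled))
```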

+

+

+```python

+def get_files(data_dir):

+ images = []

+ filenames = []

+ for path, _, fnames in os.walk(data_dir):

+ for fname in fnames:

+ if not fname.endswith('.jpg'):

+ continue

+ img = os.path.join(path, fname)

+ img_arr = mx.image.imread(img)

+ img_arr = transform(img_arr)

+ images.append(img_arr)

+ filenames.append(path + "/" + fname)

+ return images, filenames

+```

+

+Load the dataset `lfw_dataset` which contains images of celebrities.

+

+

+```python

+data_dir = 'lfw_dataset'

+images, filenames = get_files(data_dir)

+split = int(len(images)*0.8)

+test_images = images[split:]

+test_filenames = filenames[split:]

+train_images = images[:split]

+train_filenames = filenames[:split]

+

+train_data = gluon.data.ArrayDataset(nd.concatenate(train_images))

+train_dataloader = gluon.data.DataLoader(train_data, batch_size=batch_size, shuffle=True, last_batch='rollover', num_workers=multiprocessing.cpu_count()-1)

+```

+

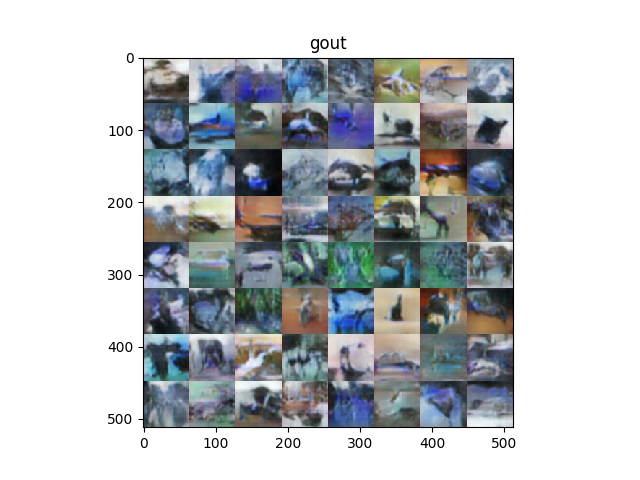

+## Generator

+Define the Generator model. The architecture is taken from the DCGAN implementation in the [Straight Dope Book](https://gluon.mxnet.io/chapter14_generative-adversarial-networks/dcgan.html). The Generator consists of 4 layers where each layer involves a strided convolution, batch normalization, and rectified nonlinearity. It takes as input random noise and the latent code and produces a `(64,64,3)` output image.

+

+

+```python

+class Generator(gluon.HybridBlock):

+ def __init__(self, **kwargs):

+ super(Generator, self).__init__(**kwargs)

+ with self.name_scope():

+ self.prev = nn.HybridSequential()

+ self.prev.add(nn.Dense(1024, use_bias=False), nn.BatchNorm(), nn.Activation(activation='relu'))

+ self.G = nn.HybridSequential()

+

+ self.G.add(nn.Conv2DTranspose(64 * 8, 4, 1, 0, use_bias=False))

+ self.G.add(nn.BatchNorm())

+ self.G.add(nn.Activation('relu'))

+ self.G.add(nn.Conv2DTranspose(64 * 4, 4, 2, 1, use_bias=False))

+ self.G.add(nn.BatchNorm())

+ self.G.add(nn.Activation('relu'))

+ self.G.add(nn.Conv2DTranspose(64 * 2, 4, 2, 1, use_bias=False))

+ self.G.add(nn.BatchNorm())

+ self.G.add(nn.Activation('relu'))

+ self.G.add(nn.Conv2DTranspose(64, 4, 2, 1, use_bias=False))

+ self.G.add(nn.BatchNorm())

+ self.G.add(nn.Activation('relu'))

+ self.G.add(nn.Conv2DTranspose(3, 4, 2, 1, use_bias=False))

+ self.G.add(nn.Activation('tanh'))

+

+ def hybrid_forward(self, F, x):

+ x = self.prev(x)

+ x = F.reshape(x, (0, -1, 1, 1))

+ return self.G(x)

+```

+

+## Discriminator

+Define the Discriminator and Q model. The Q model shares many layers with the Discriminator. Its task is to estimate the code `c` for a given fake image. It is used to maximize the lower bound to the mutual information.

+

+

+```python

+class Discriminator(gluon.HybridBlock):

+ def __init__(self, **kwargs):

+ super(Discriminator, self).__init__(**kwargs)

+ with self.name_scope():

+ self.D = nn.HybridSequential()

+ self.D.add(nn.Conv2D(64, 4, 2, 1, use_bias=False))

+ self.D.add(nn.LeakyReLU(0.2))

+ self.D.add(nn.Conv2D(64 * 2, 4, 2, 1, use_bias=False))

+ self.D.add(nn.BatchNorm())

+ self.D.add(nn.LeakyReLU(0.2))

+ self.D.add(nn.Conv2D(64 * 4, 4, 2, 1, use_bias=False))

+ self.D.add(nn.BatchNorm())

+ self.D.add(nn.LeakyReLU(0.2))

+ self.D.add(nn.Conv2D(64 * 8, 4, 2, 1, use_bias=False))

+ self.D.add(nn.BatchNorm())

+ self.D.add(nn.LeakyReLU(0.2))

+

+ self.D.add(nn.Dense(1024, use_bias=False), nn.BatchNorm(), nn.Activation(activation='relu'))

+

+ self.prob = nn.Dense(1)

+ self.feat = nn.HybridSequential()

+ self.feat.add(nn.Dense(128, use_bias=False), nn.BatchNorm(), nn.Activation(activation='relu'))

+ self.category_prob = nn.Dense(n_categories)

+ self.continuous_mean = nn.Dense(n_continuous)

+ self.Q = nn.HybridSequential()

+ self.Q.add(self.feat, self.category_prob, self.continuous_mean)

+

+ def hybrid_forward(self, F, x):

+ x = self.D(x)

+ prob = self.prob(x)

+ feat = self.feat(x)

+ category_prob = self.category_prob(feat)

+ continuous_mean = self.continuous_mean(feat)

+

+ return prob, category_prob, continuous_mean

+```

+

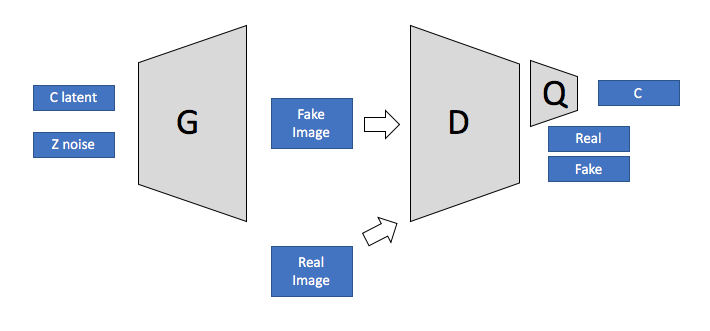

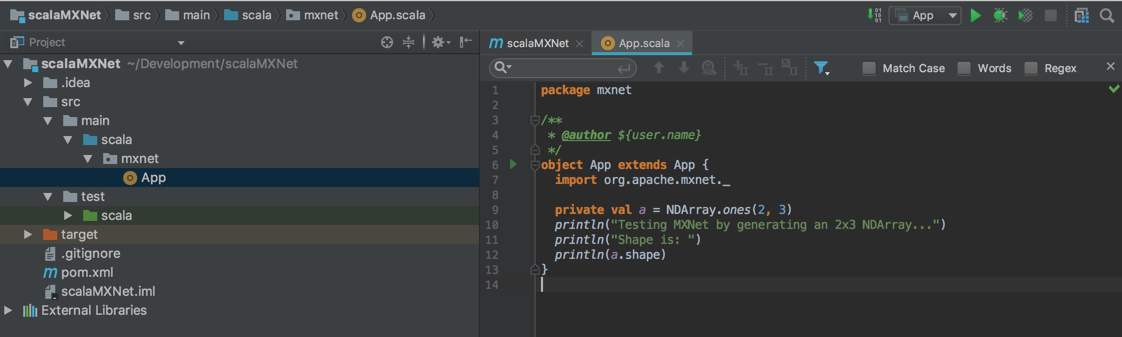

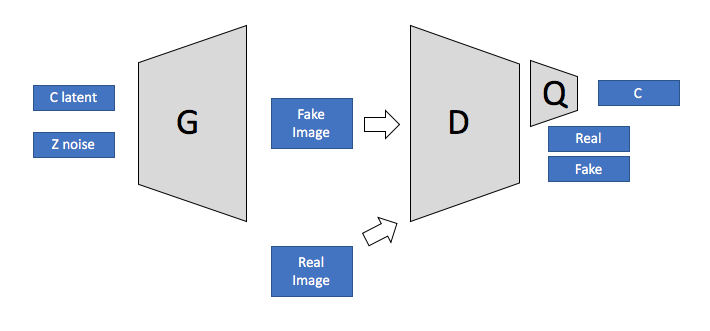

+The InfoGAN has the following layout.

+ +

+Discriminator and Generator are the same as in the DCGAN example. On top of the Discriminator is the Q model, which estimates the code `c` for given fake images. The Generator's input is random noise and the latent code `c`.

+

+## Training Loop

+Initialize the Generator and Discriminator and define the corresponding trainers.

+

+

+```python

+generator = Generator()

+generator.hybridize()

+generator.initialize(mx.init.Normal(0.002), ctx=ctx)

+

+discriminator = Discriminator()

+discriminator.hybridize()

+discriminator.initialize(mx.init.Normal(0.002), ctx=ctx)

+

+lr = 0.0001

+beta = 0.5

+

+g_trainer = gluon.Trainer(generator.collect_params(), 'adam', {'learning_rate': lr, 'beta1': beta})

+d_trainer = gluon.Trainer(discriminator.collect_params(), 'adam', {'learning_rate': lr, 'beta1': beta})

+q_trainer = gluon.Trainer(discriminator.Q.collect_params(), 'adam', {'learning_rate': lr, 'beta1': beta})

+```

+

+Create vectors with real (=1) and fake labels (=0).

+

+

+```python

+real_label = nd.ones((batch_size,), ctx=ctx)

+fake_label = nd.zeros((batch_size,),ctx=ctx)

+```

+

+Load a pretrained model if saved parameter files exist.

+

+

+```python

+if os.path.isfile('infogan_d_latest.params') and os.path.isfile('infogan_g_latest.params'):

+ discriminator.load_parameters('infogan_d_latest.params', ctx=ctx, allow_missing=True, ignore_extra=True)

+ generator.load_parameters('infogan_g_latest.params', ctx=ctx, allow_missing=True, ignore_extra=True)

+```

+There are 2 differences between InfoGAN and DCGAN: the extra latent code and the Q network to estimate the code.

+The latent code is part of the Generator input and it contains multiple variables (continuous, categorical) that can represent different distributions. In order to make sure that the Generator uses the latent code, mutual information is introduced into the GAN loss term. Mutual information measures how much X is known given Y or vice versa. It is defined as:

+

+

+

+The InfoGAN loss is:

+

+

+

+where `V(D,G)` is the GAN loss and the mutual information `I(c, G(z, c))` goes in as regularization. The goal is to reach high mutual information, in order to learn meaningful codes for the data.
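For reference, the standard forms of these two quantities, as given in the InfoGAN paper linked at the top of this tutorial, can be written as follows. The weight $\lambda$ is the paper's regularization coefficient and $Q$ is the auxiliary distribution estimated by the Q model. Neither symbol is named elsewhere in this text, and the training loop below simply adds the code losses with unit weight.

$$ I(X; Y) = H(X) - H(X \mid Y) = H(Y) - H(Y \mid X) $$

$$ \min_G \max_D V_I(D, G) = V(D, G) - \lambda I(c; G(z, c)) $$

Because the mutual information term cannot be computed directly, the paper maximizes a variational lower bound instead, which is what the Q model's losses approximate:

$$ L_I(G, Q) = \mathbb{E}_{c \sim P(c),\, x \sim G(z, c)}[\log Q(c \mid x)] + H(c) \le I(c; G(z, c)) $$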

+

+

+Define the loss functions: `SoftmaxCrossEntropyLoss` for the categorical code, `L2Loss` for the continuous code and `SigmoidBinaryCrossEntropyLoss` for the normal GAN loss.

+

+

+```python

+loss1 = gluon.loss.SigmoidBinaryCrossEntropyLoss()

+loss2 = gluon.loss.L2Loss()

+loss3 = gluon.loss.SoftmaxCrossEntropyLoss()

+```

+

+This function samples `c`, `z`, and concatenates them to create the generator input.

+

+

+```python

+def create_generator_input():

+

+ #create random noise

+ z = nd.random_normal(0, 1, shape=(batch_size, z_dim), ctx=ctx)

+ label = nd.array(np.random.randint(n_categories, size=batch_size)).as_in_context(ctx)

+ c1 = nd.one_hot(label, depth=n_categories).as_in_context(ctx)

+ c2 = nd.random.uniform(-1, 1, shape=(batch_size, n_continuous)).as_in_context(ctx)

+

+ # concatenate random noise with c which will be the input of the generator

+ return nd.concat(z, c1, c2, dim=1), label, c2

+```
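To make the layout of the generator input concrete, the sketch below builds a single input row with the Python standard library only. It is an illustration under assumptions, not the tutorial's code path: `sample_generator_row` is a hypothetical helper, and the constants repeat the values of `z_dim`, `n_categories` and `n_continuous` set earlier.

```python
import random

Z_DIM, N_CATEGORIES, N_CONTINUOUS = 100, 10, 2


def sample_generator_row(rng):
    """Build one generator input row: [noise z | one-hot c1 | continuous c2]."""
    z = [rng.gauss(0.0, 1.0) for _ in range(Z_DIM)]
    label = rng.randrange(N_CATEGORIES)
    c1 = [1.0 if i == label else 0.0 for i in range(N_CATEGORIES)]
    c2 = [rng.uniform(-1.0, 1.0) for _ in range(N_CONTINUOUS)]
    return z + c1 + c2, label, c2


row, label, c2 = sample_generator_row(random.Random(0))
print(len(row))  # 112 = 100 noise + 10 categorical + 2 continuous
print(row[Z_DIM:Z_DIM + N_CATEGORIES].index(1.0) == label)  # True
```

The returned `label` and `c2` are kept alongside the row because they are the targets that the Q model's outputs are compared against in the generator update.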

+

+Define the training loop.

+1. The discriminator receives `real_data` and `loss1` measures how many real images have been identified as real

+2. The discriminator receives `fake_image` from the Generator and `loss1` measures how many fake images have been identified as fake

+3. Update the Discriminator. Currently, it is updated every second iteration to keep the Discriminator from becoming too strong. You may want to change that.

+4. The updated discriminator receives `fake_image` and `loss1` measures how many fake images have been identified as real, `loss2` measures the difference between the sampled continuous latent code `c` and the output of the Q model and `loss3` measures the difference between the sampled categorical latent code `c` and the output of the Q model.

+5. Update the Generator and Q.

+

+

+```python

+with SummaryWriter(logdir='./logs/') as sw:

+

+ epochs = 1

+ counter = 0

+ for epoch in range(epochs):

+ print("Epoch", epoch)

+ starttime = time.time()

+

+ d_error_epoch = nd.zeros((1,), ctx=ctx)

+ g_error_epoch = nd.zeros((1,), ctx=ctx)

+

+ for idx, data in enumerate(train_dataloader):

+

+ #get real data and generator input

+ real_data = data.as_in_context(ctx)

+ g_input, label, c2 = create_generator_input()

+

+

+ #Update discriminator: Input real data and fake data

+ with autograd.record():

+ output_real,_,_ = discriminator(real_data)

+ d_error_real = loss1(output_real, real_label)

+

+ # create fake image and input it to discriminator

+ fake_image = generator(g_input)

+ output_fake,_,_ = discriminator(fake_image.detach())

+ d_error_fake = loss1(output_fake, fake_label)

+

+ # total discriminator error

+ d_error = d_error_real + d_error_fake

+

+ d_error_epoch += d_error.mean()

+

+ #Update D every second iteration

+ if (counter+1) % 2 == 0:

+ d_error.backward()

+ d_trainer.step(batch_size)

+

+ #Update generator: Input random noise and latent code vector

+ with autograd.record():

+ fake_image = generator(g_input)

+ output_fake, category_prob, continuous_mean = discriminator(fake_image)

+ g_error = loss1(output_fake, real_label) + loss3(category_prob, label) + loss2(c2, continuous_mean)

+

+ g_error.backward()

+ g_error_epoch += g_error.mean()

+

+ g_trainer.step(batch_size)

+ q_trainer.step(batch_size)

+ # advance the iteration counter used for the D update schedule and for logging

+ counter += 1

+

+ # logging

+ if idx % 10 == 0:

+

+ logging.info('speed: {} samples/s'.format(batch_size / (time.time() - starttime)))

+ logging.info('discriminator loss = %f, generator loss = %f at iter %d epoch %d'

+ %(d_error_epoch.asscalar()/(idx+1), g_error_epoch.asscalar()/(idx+1), idx, epoch))

+

+ g_input,_,_ = create_generator_input()

+

+ # create some fake image for logging in MXBoard

+ fake_image = generator(g_input)

+

+ sw.add_scalar(tag='Loss_D', value={'test':d_error_epoch.asscalar()/(idx+1)}, global_step=counter)

+ sw.add_scalar(tag='Loss_G', value={'test':g_error_epoch.asscalar()/(idx+1)}, global_step=counter)

+ sw.add_image(tag='data_image', image=((fake_image[0]+ 1.0) * 127.5).astype(np.uint8) , global_step=counter)

+ sw.flush()

+

+ discriminator.save_parameters("infogan_d_latest.params")

+ generator.save_parameters("infogan_g_latest.params")

+```

+

+## Image similarity

+Once the InfoGAN is trained, we can use the Discriminator to do an image similarity search. The idea is that the network learned meaningful features from the images based on the mutual information e.g. pose of people in an image.

+

+Load the trained discriminator and retrieve one of its last layers.

+

+

+```python

+discriminator = Discriminator()

+discriminator.load_parameters("infogan_d_latest.params", ctx=ctx, ignore_extra=True)

+

+discriminator = discriminator.D[:11]

+print (discriminator)

+

+discriminator.hybridize()

+```

+

+Nearest neighbor function, which takes a matrix of features and an input feature vector. It returns the `k` closest features (3 by default).

+

+

+```python

+def get_knn(features, input_vector, k=3):

+ dist = (nd.square(features - input_vector).sum(axis=1))/features.shape[0]

+ indices = dist.asnumpy().argsort()[:k]

+ return [(index, dist[index].asscalar()) for index in indices]

+```
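The same ranking can be reproduced on plain Python lists, which makes the arithmetic easy to check by hand. `get_knn_reference` is a hypothetical name for this sketch. Like `get_knn`, it divides each squared distance by the number of rows in `features`; that constant factor does not change the ranking, but it does scale the distances that are compared against a threshold later on.

```python
def get_knn_reference(features, query, k=3):
    """Return (row index, scaled squared distance) for the k rows closest to `query`."""
    n_rows = len(features)
    dists = [
        sum((a - b) ** 2 for a, b in zip(row, query)) / n_rows
        for row in features
    ]
    order = sorted(range(n_rows), key=dists.__getitem__)[:k]
    return [(i, dists[i]) for i in order]


features = [[0.0, 0.0], [1.0, 1.0], [3.0, 4.0], [0.5, 0.0]]
print(get_knn_reference(features, [0.0, 0.0], k=2))  # [(0, 0.0), (3, 0.0625)]
```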

+

+A helper function to visualize image data.

+

+

+```python

+def visualize(img_array):

+ plt.imshow(((img_array.asnumpy().transpose(1, 2, 0) + 1.0) * 127.5).astype(np.uint8))

+ plt.axis('off')

+```

+

+Take some images from the test data, obtain their feature vectors from `discriminator.D[:11]` and plot images of the corresponding closest vectors in the feature space.

+

+

+```python

+feature_size = 8192

+

+features = nd.zeros((len(test_images), feature_size), ctx=ctx)

+

+for idx, image in enumerate(test_images):

+

+ feature = discriminator(nd.array(image, ctx=ctx))

+ feature = feature.reshape(feature_size,)

+ features[idx,:] = feature.copyto(ctx)

+

+

+for image in test_images[:100]:

+

+ feature = discriminator(mx.nd.array(image, ctx=ctx))

+ feature = feature.reshape((feature_size,))

+ image = image.reshape((3,64,64))

+

+

+ indices = get_knn(features, feature, k=10)

+ fig = plt.figure(figsize=(15,12))

+ plt.subplot(1,10,1)

+

+ visualize(image)

+ for i in range(2,9):

+ if indices[i-1][1] < 1.5:

+ plt.subplot(1,10,i)

+ sim = test_images[indices[i-1][0]].reshape(3,64,64)

+ visualize(sim)

+ plt.show()

+ plt.clf()

+```

+

+

+## How the Generator learns

+We trained the Generator for a couple of epochs and stored a couple of fake images per epoch. Check the video.

+

+

+

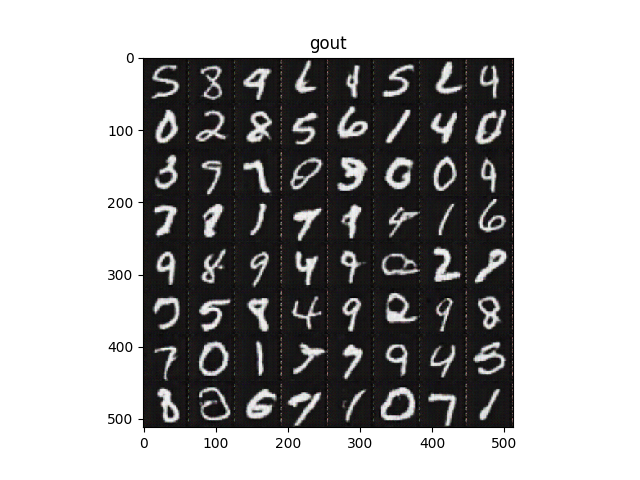

+The following code computes the t-SNE embedding of the feature matrix and stores the result in a JSON file. This file can be loaded with [TSNEViewer](https://ml4a.github.io/guides/ImageTSNEViewer/).

+

+

+```python

+import json

+

+from sklearn.manifold import TSNE

+from scipy.spatial import distance

+

+tsne = TSNE(n_components=2, learning_rate=150, perplexity=30, verbose=2).fit_transform(features.asnumpy())

+

+# save data to json

+data = []

+counter = 0

+for i,f in enumerate(test_filenames):

+

+ point = [float((tsne[i,k] - np.min(tsne[:,k]))/(np.max(tsne[:,k]) - np.min(tsne[:,k]))) for k in range(2) ]

+ data.append({"path": os.path.abspath(os.path.join(os.getcwd(),f)), "point": point})

+

+with open("imagetsne.json", 'w') as outfile:

+ json.dump(data, outfile)

+```
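The `point` expression above rescales each t-SNE coordinate to `[0, 1]` with a per-column min-max normalization. A minimal standalone sketch of that step is shown below. `min_max_columns` is a hypothetical helper, and it assumes every column contains at least two distinct values, since a constant column would lead to a division by zero.

```python
def min_max_columns(points):
    """Rescale every column of `points` to the range [0, 1]."""
    columns = list(zip(*points))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [(v - lo) / (hi - lo) for v, lo, hi in zip(point, lows, highs)]
        for point in points
    ]


print(min_max_columns([(-10.0, 5.0), (0.0, 15.0), (30.0, 10.0)]))
# [[0.0, 0.0], [0.25, 1.0], [1.0, 0.5]]
```

Computing the column minima and maxima once, as here, also avoids recomputing `np.min` and `np.max` for every file in the loop.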

+

+Load the file with TSNEViewer. You can now inspect whether similar-looking images are grouped nearby or not.

+

+

+

+

diff --git a/docs/tutorials/gluon/learning_rate_finder.md b/docs/tutorials/gluon/learning_rate_finder.md

index 661a017099e6..b571a53f674c 100644

--- a/docs/tutorials/gluon/learning_rate_finder.md

+++ b/docs/tutorials/gluon/learning_rate_finder.md

@@ -80,7 +80,6 @@ We also adjust our `DataLoader` so that it continuously provides batches of data

```python

-from multiprocessing import cpu_count

from mxnet.gluon.data.vision import transforms

transform = transforms.Compose([

@@ -109,7 +108,7 @@ class ContinuousBatchSampler():

sampler = mx.gluon.data.RandomSampler(len(dataset))

batch_sampler = ContinuousBatchSampler(sampler, batch_size=128)

-data_loader = mx.gluon.data.DataLoader(dataset, batch_sampler=batch_sampler, num_workers=cpu_count())

+data_loader = mx.gluon.data.DataLoader(dataset, batch_sampler=batch_sampler)

```

## Implementation

@@ -143,7 +142,7 @@ class LRFinder():

self.learner.trainer._init_kvstore()

# Store params and optimizer state for restore after lr_finder procedure

# Useful for applying the method partway through training, not just for initialization of lr.

- self.learner.net.save_params("lr_finder.params")

+ self.learner.net.save_parameters("lr_finder.params")

self.learner.trainer.save_states("lr_finder.state")

lr = lr_start

self.results = [] # List of (lr, loss) tuples

@@ -156,7 +155,7 @@ class LRFinder():

break

lr = lr * lr_multiplier

# Restore params (as finder changed them)

- self.learner.net.load_params("lr_finder.params", ctx=self.learner.ctx)

+ self.learner.net.load_parameters("lr_finder.params", ctx=self.learner.ctx)

self.learner.trainer.load_states("lr_finder.state")

return self.results

@@ -231,10 +230,10 @@ As discussed before, we should select a learning rate where the loss is falling

```python

-learner.net.save_params("net.params")

+learner.net.save_parameters("net.params")

lr = 0.05

-for iter_idx in range(500):

+for iter_idx in range(300):

learner.iteration(lr=lr)

if ((iter_idx % 100) == 0):

print("Iteration: {}, Loss: {:.5g}".format(iter_idx, learner.iteration_loss))

@@ -247,9 +246,6 @@ Iteration: 100, Loss: 1.6653

Iteration: 200, Loss: 1.4891

-Iteration: 300, Loss: 1.0846

-

-Iteration: 400, Loss: 1.0633

Final Loss: 1.1812

@@ -262,10 +258,10 @@ And now we have a baseline, let's see what happens when we train with a learning

```python

net = mx.gluon.model_zoo.vision.resnet18_v2(classes=10)

learner = Learner(net=net, data_loader=data_loader, ctx=ctx)

-learner.net.load_params("net.params", ctx=ctx)

+learner.net.load_parameters("net.params", ctx=ctx)

lr = 0.5

-for iter_idx in range(500):

+for iter_idx in range(300):

learner.iteration(lr=lr)

if ((iter_idx % 100) == 0):

print("Iteration: {}, Loss: {:.5g}".format(iter_idx, learner.iteration_loss))

@@ -278,9 +274,6 @@ Iteration: 100, Loss: 1.9666

Iteration: 200, Loss: 1.6919

-Iteration: 300, Loss: 1.3643

-

-Iteration: 400, Loss: 1.4743

Final Loss: 1.366

@@ -293,10 +286,10 @@ And lastly, we see how the model trains with a more conservative learning rate o

```python

net = mx.gluon.model_zoo.vision.resnet18_v2(classes=10)

learner = Learner(net=net, data_loader=data_loader, ctx=ctx)

-learner.net.load_params("net.params", ctx=ctx)

+learner.net.load_parameters("net.params", ctx=ctx)

lr = 0.005

-for iter_idx in range(500):

+for iter_idx in range(300):

learner.iteration(lr=lr)

if ((iter_idx % 100) == 0):

print("Iteration: {}, Loss: {:.5g}".format(iter_idx, learner.iteration_loss))

@@ -309,9 +302,6 @@ Iteration: 100, Loss: 1.8621

Iteration: 200, Loss: 1.6316

-Iteration: 300, Loss: 1.6295

-

-Iteration: 400, Loss: 1.4019

Final Loss: 1.2919

diff --git a/docs/tutorials/gluon/learning_rate_schedules.md b/docs/tutorials/gluon/learning_rate_schedules.md

index dc340b799b79..88b109e7f33e 100644

--- a/docs/tutorials/gluon/learning_rate_schedules.md

+++ b/docs/tutorials/gluon/learning_rate_schedules.md

@@ -12,7 +12,6 @@ In this tutorial, we visualize the schedules defined in `mx.lr_scheduler`, show

```python

-%matplotlib inline

from __future__ import print_function

import math

import matplotlib.pyplot as plt

@@ -20,6 +19,7 @@ import mxnet as mx

from mxnet.gluon import nn

from mxnet.gluon.data.vision import transforms

import numpy as np

+%matplotlib inline

```

```python

@@ -134,7 +134,7 @@ batch_size = 64

# Load the training data

train_dataset = mx.gluon.data.vision.MNIST(train=True).transform_first(transforms.ToTensor())

-train_dataloader = mx.gluon.data.DataLoader(train_dataset, batch_size, shuffle=True)

+train_dataloader = mx.gluon.data.DataLoader(train_dataset, batch_size, shuffle=True, num_workers=5)

# Build a simple convolutional network

def build_cnn():

diff --git a/docs/tutorials/gluon/save_load_params.md b/docs/tutorials/gluon/save_load_params.md

index d8eac88d8f59..ebc8103e7b45 100644

--- a/docs/tutorials/gluon/save_load_params.md

+++ b/docs/tutorials/gluon/save_load_params.md

@@ -243,7 +243,7 @@ One of the main reasons to serialize model architecture into a JSON file is to l

Serialized Hybrid networks (saved as .JSON and .params file) can be loaded and used inside Python frontend using `gluon.nn.SymbolBlock`. To demonstrate that, let's load the network we serialized above.

```python

-deserialized_net = gluon.nn.SymbolBlock.imports("lenet-symbol.json", ['data'], "lenet-0001.params")

+deserialized_net = gluon.nn.SymbolBlock.imports("lenet-symbol.json", ['data'], "lenet-0001.params", ctx=ctx)

```

`deserialized_net` now contains the network we deserialized from files. Let's test the deserialized network to make sure it works.

diff --git a/docs/tutorials/index.md b/docs/tutorials/index.md

index df1d892247b7..23cf67529c19 100644

--- a/docs/tutorials/index.md

+++ b/docs/tutorials/index.md

@@ -3,6 +3,7 @@

```eval_rst

.. toctree::

:hidden:

+

basic/index.md

c++/index.md

control_flow/index.md

@@ -57,6 +58,7 @@ Select API:

* [Logistic Regression](/tutorials/gluon/logistic_regression_explained.html)

* [Word-level text generation with RNN, LSTM and GRU](http://gluon.mxnet.io/chapter05_recurrent-neural-networks/rnns-gluon.html)

+

+

diff --git a/docs/tutorials/gluon/learning_rate_finder.md b/docs/tutorials/gluon/learning_rate_finder.md

index 661a017099e6..b571a53f674c 100644

--- a/docs/tutorials/gluon/learning_rate_finder.md

+++ b/docs/tutorials/gluon/learning_rate_finder.md

@@ -80,7 +80,6 @@ We also adjust our `DataLoader` so that it continuously provides batches of data

```python

-from multiprocessing import cpu_count

from mxnet.gluon.data.vision import transforms

transform = transforms.Compose([

@@ -109,7 +108,7 @@ class ContinuousBatchSampler():

sampler = mx.gluon.data.RandomSampler(len(dataset))

batch_sampler = ContinuousBatchSampler(sampler, batch_size=128)

-data_loader = mx.gluon.data.DataLoader(dataset, batch_sampler=batch_sampler, num_workers=cpu_count())

+data_loader = mx.gluon.data.DataLoader(dataset, batch_sampler=batch_sampler)

```

## Implementation

@@ -143,7 +142,7 @@ class LRFinder():

self.learner.trainer._init_kvstore()

# Store params and optimizer state for restore after lr_finder procedure

# Useful for applying the method partway through training, not just for initialization of lr.

- self.learner.net.save_params("lr_finder.params")

+ self.learner.net.save_parameters("lr_finder.params")

self.learner.trainer.save_states("lr_finder.state")

lr = lr_start

self.results = [] # List of (lr, loss) tuples

@@ -156,7 +155,7 @@ class LRFinder():

break

lr = lr * lr_multiplier

# Restore params (as finder changed them)

- self.learner.net.load_params("lr_finder.params", ctx=self.learner.ctx)

+ self.learner.net.load_parameters("lr_finder.params", ctx=self.learner.ctx)

self.learner.trainer.load_states("lr_finder.state")

return self.results

@@ -231,10 +230,10 @@ As discussed before, we should select a learning rate where the loss is falling

```python

-learner.net.save_params("net.params")

+learner.net.save_parameters("net.params")

lr = 0.05

-for iter_idx in range(500):

+for iter_idx in range(300):

learner.iteration(lr=lr)

if ((iter_idx % 100) == 0):

print("Iteration: {}, Loss: {:.5g}".format(iter_idx, learner.iteration_loss))

@@ -247,9 +246,6 @@ Iteration: 100, Loss: 1.6653

Iteration: 200, Loss: 1.4891

-Iteration: 300, Loss: 1.0846

-

-Iteration: 400, Loss: 1.0633

Final Loss: 1.1812

@@ -262,10 +258,10 @@ And now we have a baseline, let's see what happens when we train with a learning

```python

net = mx.gluon.model_zoo.vision.resnet18_v2(classes=10)

learner = Learner(net=net, data_loader=data_loader, ctx=ctx)

-learner.net.load_params("net.params", ctx=ctx)

+learner.net.load_parameters("net.params", ctx=ctx)

lr = 0.5

-for iter_idx in range(500):

+for iter_idx in range(300):

learner.iteration(lr=lr)

if ((iter_idx % 100) == 0):

print("Iteration: {}, Loss: {:.5g}".format(iter_idx, learner.iteration_loss))

@@ -278,9 +274,6 @@ Iteration: 100, Loss: 1.9666

Iteration: 200, Loss: 1.6919

-Iteration: 300, Loss: 1.3643

-

-Iteration: 400, Loss: 1.4743

Final Loss: 1.366