-

Notifications

You must be signed in to change notification settings - Fork 28.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[SPARK-23799] FilterEstimation.evaluateInSet produces devision by zero in a case of empty table with analyzed statistics #20913

[SPARK-23799] FilterEstimation.evaluateInSet produces devision by zero in a case of empty table with analyzed statistics #20913

Conversation

…ision by zero can occur. In order to fix this, check was added.

|

ok to test |

|

@mshtelma can you add a unit test? |

|

Test build #88643 has finished for PR 20913 at commit

|

|

Good catch! +1 for adding tests in |

|

retest this please |

|

Test build #88771 has finished for PR 20913 at commit

|

… IN conditions, if the source table is empty, division by zero can occur. In order to fix this, check was added.

|

I have added the unit case for this particular situation |

|

Test build #88850 has finished for PR 20913 at commit

|

|

retest this please |

| @@ -427,7 +427,11 @@ case class FilterEstimation(plan: Filter) extends Logging { | |||

|

|

|||

| // return the filter selectivity. Without advanced statistics such as histograms, | |||

| // we have to assume uniform distribution. | |||

| Some(math.min(newNdv.toDouble / ndv.toDouble, 1.0)) | |||

| if (ndv.toDouble != 0) { | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What's the concrete example query when ndv.toDouble == 0?

Also, is this only an place where we need this check?

For example, we don't here?:

Line 166 in 5cfd5fa

| 1.0 / bin.ndv.toDouble |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have experienced this problem for the sub condition with IN clause, smth like "FLD in ("value")".

To my mind, this happens, if the table is empty. In this case ndv will be 0.

I think, it will make sense, to check it everywhere it is used in this way.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can you add a test for the empty table case?

I think we need to fix the other places if they have the same issue. cc: @wzhfy

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have developed the test that illustrates the problem. It is just a small scala app right now.

Right now it breaks after the last select. Adding REFRESH TABLE TBL does not fix the problem in this particular case.

Should I add it to some particular test suite ?

object SparkCBOStatisticsTest {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder

.master("local[*]")

.config("spark.sql.adaptive.enabled", "true")

.config("spark.sql.cbo.enabled", "true")

.config("spark.sql.cbo.joinReorder.enabled", "true")

.config("spark.sql.sources.bucketing.enabled", "true")

.getOrCreate()

import spark.implicits._

import org.apache.spark.sql.functions._

val df = spark.range(10000L).select('id,

'id * 2 as "fld1",

'id * 12 as "fld2",

lit("aaa") + 'id as "fld3",

lit("bbb") + 'id * 'id as "fld4")

df.write

.mode(SaveMode.Overwrite)

.bucketBy(10, "id","fld1", "fld2")

.sortBy("id","fld1", "fld2")

.saveAsTable("TBL")

spark.sql("ANALYZE TABLE TBL COMPUTE STATISTICS ")

spark.sql("ANALYZE TABLE TBL COMPUTE STATISTICS FOR COLUMNS ID, FLD1, FLD2, FLD3")

val df2 = spark.sql("select t1.fld1*t2.fld2 as fld1, t1.fld2, t1.fld3 from tbl t1 join tbl t2 on t1.id=t2.id where t1.fld1>100 and t1.fld3 in (-123.23,321.23) ")

df2.explain(true)

df2.show(100)

df2.createTempView("tbl2")

spark.sql("DESC FORMATTED TBL").show(false)

spark.sql("select * from tbl2 where fld3 in ('qqq', 'qwe') and fld1<100 ").show(100, false)

}

}

|

Test build #88877 has finished for PR 20913 at commit

|

|

retest this please |

|

Test build #88882 has finished for PR 20913 at commit

|

This change basically rewrites the security documentation so that it's up to date with new features, more correct, and more complete. Because security is such an important feature, I chose to move all the relevant configuration documentation to the security page, instead of having them peppered all over the place in the configuration page. This allows an almost one-stop shop for security configuration in Spark. The only exceptions are some YARN-specific minor features which I left in the YARN page. I also re-organized the page's topics, since they didn't make a lot of sense. You had kerberos features described inside paragraphs talking about UI access control, and other oddities. It should be easier now to find information about specific Spark security features. I also enabled TOCs for both the Security and YARN pages, since that makes it easier to see what is covered. I removed most of the comments from the SecurityManager javadoc since they just replicated information in the security doc, with different levels of out-of-dateness. Author: Marcelo Vanzin <[email protected]> Closes apache#20742 from vanzin/SPARK-23572.

…ionSummary ## What changes were proposed in this pull request? Adding r2adj in LinearRegressionSummary for Python API. ## How was this patch tested? Added unit tests to exercise the api calls for the summary classes in tests.py. Author: Kevin Yu <[email protected]> Closes apache#20842 from kevinyu98/spark-23162.

## What changes were proposed in this pull request? The UUID() expression is stateful and should implement the `Stateful` trait instead of the `Nondeterministic` trait. ## How was this patch tested? Added test. Author: Liang-Chi Hsieh <[email protected]> Closes apache#20912 from viirya/SPARK-23794.

## What changes were proposed in this pull request? This PR migrate micro batch rate source to V2 API and rewrite UTs to suite V2 test. ## How was this patch tested? UTs. Author: jerryshao <[email protected]> Closes apache#20688 from jerryshao/SPARK-23096.

… enabled ## What changes were proposed in this pull request? When using Arrow for createDataFrame or toPandas and an error is encountered with fallback disabled, this will raise the same type of error instead of a RuntimeError. This change also allows for the traceback of the error to be retained and prevents the accidental chaining of exceptions with Python 3. ## How was this patch tested? Updated existing tests to verify error type. Author: Bryan Cutler <[email protected]> Closes apache#20839 from BryanCutler/arrow-raise-same-error-SPARK-23699.

## What changes were proposed in this pull request? This PR proposes to add lineSep option for a configurable line separator in text datasource. It supports this option by using `LineRecordReader`'s functionality with passing it to the constructor. The approach is similar with apache#20727; however, one main difference is, it uses text datasource's `lineSep` option to parse line by line in JSON's schema inference. ## How was this patch tested? Manually tested and unit tests were added. Author: hyukjinkwon <[email protected]> Author: hyukjinkwon <[email protected]> Closes apache#20877 from HyukjinKwon/linesep-json.

This reverts commit c68ec4e.

## What changes were proposed in this pull request? Title add spark logo, use spark logo image. reference other big data system ui, so i think spark should add it. spark fix before:  spark fix after:  reference kafka ui:  reference storm ui:  reference yarn ui:  reference nifi ui:  reference flink ui:  ## How was this patch tested? manual tests Please review http://spark.apache.org/contributing.html before opening a pull request. Author: guoxiaolong <[email protected]> Closes apache#20818 from guoxiaolongzte/SPARK-23675.

… with dynamic allocation ## What changes were proposed in this pull request? ignore errors when you are waiting for a broadcast.unpersist. This is handling it the same way as doing rdd.unpersist in https://issues.apache.org/jira/browse/SPARK-22618 ## How was this patch tested? Patch was tested manually against a couple jobs that exhibit this behavior, with the change the application no longer dies due to this and just prints the warning. Please review http://spark.apache.org/contributing.html before opening a pull request. Author: Thomas Graves <[email protected]> Closes apache#20924 from tgravescs/SPARK-23806.

## What changes were proposed in this pull request? This PR proposes to expose `repartitionByRange`. ```R > df <- createDataFrame(iris) ... > getNumPartitions(repartitionByRange(df, 3, col = df$Species)) [1] 3 ``` ## How was this patch tested? Manually tested and the unit tests were added. The diff with `repartition` can be checked as below: ```R > df <- createDataFrame(mtcars) > take(repartition(df, 10, df$wt), 3) mpg cyl disp hp drat wt qsec vs am gear carb 1 14.3 8 360.0 245 3.21 3.570 15.84 0 0 3 4 2 10.4 8 460.0 215 3.00 5.424 17.82 0 0 3 4 3 32.4 4 78.7 66 4.08 2.200 19.47 1 1 4 1 > take(repartitionByRange(df, 10, df$wt), 3) mpg cyl disp hp drat wt qsec vs am gear carb 1 30.4 4 75.7 52 4.93 1.615 18.52 1 1 4 2 2 33.9 4 71.1 65 4.22 1.835 19.90 1 1 4 1 3 27.3 4 79.0 66 4.08 1.935 18.90 1 1 4 1 ``` Author: hyukjinkwon <[email protected]> Closes apache#20902 from HyukjinKwon/r-repartitionByRange.

…tion before setting state ## What changes were proposed in this pull request? Changed `LauncherBackend` `set` method so that it checks if the connection is open or not before writing to it (uses `isConnected`). ## How was this patch tested? None Author: Sahil Takiar <[email protected]> Closes apache#20893 from sahilTakiar/master.

…SQL CLI ## What changes were proposed in this pull request? In SparkSQLCLI, SessionState generates before SparkContext instantiating. When we use --proxy-user to impersonate, it's unable to initializing a metastore client to talk to the secured metastore for no kerberos ticket. This PR use real user ugi to obtain token for owner before talking to kerberized metastore. ## How was this patch tested? Manually verified with kerberized hive metasotre / hdfs. Author: Kent Yao <[email protected]> Closes apache#20784 from yaooqinn/SPARK-23639.

…ons. ## What changes were proposed in this pull request? Set default Spark session in the TestSparkSession and TestHiveSparkSession constructors. ## How was this patch tested? new unit tests Author: Jose Torres <[email protected]> Closes apache#20926 from jose-torres/test3.

… to starting with 'org.slf4j'

## What changes were proposed in this pull request?

isSharedClass returns if some classes can/should be shared or not. It checks if the classes names have some keywords or start with some names. Following the logic, it can occur unintended behaviors when a custom package has `slf4j` inside the package or class name. As I guess, the first intention seems to figure out the class containing `org.slf4j`. It would be better to change the comparison logic to `name.startsWith("org.slf4j")`

## How was this patch tested?

This patch should pass all of the current tests and keep all of the current behaviors. In my case, I'm using ProtobufDeserializer to get a table schema from hive tables. Thus some Protobuf packages and names have `slf4j` inside. Without this patch, it cannot be resolved because of ClassCastException from different classloaders.

Author: Jongyoul Lee <[email protected]>

Closes apache#20860 from jongyoul/SPARK-23743.

…arquet ## What changes were proposed in this pull request? This PR supports for pushing down filters for DateType in parquet ## How was this patch tested? Added UT and tested in local. Author: yucai <[email protected]> Closes apache#20851 from yucai/SPARK-23727.

…partitioned into specific number of partitions ## What changes were proposed in this pull request? Currently, the requiredChildDistribution does not specify the partitions. This can cause the weird corner cases where the child's distribution is `SinglePartition` which satisfies the required distribution of `ClusterDistribution(no-num-partition-requirement)`, thus eliminating the shuffle needed to repartition input data into the required number of partitions (i.e. same as state stores). That can lead to "file not found" errors on the state store delta files as the micro-batch-with-no-shuffle will not run certain tasks and therefore not generate the expected state store delta files. This PR adds the required constraint on the number of partitions. ## How was this patch tested? Modified test harness to always check that ANY stateful operator should have a constraint on the number of partitions. As part of that, the existing opt-in checks on child output partitioning were removed, as they are redundant. Author: Tathagata Das <[email protected]> Closes apache#20941 from tdas/SPARK-23827.

## What changes were proposed in this pull request? Address apache#20449 (comment), If `resultIter` is already a `InterruptibleIterator`, don't double wrap it. ## How was this patch tested? Existing tests. Author: Xingbo Jiang <[email protected]> Closes apache#20920 from jiangxb1987/SPARK-23040.

…torizerModel ## What changes were proposed in this pull request? Adding test for default params for `CountVectorizerModel` constructed from vocabulary. This required that the param `maxDF` be added, which was done in SPARK-23615. ## How was this patch tested? Added an explicit test for CountVectorizerModel in DefaultValuesTests. Author: Bryan Cutler <[email protected]> Closes apache#20942 from BryanCutler/pyspark-CountVectorizerModel-default-param-test-SPARK-15009.

## What changes were proposed in this pull request? Kubernetes driver and executor pods should request `memory + memoryOverhead` as their resources instead of just `memory`, see https://issues.apache.org/jira/browse/SPARK-23825 ## How was this patch tested? Existing unit tests were adapted. Author: David Vogelbacher <[email protected]> Closes apache#20943 from dvogelbacher/spark-23825.

…utor cores ## What changes were proposed in this pull request? As mentioned in SPARK-23285, this PR introduces a new configuration property `spark.kubernetes.executor.cores` for specifying the physical CPU cores requested for each executor pod. This is to avoid changing the semantics of `spark.executor.cores` and `spark.task.cpus` and their role in task scheduling, task parallelism, dynamic resource allocation, etc. The new configuration property only determines the physical CPU cores available to an executor. An executor can still run multiple tasks simultaneously by using appropriate values for `spark.executor.cores` and `spark.task.cpus`. ## How was this patch tested? Unit tests. felixcheung srowen jiangxb1987 jerryshao mccheah foxish Author: Yinan Li <[email protected]> Author: Yinan Li <[email protected]> Closes apache#20553 from liyinan926/master.

## What changes were proposed in this pull request? This PR implemented the following cleanups related to `UnsafeWriter` class: - Remove code duplication between `UnsafeRowWriter` and `UnsafeArrayWriter` - Make `BufferHolder` class internal by delegating its accessor methods to `UnsafeWriter` - Replace `UnsafeRow.setTotalSize(...)` with `UnsafeRowWriter.setTotalSize()` ## How was this patch tested? Tested by existing UTs Author: Kazuaki Ishizaki <[email protected]> Closes apache#20850 from kiszk/SPARK-23713.

…Server test. It was possible that the disconnect() was called on the handle before the server had received the handshake messages, so no connection was yet attached to the handle. The fix waits until we're sure the handle has been mapped to a client connection. Author: Marcelo Vanzin <[email protected]> Closes apache#20950 from vanzin/SPARK-23834.

## What changes were proposed in this pull request? Introduce `handleInvalid` parameter in `VectorAssembler` that can take in `"keep", "skip", "error"` options. "error" throws an error on seeing a row containing a `null`, "skip" filters out all such rows, and "keep" adds relevant number of NaN. "keep" figures out an example to find out what this number of NaN s should be added and throws an error when no such number could be found. ## How was this patch tested? Unit tests are added to check the behavior of `assemble` on specific rows and the transformer is called on `DataFrame`s of different configurations to test different corner cases. Author: Yogesh Garg <yogesh(dot)garg()databricks(dot)com> Author: Bago Amirbekian <[email protected]> Author: Yogesh Garg <[email protected]> Closes apache#20829 from yogeshg/rformula_handleinvalid.

These tests can fail with a timeout if the remote repos are not responding, or slow. The tests don't need anything from those repos, so use an empty ivy config file to avoid setting up the defaults. The tests are passing reliably for me locally now, and failing more often than not today without this change since http://dl.bintray.com/spark-packages/maven doesn't seem to be loading from my machine. Author: Marcelo Vanzin <[email protected]> Closes apache#20916 from vanzin/SPARK-19964.

## What changes were proposed in this pull request? Easy fix in the markdown. ## How was this patch tested? jekyII build test manually. Please review http://spark.apache.org/contributing.html before opening a pull request. Author: lemonjing <[email protected]> Closes apache#20897 from Lemonjing/master.

## What changes were proposed in this pull request? Address apache#20924 (comment), show block manager id when remove RDD/Broadcast fails. ## How was this patch tested? N/A Author: Xingbo Jiang <[email protected]> Closes apache#20960 from jiangxb1987/bmid.

## What changes were proposed in this pull request? Migrate foreach sink to DataSourceV2. Since the previous attempt at this PR apache#20552, we've changed and strictly defined the lifecycle of writer components. This means we no longer need the complicated lifecycle shim from that PR; it just naturally works. ## How was this patch tested? existing tests Author: Jose Torres <[email protected]> Closes apache#20951 from jose-torres/foreach.

## What changes were proposed in this pull request? Add interpreted execution for `MapObjects` expression. ## How was this patch tested? Added unit test. Author: Liang-Chi Hsieh <[email protected]> Closes apache#20771 from viirya/SPARK-23587.

## What changes were proposed in this pull request? Currently, the active spark session is set inconsistently (e.g., in createDataFrame, prior to query execution). Many places in spark also incorrectly query active session when they should be calling activeSession.getOrElse(defaultSession) and so might get None even if a Spark session exists. The semantics here can be cleaned up if we also set the active session when the default session is set. Related: https://github.com/apache/spark/pull/20926/files ## How was this patch tested? Unit test, existing test. Note that if apache#20926 merges first we should also update the tests there. Author: Eric Liang <[email protected]> Closes apache#20927 from ericl/active-session-cleanup.

…esolved state ## What changes were proposed in this pull request? Add cast to nulls introduced by PropagateEmptyRelation so in cases they're part of coalesce they will not break its type checking rules ## How was this patch tested? Added unit test Author: Robert Kruszewski <[email protected]> Closes apache#20914 from robert3005/rk/propagate-empty-fix.

## What changes were proposed in this pull request? In TestHive, the base spark session does this in getOrCreate(), we emulate that behavior for tests. ## How was this patch tested? N/A Author: gatorsmile <[email protected]> Closes apache#20969 from gatorsmile/setDefault.

## What changes were proposed in this pull request?

This pr added a new optimizer rule `UpdateNullabilityInAttributeReferences ` to update the nullability that `Filter` changes when having `IsNotNull`. In the master, optimized plans do not respect the nullability when `Filter` has `IsNotNull`. This wrongly generates unnecessary code. For example:

```

scala> val df = Seq((Some(1), Some(2))).toDF("a", "b")

scala> val bIsNotNull = df.where($"b" =!= 2).select($"b")

scala> val targetQuery = bIsNotNull.distinct

scala> val targetQuery.queryExecution.optimizedPlan.output(0).nullable

res5: Boolean = true

scala> targetQuery.debugCodegen

Found 2 WholeStageCodegen subtrees.

== Subtree 1 / 2 ==

*HashAggregate(keys=[b#19], functions=[], output=[b#19])

+- Exchange hashpartitioning(b#19, 200)

+- *HashAggregate(keys=[b#19], functions=[], output=[b#19])

+- *Project [_2#16 AS b#19]

+- *Filter isnotnull(_2#16)

+- LocalTableScan [_1#15, _2#16]

Generated code:

...

/* 124 */ protected void processNext() throws java.io.IOException {

...

/* 132 */ // output the result

/* 133 */

/* 134 */ while (agg_mapIter.next()) {

/* 135 */ wholestagecodegen_numOutputRows.add(1);

/* 136 */ UnsafeRow agg_aggKey = (UnsafeRow) agg_mapIter.getKey();

/* 137 */ UnsafeRow agg_aggBuffer = (UnsafeRow) agg_mapIter.getValue();

/* 138 */

/* 139 */ boolean agg_isNull4 = agg_aggKey.isNullAt(0);

/* 140 */ int agg_value4 = agg_isNull4 ? -1 : (agg_aggKey.getInt(0));

/* 141 */ agg_rowWriter1.zeroOutNullBytes();

/* 142 */

// We don't need this NULL check because NULL is filtered out in `$"b" =!=2`

/* 143 */ if (agg_isNull4) {

/* 144 */ agg_rowWriter1.setNullAt(0);

/* 145 */ } else {

/* 146 */ agg_rowWriter1.write(0, agg_value4);

/* 147 */ }

/* 148 */ append(agg_result1);

/* 149 */

/* 150 */ if (shouldStop()) return;

/* 151 */ }

/* 152 */

/* 153 */ agg_mapIter.close();

/* 154 */ if (agg_sorter == null) {

/* 155 */ agg_hashMap.free();

/* 156 */ }

/* 157 */ }

/* 158 */

/* 159 */ }

```

In the line 143, we don't need this NULL check because NULL is filtered out in `$"b" =!=2`.

This pr could remove this NULL check;

```

scala> val targetQuery.queryExecution.optimizedPlan.output(0).nullable

res5: Boolean = false

scala> targetQuery.debugCodegen

...

Generated code:

...

/* 144 */ protected void processNext() throws java.io.IOException {

...

/* 152 */ // output the result

/* 153 */

/* 154 */ while (agg_mapIter.next()) {

/* 155 */ wholestagecodegen_numOutputRows.add(1);

/* 156 */ UnsafeRow agg_aggKey = (UnsafeRow) agg_mapIter.getKey();

/* 157 */ UnsafeRow agg_aggBuffer = (UnsafeRow) agg_mapIter.getValue();

/* 158 */

/* 159 */ int agg_value4 = agg_aggKey.getInt(0);

/* 160 */ agg_rowWriter1.write(0, agg_value4);

/* 161 */ append(agg_result1);

/* 162 */

/* 163 */ if (shouldStop()) return;

/* 164 */ }

/* 165 */

/* 166 */ agg_mapIter.close();

/* 167 */ if (agg_sorter == null) {

/* 168 */ agg_hashMap.free();

/* 169 */ }

/* 170 */ }

```

## How was this patch tested?

Added `UpdateNullabilityInAttributeReferencesSuite` for unit tests.

Author: Takeshi Yamamuro <[email protected]>

Closes apache#18576 from maropu/SPARK-21351.

## What changes were proposed in this pull request? This pr added interpreted execution for `Invoke`. ## How was this patch tested? Added tests in `ObjectExpressionsSuite`. Author: Kazuaki Ishizaki <[email protected]> Closes apache#20797 from kiszk/SPARK-28583.

….imagePullSecrets ## What changes were proposed in this pull request? Pass through the `imagePullSecrets` option to the k8s pod in order to allow user to access private image registries. See https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/ ## How was this patch tested? Unit tests + manual testing. Manual testing procedure: 1. Have private image registry. 2. Spark-submit application with no `spark.kubernetes.imagePullSecret` set. Do `kubectl describe pod ...`. See the error message: ``` Error syncing pod, skipping: failed to "StartContainer" for "spark-kubernetes-driver" with ErrImagePull: "rpc error: code = 2 desc = Error: Status 400 trying to pull repository ...: \"{\\n \\\"errors\\\" : [ {\\n \\\"status\\\" : 400,\\n \\\"message\\\" : \\\"Unsupported docker v1 repository request for '...'\\\"\\n } ]\\n}\"" ``` 3. Create secret `kubectl create secret docker-registry ...` 4. Spark-submit with `spark.kubernetes.imagePullSecret` set to the new secret. See that deployment was successful. Author: Andrew Korzhuev <[email protected]> Author: Andrew Korzhuev <[email protected]> Closes apache#20811 from andrusha/spark-23668-image-pull-secrets.

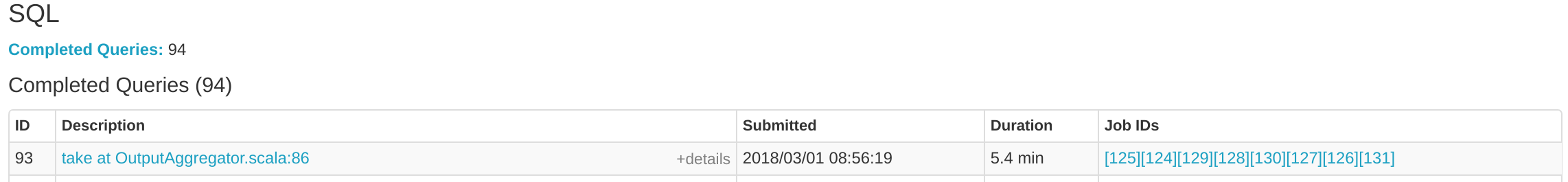

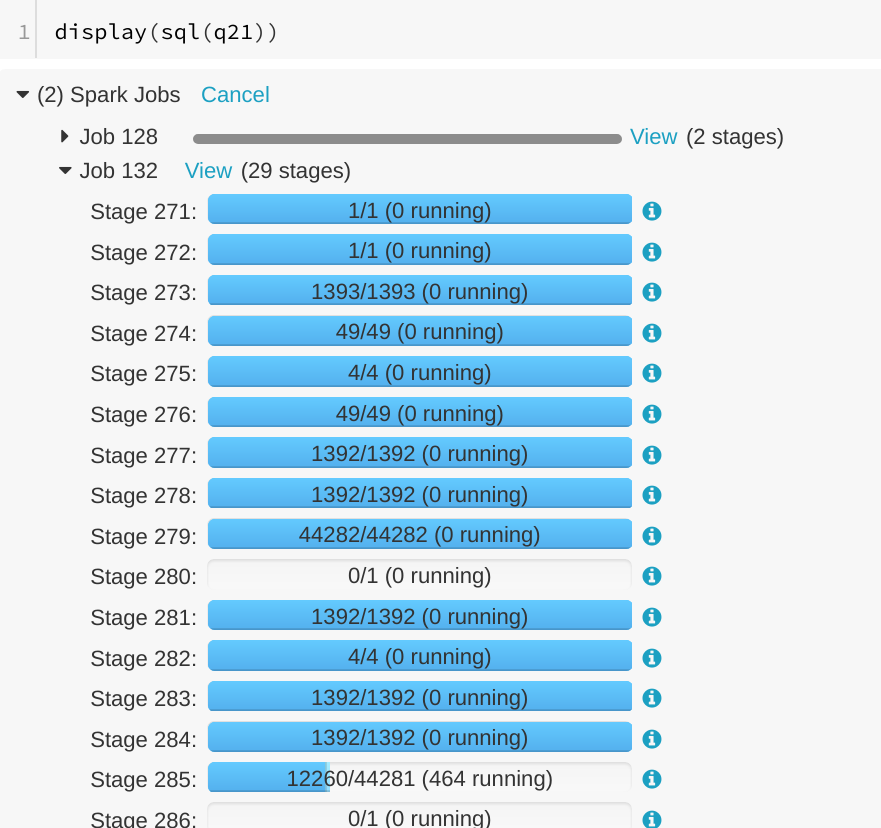

… SQL tab ## What changes were proposed in this pull request? A running SQL query would appear as completed in the Spark UI:  We can see the query in "Completed queries", while in in the job page we see it's still running Job 132.  After some time in the query still appears in "Completed queries" (while it's still running), but the "Duration" gets increased.  To reproduce, we can run a query with multiple jobs. E.g. Run TPCDS q6. The reason is that updates from executions are written into kvstore periodically, and the job start event may be missed. ## How was this patch tested? Manually run the job again and check the SQL Tab. The fix is pretty simple. Author: Gengliang Wang <[email protected]> Closes apache#20955 from gengliangwang/jobCompleted.

…tor is killed multiple times. ## What changes were proposed in this pull request? `YarnAllocator` uses `numExecutorsRunning` to track the number of running executor. `numExecutorsRunning` is used to check if there're executors missing and need to allocate more. In current code, `numExecutorsRunning` can be negative when driver asks to kill a same idle executor multiple times. ## How was this patch tested? UT added Author: jinxing <[email protected]> Closes apache#20781 from jinxing64/SPARK-23637.

|

Test build #88934 has finished for PR 20913 at commit

|

|

@mshtelma Would you please update the branch? seems there's something wrong with the commits. |

|

@mshtelma You can close this PR and open a new one instead. |

# Conflicts: # docs/security.md # python/pyspark/ml/tests.py # sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/codegen/GenerateUnsafeProjection.scala # sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ObjectExpressionsSuite.scala # sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/json/JsonSuite.scala

|

@jiangxb1987 @wzhfy Done. I have merged the latest changes from master. |

|

@mshtelma You shouldn't merge the changes and you need to rebase your pr. So, I think it's easy to close this pr and open a new pr as @jiangxb1987 suggested above. |

|

Test build #89099 has finished for PR 20913 at commit

|

During evaluation of IN conditions, if the source table is empty, division by zero can occur. In order to fix this, check was added.