Node becomes unresponsive causing all peers to be dropped #3143

Comments

I have had this issue as well.

These all appear to be remove requests for unique proposals. In general, removing this many proposals from your local store shouldn't degrade performance, but it might trigger some complex UI update logic for each removal. I have to look into this in more detail.

It's happening more and more frequently, almost feels like an attack.

Do you see these performance problems only when you are in the DAO section, or do they also happen when you are elsewhere? I just want to determine whether the problem is in the ProposalsView, which listens to the changes triggered by this, or whether it is already an issue in non-UI code.

I've seen it reproduce multiple times today while on the Funds -> Send Bitcoin screen.

I just tested one instance that hadn't been synced for a while, and the application froze while receiving all the blocks. Because the app froze, it lost all connections, but the client reconnected afterwards without any problems. The app became responsive again, and I also received the block of remove requests. Being in the market>offer book hasn't caused any connection drops for me so far.

After restarting my synced node, I received the removal requests while in the market>offer book, and this time it froze my app. It didn't disconnect me, but I'm surprised that removing the proposals consumes so much CPU.

OK, I can reproduce it every time. When the remove requests come in while the app is in use, the client freezes completely. Depending on the performance of your instance, this probably also causes connection drops if it takes too long to parse all of these removal requests. @sqrrm @ManfredKarrer Do you have an idea how we could improve the handling of these remove requests? Maybe by parsing them one by one with a delay (if the required bits of the code are necessary for validation)?

Seems UI thread related according to this log print

|

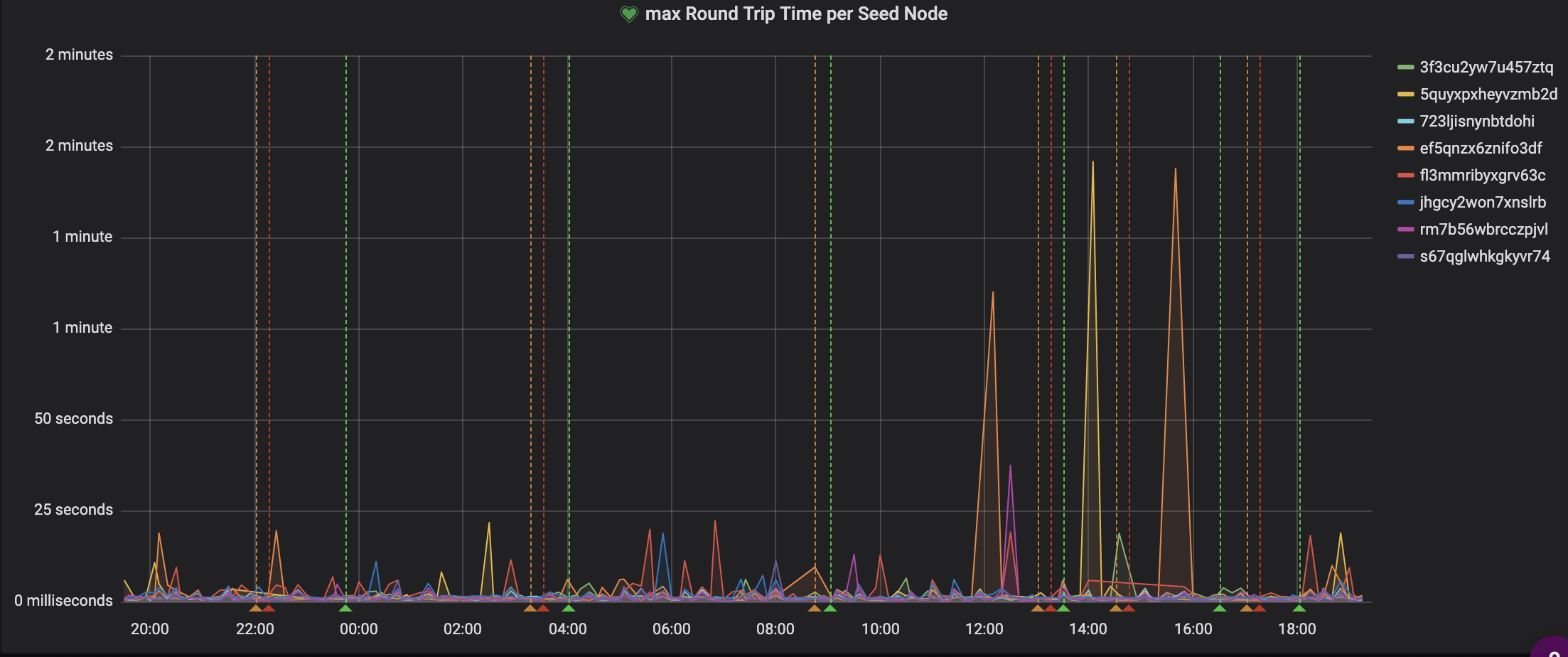

Also take a look how seednode response times have recently been going into the minutes as well https://monitor.bisq.network/d/wTDl6T_iz/p2p-stats?orgId=1&fullscreen&panelId=10&refresh=30s&from=now-24h&to=now |

Looking into it. TempProposalStore should not be added to resources anymore. Each new client gets the old data and then needs to remove it.

The validation calls are slow because they are made on every element each time the list changes. I will optimize this so that the list is only updated once the batch processing is done.
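To make the cost difference concrete, here is a minimal sketch of per-item versus batched listener notification. Everything in it (`BatchRemoveSketch`, `ObservableStore`, `countWork`) is a hypothetical stand-in and not Bisq's actual code; the listener simply counts how many elements it would have to re-validate.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical sketch; none of these names are Bisq classes.
public class BatchRemoveSketch {

    /** Minimal observable list: listeners receive a snapshot after each change. */
    static class ObservableStore<T> {
        private final List<T> items = new ArrayList<>();
        private final List<Consumer<List<T>>> listeners = new ArrayList<>();

        ObservableStore(List<T> initial) { items.addAll(initial); }

        void addListener(Consumer<List<T>> listener) { listeners.add(listener); }

        /** Per-item removal: listeners fire once for every removed element. */
        void removeOneByOne(List<T> toRemove) {
            for (T item : toRemove) {
                if (items.remove(item)) notifyListeners();
            }
        }

        /** Batched removal: listeners fire at most once for the whole batch. */
        void removeBatch(List<T> toRemove) {
            if (items.removeAll(toRemove)) notifyListeners();
        }

        private void notifyListeners() {
            List<T> snapshot = new ArrayList<>(items);
            for (Consumer<List<T>> listener : listeners) listener.accept(snapshot);
        }
    }

    /** Returns {listener callbacks, element validations} when the first removeCount of size items are removed. */
    public static long[] countWork(int size, int removeCount, boolean batched) {
        List<Integer> all = new ArrayList<>();
        for (int i = 0; i < size; i++) all.add(i);
        ObservableStore<Integer> store = new ObservableStore<>(all);

        long[] work = {0, 0};
        // Stand-in for an expensive listener that re-validates every remaining element.
        store.addListener(remaining -> {
            work[0]++;
            work[1] += remaining.size();
        });

        List<Integer> expired = new ArrayList<>(all.subList(0, removeCount));
        if (batched) store.removeBatch(expired); else store.removeOneByOne(expired);
        return work;
    }

    public static void main(String[] args) {
        long[] perItem = countWork(1000, 500, false);
        long[] batched = countWork(1000, 500, true);
        System.out.println("one by one: " + perItem[0] + " callbacks, " + perItem[1] + " validations");
        System.out.println("batched:    " + batched[0] + " callbacks, " + batched[1] + " validations");
    }
}
```

With 1000 items and 500 removals, the per-item path in this sketch triggers 500 callbacks and 374750 element validations, while the batched path triggers 1 callback and 500 validations. The real listeners do different work, so treat these numbers only as an illustration of the quadratic versus linear growth.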

Improve TempProposal processing (fixes #3143)

Reproduced again on master (HEAD=9047ff17a2f2a36236895aaeaaea8300ed0347d4), node was stuck for about 17 seconds:

There are still other performance issues. @christophsturm is trying to find a good profiler so we can see which methods are called too often and how much CPU time they consume. VisualVM is not good enough for that...

…ch removes bisq-network#3143 identified an issue that tempProposals listeners were being signaled once for each item that was removed during the P2PDataStore operation that expired old TempProposal objects. Some of the listeners are very expensive (ProposalListPresentation::updateLists()) which results in large UI performance issues. Now that the infrastructure is in place to receive updates from the P2PDataStore in a batch, the ProposalService can apply all of the removes received from the P2PDataStore at once. This results in only 1 onChanged() callback for each listener. The end result is that updateLists() is only called once and the performance problems are reduced. This removes the need for bisq-network#3148 and those interfaces will be removed in the next patch.



… PersistablePayload (#3640)

* [PR COMMENTS] Make maxSequenceNumberBeforePurge final
  Instead of using a subclass that overwrites a value, utilize Guice to inject the real value of 10000 in the app and let the tests overwrite it with their own.
* [TESTS] Clean up 'Analyze Code' warnings
  Remove unused imports and clean up some access modifiers now that the final test structure is complete.
* [REFACTOR] HashMapListener::onAdded/onRemoved
  Previously, this interface was called each time an item was changed. This required listeners to understand the performance implications of multiple adds or removes in a short time span. Instead, give each listener the ability to process a list of added or removed entries, which can help them avoid performance issues. This patch is just a refactor. Each listener is called once for each ProtectedStorageEntry. Future patches will change this.
* [REFACTOR] removeFromMapAndDataStore can operate on Collections
  Minor performance overhead for constructing MapEntry and Collections of one element, but it keeps the code cleaner, and all removes can still use the same logic to remove from the map, delete from the data store, signal listeners, etc. The MapEntry type is used instead of Pair since it will require fewer operations when this is eventually used in the removeExpiredEntries path.
* Change removeFromMapAndDataStore to signal listeners at the end in a batch
  All current users still call this one-at-a-time. But it gives the expire code path the ability to remove in a batch.
* Update removeExpiredEntries to remove all items in a batch
  This will cause HashMapChangedListeners to receive just one onRemoved() call for the expire work instead of multiple onRemoved() calls for each item. This required a bit of updating for the remove validation in tests so that it correctly compares onRemoved with multiple items.
* ProposalService::onProtectedDataRemoved signals listeners once on batch removes
  #3143 identified an issue where tempProposals listeners were being signaled once for each item that was removed during the P2PDataStore operation that expired old TempProposal objects. Some of the listeners are very expensive (ProposalListPresentation::updateLists()), which results in large UI performance issues. Now that the infrastructure is in place to receive updates from the P2PDataStore in a batch, the ProposalService can apply all of the removes received from the P2PDataStore at once. This results in only 1 onChanged() callback for each listener. The end result is that updateLists() is only called once and the performance problems are reduced. This removes the need for #3148, and those interfaces will be removed in the next patch.
* Remove HashmapChangedListener::onBatch operations
  Now that the only user of this interface has been removed, go ahead and delete it. This is a partial revert of f5d75c4 that includes the code that was added into ProposalService that subscribed to the P2PDataStore.
* [TESTS] Regression test for #3629
  Write a test that shows the incorrect behavior for #3629: the hashmap is rebuilt from disk using the 20-byte key instead of the 32-byte key.
* [BUGFIX] Reconstruct HashMap using 32-byte key
  Addresses the first half of #3629 by ensuring that the reconstructed HashMap always has the 32-byte key for each payload. It turns out the TempProposalStore persists the ProtectedStorageEntrys on disk as a List and doesn't persist the key at all. Then, on reconstruction, it creates the 20-byte key for its internal map. The fix is to update the TempProposalStore to use the 32-byte key instead. This means that all writes, reads, and reconstruction of the TempProposalStore use the 32-byte key, which matches perfectly with the in-memory map of the P2PDataStorage that expects 32-byte keys. Important to note that until all seednodes receive this update, nodes will continue to have both the 20-byte and 32-byte keys in their HashMap.
* [BUGFIX] Use 32-byte key in requestData path
  Addresses the second half of #3629 by using the HashMap, not the protectedDataStore, to generate the known keys in the requestData path. This won't have any bandwidth reduction until all seednodes have the update and only have the 32-byte key in their HashMap. fixes #3629
* [DEAD CODE] Remove getProtectedDataStoreMap
  The only user has been migrated to getMap(). Delete it so future development doesn't have the same 20-byte vs 32-byte key issue.
* [TESTS] Allow tests to validate SequenceNumberMap write separately
  In order to implement remove-before-add behavior, we need a way to verify that the SequenceNumberMap was the only item updated.
* Implement remove-before-add message sequence behavior
  It is possible to receive a RemoveData or RemoveMailboxData message before the relevant AddData, but the current code does not handle it. This results in internal state updates and signal handlers being called when an Add is received with a lower sequence number than a previously seen Remove. Minor test validation changes to allow tests to specify that only the SequenceNumberMap should be written during an operation.
* [TESTS] Allow remove() verification to be more flexible
  Now that we have introduced remove-before-add, we need a way to validate that the SequenceNumberMap was written, but nothing else. Add this feature to the validation path.
* Broadcast remove-before-add messages to P2P network
  In order to aid in propagation of remove() messages, broadcast them in the event the remove is seen before the add.
* [TESTS] Clean up remove verification helpers
  Now that there are cases where the SequenceNumberMap and Broadcast are called, but no other internal state is updated, the existing helper functions conflate too many decisions. Remove them in favor of explicitly defining each state change expected.
* [BUGFIX] Fix duplicate sequence number use case (startup)
  Fix a bug introduced in d484617 that did not properly handle a valid use case for duplicate sequence numbers. For in-memory-only ProtectedStoragePayloads, the client nodes need a way to reconstruct the Payloads after startup from peer and seed nodes. This involves sending a ProtectedStorageEntry with a sequence number that is equal to the last one the client had already seen. This patch adds tests to confirm the bug and fix, as well as the changes necessary to allow adding of Payloads that were previously seen but removed during a restart.
* Clean up AtomicBoolean usage in FileManager
  Although the code was correct, it was hard to understand the relationship between the to-be-written object and the savePending flag. Trade two dependent atomics for one and comment the code to make it clearer for the next reader.
* [DEADCODE] Clean up FileManager.java
* [BUGFIX] Shorter delay values not taking precedence
  Fix a bug in the FileManager where a saveLater called with a low delay won't execute until the delay specified by a previous saveLater call. The trade-off here is the execution of a task that returns early vs. losing the requested delay.
* [REFACTOR] Inline saveNowInternal
  Only one caller after deadcode removal.
* [TESTS] Introduce MapStoreServiceFake
  Now that we want to make changes to the MapStoreService, it isn't sufficient to have a Fake of the ProtectedDataStoreService. Tests now use a REAL ProtectedDataStoreService and a FAKE MapStoreService to exercise more of the production code and allow future testing of changes to MapStoreService.
* Persist changes to ProtectedStorageEntrys
  With the addition of ProtectedStorageEntrys, there are now persistable maps that have different payloads and the same keys. In the ProtectedDataStoreService case, the value is the ProtectedStorageEntry, which has a createdTimeStamp, sequenceNumber, and signature that can all change but still contain an identical payload. Previously, the service was only updating the on-disk representation on the first object and never again. So, when it was recreated from disk, it would not have any of the updated metadata. This was just copied from the append-only implementation, where the value was the Payload, which was immutable. This hasn't caused any issues to this point, but it causes strange behavior such as always receiving seqNr==1 items from seednodes on startup. It is good practice to keep the in-memory objects and on-disk objects in sync, and it removes an unexpected failure in future dev work that expects the same behavior as the append-only on-disk objects.
* [DEADCODE] Remove protectedDataStoreListener
  There were no users.
* [DEADCODE] Remove unused methods in ProtectedDataStoreService
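The remove-before-add behavior mentioned in the commit list above can be illustrated with a small sketch. This is an assumption-laden simplification, not the actual P2PDataStorage code: `RemoveBeforeAddSketch`, its `String` keys and payloads, and the plain counter standing in for listener signals are all hypothetical, and the equal-sequence-number startup case from the list is deliberately left out. The idea shown is that a remove records its sequence number even when no payload is held, so a late add with a lower sequence number is ignored.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch; not the actual P2PDataStorage implementation.
public class RemoveBeforeAddSketch {
    // Highest sequence number seen per key, for adds and removes alike.
    private final Map<String, Integer> sequenceNumberMap = new HashMap<>();
    // Payloads currently held in memory.
    private final Map<String, String> payloads = new HashMap<>();
    // Stand-in for listener signals (onAdded/onRemoved).
    private int listenerCalls = 0;

    /** Accepts an add only if its sequence number is newer than anything seen for the key. */
    public boolean add(String key, int sequenceNumber, String payload) {
        if (sequenceNumber <= lastSeen(key)) return false; // stale: a newer add or remove was already seen
        sequenceNumberMap.put(key, sequenceNumber);
        payloads.put(key, payload);
        listenerCalls++;
        return true;
    }

    /** Records the remove's sequence number even if the payload was never added. */
    public boolean remove(String key, int sequenceNumber) {
        if (sequenceNumber <= lastSeen(key)) return false;
        sequenceNumberMap.put(key, sequenceNumber);
        // Only signal listeners when something was actually removed.
        if (payloads.remove(key) != null) listenerCalls++;
        return true;
    }

    private int lastSeen(String key) { return sequenceNumberMap.getOrDefault(key, 0); }

    public boolean contains(String key) { return payloads.containsKey(key); }

    public int listenerCalls() { return listenerCalls; }

    public static void main(String[] args) {
        RemoveBeforeAddSketch store = new RemoveBeforeAddSketch();
        System.out.println("remove seq 2 accepted: " + store.remove("proposal", 2));
        System.out.println("late add seq 1 accepted: " + store.add("proposal", 1, "payload"));
        System.out.println("payload held: " + store.contains("proposal"));
        System.out.println("listener calls: " + store.listenerCalls());
    }
}
```

In this sketch the early remove updates only the sequence number map, which mirrors the commit list's point that tests need to verify that the SequenceNumberMap was written and nothing else.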

Here is one example where the node was completely unresponsive for 30+ seconds while processing these events
