diff --git a/README.md b/README.md

index d78295523d6519..fc68777e10101b 100644

--- a/README.md

+++ b/README.md

@@ -110,8 +110,11 @@ Here are the companies that have officially adopted DataHub. Please feel free to

- [Adevinta](https://www.adevinta.com/)

- [Banksalad](https://www.banksalad.com)

- [Cabify](https://cabify.tech/)

+- [ClassDojo](https://www.classdojo.com/)

+- [Coursera](https://www.coursera.org/)

- [DefinedCrowd](http://www.definedcrowd.com)

- [DFDS](https://www.dfds.com/)

+- [Digital Turbine](https://www.digitalturbine.com/)

- [Expedia Group](http://expedia.com)

- [Experius](https://www.experius.nl)

- [Geotab](https://www.geotab.com)

@@ -122,15 +125,21 @@ Here are the companies that have officially adopted DataHub. Please feel free to

- [Klarna](https://www.klarna.com)

- [LinkedIn](http://linkedin.com)

- [Moloco](https://www.moloco.com/en)

+- [N26](https://n26brasil.com/)

+- [Optum](https://www.optum.com/)

- [Peloton](https://www.onepeloton.com)

- [Razer](https://www.razer.com)

- [Saxo Bank](https://www.home.saxo)

+- [Showroomprive](https://www.showroomprive.com/)

+- [SpotHero](https://spothero.com)

- [Stash](https://www.stash.com)

- [Shanghai HuaRui Bank](https://www.shrbank.com)

- [ThoughtWorks](https://www.thoughtworks.com)

- [TypeForm](http://typeform.com)

+- [Udemy](https://www.udemy.com/)

- [Uphold](https://uphold.com)

- [Viasat](https://viasat.com)

+- [Wikimedia](https://www.wikimedia.org)

- [Wolt](https://wolt.com)

- [Zynga](https://www.zynga.com)

diff --git a/build.gradle b/build.gradle

index b57419e285a38c..044699c58e8544 100644

--- a/build.gradle

+++ b/build.gradle

@@ -10,6 +10,10 @@ buildscript {

ext.testContainersVersion = '1.17.4'

ext.jacksonVersion = '2.13.4'

ext.jettyVersion = '9.4.46.v20220331'

+ ext.log4jVersion = '2.19.0'

+ ext.slf4jVersion = '1.7.32'

+ ext.logbackClassic = '1.2.11'

+

apply from: './repositories.gradle'

buildscript.repositories.addAll(project.repositories)

dependencies {

@@ -55,6 +59,8 @@ project.ext.externalDependency = [

'awsGlueSchemaRegistrySerde': 'software.amazon.glue:schema-registry-serde:1.1.10',

'awsMskIamAuth': 'software.amazon.msk:aws-msk-iam-auth:1.1.1',

'awsSecretsManagerJdbc': 'com.amazonaws.secretsmanager:aws-secretsmanager-jdbc:1.0.8',

+ 'awsPostgresIamAuth': 'software.amazon.jdbc:aws-advanced-jdbc-wrapper:1.0.0',

+ 'awsRds': 'software.amazon.awssdk:rds:2.18.24',

'cacheApi' : 'javax.cache:cache-api:1.1.0',

'commonsCli': 'commons-cli:commons-cli:1.5.0',

'commonsIo': 'commons-io:commons-io:2.4',

@@ -112,9 +118,11 @@ project.ext.externalDependency = [

'kafkaAvroSerde': 'io.confluent:kafka-streams-avro-serde:5.5.1',

'kafkaAvroSerializer': 'io.confluent:kafka-avro-serializer:5.1.4',

'kafkaClients': 'org.apache.kafka:kafka-clients:2.3.0',

- 'logbackClassic': 'ch.qos.logback:logback-classic:1.2.9',

- 'log4jCore': 'org.apache.logging.log4j:log4j-core:2.19.0',

- 'log4jApi': 'org.apache.logging.log4j:log4j-api:2.19.0',

+ 'logbackClassic': "ch.qos.logback:logback-classic:$logbackClassic",

+ 'slf4jApi': "org.slf4j:slf4j-api:$slf4jVersion",

+ 'log4jCore': "org.apache.logging.log4j:log4j-core:$log4jVersion",

+ 'log4jApi': "org.apache.logging.log4j:log4j-api:$log4jVersion",

+ 'log4j12Api': "org.slf4j:log4j-over-slf4j:$slf4jVersion",

'lombok': 'org.projectlombok:lombok:1.18.12',

'mariadbConnector': 'org.mariadb.jdbc:mariadb-java-client:2.6.0',

'mavenArtifact': "org.apache.maven:maven-artifact:$mavenVersion",

@@ -193,15 +201,12 @@ configure(subprojects.findAll {! it.name.startsWith('spark-lineage') }) {

exclude group: "io.netty", module: "netty"

exclude group: "log4j", module: "log4j"

exclude group: "org.springframework.boot", module: "spring-boot-starter-logging"

- exclude group: "ch.qos.logback", module: "logback-classic"

exclude group: "org.apache.logging.log4j", module: "log4j-to-slf4j"

exclude group: "com.vaadin.external.google", module: "android-json"

exclude group: "org.slf4j", module: "slf4j-reload4j"

exclude group: "org.slf4j", module: "slf4j-log4j12"

exclude group: "org.slf4j", module: "slf4j-nop"

exclude group: "org.slf4j", module: "slf4j-ext"

- exclude group: "org.slf4j", module: "jul-to-slf4j"

- exclude group: "org.slf4j", module: "jcl-over-toslf4j"

}

}

diff --git a/datahub-frontend/play.gradle b/datahub-frontend/play.gradle

index 579449e9e39b16..f6ecd57534dfa6 100644

--- a/datahub-frontend/play.gradle

+++ b/datahub-frontend/play.gradle

@@ -55,13 +55,14 @@ dependencies {

testImplementation externalDependency.playTest

testCompile externalDependency.testng

+ implementation externalDependency.slf4jApi

compileOnly externalDependency.lombok

runtime externalDependency.guice

runtime (externalDependency.playDocs) {

exclude group: 'com.typesafe.akka', module: 'akka-http-core_2.12'

}

runtime externalDependency.playGuice

- runtime externalDependency.logbackClassic

+ implementation externalDependency.logbackClassic

annotationProcessor externalDependency.lombok

}

diff --git a/datahub-graphql-core/build.gradle b/datahub-graphql-core/build.gradle

index aa13ce05d7d59e..528054833bb9aa 100644

--- a/datahub-graphql-core/build.gradle

+++ b/datahub-graphql-core/build.gradle

@@ -15,6 +15,7 @@ dependencies {

compile externalDependency.antlr4

compile externalDependency.guava

+ implementation externalDependency.slf4jApi

compileOnly externalDependency.lombok

annotationProcessor externalDependency.lombok

diff --git a/datahub-ranger-plugin/build.gradle b/datahub-ranger-plugin/build.gradle

index b3277a664af22f..a08d3f2b1e4c9c 100644

--- a/datahub-ranger-plugin/build.gradle

+++ b/datahub-ranger-plugin/build.gradle

@@ -28,7 +28,7 @@ dependencies {

exclude group: "org.apache.htrace", module: "htrace-core4"

}

implementation externalDependency.hadoopCommon3

- implementation externalDependency.log4jApi

+ implementation externalDependency.log4j12Api

constraints {

implementation(externalDependency.woodstoxCore) {

diff --git a/datahub-upgrade/build.gradle b/datahub-upgrade/build.gradle

index 49872fa111d514..4d4d2b99390bcf 100644

--- a/datahub-upgrade/build.gradle

+++ b/datahub-upgrade/build.gradle

@@ -14,7 +14,8 @@ dependencies {

exclude group: 'com.nimbusds', module: 'nimbus-jose-jwt'

exclude group: "org.apache.htrace", module: "htrace-core4"

}

- compile externalDependency.lombok

+ implementation externalDependency.slf4jApi

+ compileOnly externalDependency.lombok

compile externalDependency.picocli

compile externalDependency.parquet

compile externalDependency.springBeans

diff --git a/datahub-web-react/src/app/analyticsDashboard/components/AnalyticsPage.tsx b/datahub-web-react/src/app/analyticsDashboard/components/AnalyticsPage.tsx

index 76bd2e34eddeaa..440d16f518fe03 100644

--- a/datahub-web-react/src/app/analyticsDashboard/components/AnalyticsPage.tsx

+++ b/datahub-web-react/src/app/analyticsDashboard/components/AnalyticsPage.tsx

@@ -10,6 +10,7 @@ import { Message } from '../../shared/Message';

import { useListDomainsQuery } from '../../../graphql/domain.generated';

import filterSearchQuery from '../../search/utils/filterSearchQuery';

import { ANTD_GRAY } from '../../entity/shared/constants';

+import { useGetAuthenticatedUser } from '../../useGetAuthenticatedUser';

const HighlightGroup = styled.div`

display: flex;

@@ -46,6 +47,8 @@ const StyledSearchBar = styled(Input)`

`;

export const AnalyticsPage = () => {

+ const me = useGetAuthenticatedUser();

+ const canManageDomains = me?.platformPrivileges?.createDomains;

const { data: chartData, loading: chartLoading, error: chartError } = useGetAnalyticsChartsQuery();

const { data: highlightData, loading: highlightLoading, error: highlightError } = useGetHighlightsQuery();

const {

@@ -53,6 +56,7 @@ export const AnalyticsPage = () => {

error: domainError,

data: domainData,

} = useListDomainsQuery({

+ skip: !canManageDomains,

variables: {

input: {

start: 0,

@@ -82,12 +86,11 @@ export const AnalyticsPage = () => {

skip: domain === '' && query === '',

});

+ const isLoading = highlightLoading || chartLoading || domainLoading || metadataAnalyticsLoading;

return (

<>

+ {isLoading && }

- {highlightLoading && (

-

- )}

{highlightError && (

)}

@@ -96,7 +99,6 @@ export const AnalyticsPage = () => {

))}

<>

- {chartLoading && }

{chartError && (

)}

@@ -107,7 +109,6 @@ export const AnalyticsPage = () => {

))}

<>

- {domainLoading && }

{domainError && (

)}

@@ -148,9 +149,6 @@ export const AnalyticsPage = () => {

)}

<>

- {metadataAnalyticsLoading && (

-

- )}

{metadataAnalyticsError && (

)}

@@ -165,7 +163,6 @@ export const AnalyticsPage = () => {

))}

<>

- {chartLoading && }

{chartError && }

{!chartLoading &&

chartData?.getAnalyticsCharts

diff --git a/datahub-web-react/src/app/entity/mlFeatureTable/profile/features/MlFeatureTableFeatures.tsx b/datahub-web-react/src/app/entity/mlFeatureTable/profile/features/MlFeatureTableFeatures.tsx

index 8f908e3a2e486b..7a3a933140e2d3 100644

--- a/datahub-web-react/src/app/entity/mlFeatureTable/profile/features/MlFeatureTableFeatures.tsx

+++ b/datahub-web-react/src/app/entity/mlFeatureTable/profile/features/MlFeatureTableFeatures.tsx

@@ -1,163 +1,23 @@

-import React, { useState } from 'react';

-import { Table, Typography } from 'antd';

-import { CheckSquareOutlined } from '@ant-design/icons';

-import { AlignType } from 'rc-table/lib/interface';

-import styled from 'styled-components';

-import { Link } from 'react-router-dom';

+import React from 'react';

-import MlFeatureDataTypeIcon from './MlFeatureDataTypeIcon';

-import { MlFeatureDataType, MlPrimaryKey, MlFeature } from '../../../../../types.generated';

+import { MlPrimaryKey, MlFeature } from '../../../../../types.generated';

import { GetMlFeatureTableQuery } from '../../../../../graphql/mlFeatureTable.generated';

-import { useBaseEntity, useRefetch } from '../../../shared/EntityContext';

+import { useBaseEntity } from '../../../shared/EntityContext';

import { notEmpty } from '../../../shared/utils';

-import TagTermGroup from '../../../../shared/tags/TagTermGroup';

-import SchemaDescriptionField from '../../../dataset/profile/schema/components/SchemaDescriptionField';

-import { useUpdateDescriptionMutation } from '../../../../../graphql/mutations.generated';

-import { useEntityRegistry } from '../../../../useEntityRegistry';

-

-const FeaturesContainer = styled.div`

- margin-bottom: 100px;

-`;

-

-const defaultColumns = [

- {

- title: 'Type',

- dataIndex: 'dataType',

- key: 'dataType',

- width: 100,

- align: 'left' as AlignType,

- render: (dataType: MlFeatureDataType) => {

- return ;

- },

- },

-];

+import TableOfMlFeatures from './TableOfMlFeatures';

export default function MlFeatureTableFeatures() {

const baseEntity = useBaseEntity();

- const refetch = useRefetch();

const featureTable = baseEntity?.mlFeatureTable;

- const [updateDescription] = useUpdateDescriptionMutation();

- const entityRegistry = useEntityRegistry();

-

- const [tagHoveredIndex, setTagHoveredIndex] = useState(undefined);

- const features =

+ const features = (

featureTable?.properties && (featureTable?.properties?.mlFeatures || featureTable?.properties?.mlPrimaryKeys)

? [

...(featureTable?.properties?.mlPrimaryKeys || []),

...(featureTable?.properties?.mlFeatures || []),

].filter(notEmpty)

- : [];

-

- const onTagTermCell = (record: any, rowIndex: number | undefined) => ({

- onMouseEnter: () => {

- setTagHoveredIndex(`${record.urn}-${rowIndex}`);

- },

- onMouseLeave: () => {

- setTagHoveredIndex(undefined);

- },

- });

-

- const nameColumn = {

- title: 'Name',

- dataIndex: 'name',

- key: 'name',

- width: 100,

- render: (name: string, feature: MlFeature | MlPrimaryKey) => (

-

- {name}

-

- ),

- };

-

- const descriptionColumn = {

- title: 'Description',

- dataIndex: 'description',

- key: 'description',

- render: (_, feature: MlFeature | MlPrimaryKey) => (

-

- updateDescription({

- variables: {

- input: {

- description: updatedDescription,

- resourceUrn: feature.urn,

- },

- },

- }).then(refetch)

- }

- />

- ),

- width: 300,

- };

-

- const tagColumn = {

- width: 125,

- title: 'Tags',

- dataIndex: 'tags',

- key: 'tags',

- render: (_, feature: MlFeature | MlPrimaryKey, rowIndex: number) => (

- setTagHoveredIndex(undefined)}

- entityUrn={feature.urn}

- entityType={feature.type}

- refetch={refetch}

- />

- ),

- onCell: onTagTermCell,

- };

-

- const termColumn = {

- width: 125,

- title: 'Terms',

- dataIndex: 'glossaryTerms',

- key: 'glossarTerms',

- render: (_, feature: MlFeature | MlPrimaryKey, rowIndex: number) => (

- setTagHoveredIndex(undefined)}

- entityUrn={feature.urn}

- entityType={feature.type}

- refetch={refetch}

- />

- ),

- onCell: onTagTermCell,

- };

-

- const primaryKeyColumn = {

- title: 'Primary Key',

- dataIndex: 'primaryKey',

- key: 'primaryKey',

- render: (_: any, record: MlFeature | MlPrimaryKey) =>

- record.__typename === 'MLPrimaryKey' ? : null,

- width: 50,

- };

-

- const allColumns = [...defaultColumns, nameColumn, descriptionColumn, tagColumn, termColumn, primaryKeyColumn];

+ : []

+ ) as Array<MlFeature | MlPrimaryKey>;

- return (

-

- {features && features.length > 0 && (

- `${record.dataType}-${record.name}`}

- expandable={{ defaultExpandAllRows: true, expandRowByClick: true }}

- pagination={false}

- />

- )}

-

- );

+ return <TableOfMlFeatures features={features} />;

}

diff --git a/datahub-web-react/src/app/entity/mlFeatureTable/profile/features/TableOfMlFeatures.tsx b/datahub-web-react/src/app/entity/mlFeatureTable/profile/features/TableOfMlFeatures.tsx

new file mode 100644

index 00000000000000..cf0bb808b3278d

--- /dev/null

+++ b/datahub-web-react/src/app/entity/mlFeatureTable/profile/features/TableOfMlFeatures.tsx

@@ -0,0 +1,155 @@

+import React, { useState } from 'react';

+import { Table, Typography } from 'antd';

+import { CheckSquareOutlined } from '@ant-design/icons';

+import { AlignType } from 'rc-table/lib/interface';

+import styled from 'styled-components';

+import { Link } from 'react-router-dom';

+

+import MlFeatureDataTypeIcon from './MlFeatureDataTypeIcon';

+import { MlFeatureDataType, MlPrimaryKey, MlFeature } from '../../../../../types.generated';

+import { useRefetch } from '../../../shared/EntityContext';

+import TagTermGroup from '../../../../shared/tags/TagTermGroup';

+import SchemaDescriptionField from '../../../dataset/profile/schema/components/SchemaDescriptionField';

+import { useUpdateDescriptionMutation } from '../../../../../graphql/mutations.generated';

+import { useEntityRegistry } from '../../../../useEntityRegistry';

+

+const FeaturesContainer = styled.div`

+ margin-bottom: 100px;

+`;

+

+const defaultColumns = [

+ {

+ title: 'Type',

+ dataIndex: 'dataType',

+ key: 'dataType',

+ width: 100,

+ align: 'left' as AlignType,

+ render: (dataType: MlFeatureDataType) => {

+ return ;

+ },

+ },

+];

+

+type Props = {

+ features: Array<MlFeature | MlPrimaryKey>;

+};

+

+export default function TableOfMlFeatures({ features }: Props) {

+ const refetch = useRefetch();

+ const [updateDescription] = useUpdateDescriptionMutation();

+ const entityRegistry = useEntityRegistry();

+

+ const [tagHoveredIndex, setTagHoveredIndex] = useState<string | undefined>(undefined);

+

+ const onTagTermCell = (record: any, rowIndex: number | undefined) => ({

+ onMouseEnter: () => {

+ setTagHoveredIndex(`${record.urn}-${rowIndex}`);

+ },

+ onMouseLeave: () => {

+ setTagHoveredIndex(undefined);

+ },

+ });

+

+ const nameColumn = {

+ title: 'Name',

+ dataIndex: 'name',

+ key: 'name',

+ width: 100,

+ render: (name: string, feature: MlFeature | MlPrimaryKey) => (

+

+ {name}

+

+ ),

+ };

+

+ const descriptionColumn = {

+ title: 'Description',

+ dataIndex: 'description',

+ key: 'description',

+ render: (_, feature: MlFeature | MlPrimaryKey) => (

+

+ updateDescription({

+ variables: {

+ input: {

+ description: updatedDescription,

+ resourceUrn: feature.urn,

+ },

+ },

+ }).then(refetch)

+ }

+ />

+ ),

+ width: 300,

+ };

+

+ const tagColumn = {

+ width: 125,

+ title: 'Tags',

+ dataIndex: 'tags',

+ key: 'tags',

+ render: (_, feature: MlFeature | MlPrimaryKey, rowIndex: number) => (

+ setTagHoveredIndex(undefined)}

+ entityUrn={feature.urn}

+ entityType={feature.type}

+ refetch={refetch}

+ />

+ ),

+ onCell: onTagTermCell,

+ };

+

+ const termColumn = {

+ width: 125,

+ title: 'Terms',

+ dataIndex: 'glossaryTerms',

+ key: 'glossaryTerms',

+ render: (_, feature: MlFeature | MlPrimaryKey, rowIndex: number) => (

+ setTagHoveredIndex(undefined)}

+ entityUrn={feature.urn}

+ entityType={feature.type}

+ refetch={refetch}

+ />

+ ),

+ onCell: onTagTermCell,

+ };

+

+ const primaryKeyColumn = {

+ title: 'Primary Key',

+ dataIndex: 'primaryKey',

+ key: 'primaryKey',

+ render: (_: any, record: MlFeature | MlPrimaryKey) =>

+ record.__typename === 'MLPrimaryKey' ? : null,

+ width: 50,

+ };

+

+ const allColumns = [...defaultColumns, nameColumn, descriptionColumn, tagColumn, termColumn, primaryKeyColumn];

+

+ return (

+

+ {features && features.length > 0 && (

+ `${record.dataType}-${record.name}`}

+ expandable={{ defaultExpandAllRows: true, expandRowByClick: true }}

+ pagination={false}

+ />

+ )}

+

+ );

+}

diff --git a/datahub-web-react/src/app/entity/mlModel/profile/MlModelFeaturesTab.tsx b/datahub-web-react/src/app/entity/mlModel/profile/MlModelFeaturesTab.tsx

index 65c6a0c9b84a72..b8dc64793c2256 100644

--- a/datahub-web-react/src/app/entity/mlModel/profile/MlModelFeaturesTab.tsx

+++ b/datahub-web-react/src/app/entity/mlModel/profile/MlModelFeaturesTab.tsx

@@ -1,15 +1,17 @@

import React from 'react';

-import { EntityType } from '../../../../types.generated';

+import { MlPrimaryKey, MlFeature } from '../../../../types.generated';

import { useBaseEntity } from '../../shared/EntityContext';

import { GetMlModelQuery } from '../../../../graphql/mlModel.generated';

-import { EntityList } from '../../shared/tabs/Entity/components/EntityList';

+import TableOfMlFeatures from '../../mlFeatureTable/profile/features/TableOfMlFeatures';

export default function MlModelFeaturesTab() {

const entity = useBaseEntity() as GetMlModelQuery;

const model = entity && entity.mlModel;

- const features = model?.features?.relationships.map((relationship) => relationship.entity);

+ const features = model?.features?.relationships.map((relationship) => relationship.entity) as Array<

+ MlFeature | MlPrimaryKey

+ >;

- return ;

+ return <TableOfMlFeatures features={features} />;

}

diff --git a/datahub-web-react/src/app/recommendations/renderer/component/EntityNameList.tsx b/datahub-web-react/src/app/recommendations/renderer/component/EntityNameList.tsx

index 6323a8ac74bb51..73819d1f9fcf5d 100644

--- a/datahub-web-react/src/app/recommendations/renderer/component/EntityNameList.tsx

+++ b/datahub-web-react/src/app/recommendations/renderer/component/EntityNameList.tsx

@@ -13,7 +13,7 @@ const StyledCheckbox = styled(Checkbox)`

`;

const StyledList = styled(List)`

- overflow-y: scroll;

+ overflow-y: auto;

height: 100%;

margin-top: -1px;

box-shadow: ${(props) => props.theme.styles['box-shadow']};

diff --git a/datahub-web-react/src/app/search/AdvancedSearchFilters.tsx b/datahub-web-react/src/app/search/AdvancedSearchFilters.tsx

index f4e70e1b9007d4..ab242b49fed69f 100644

--- a/datahub-web-react/src/app/search/AdvancedSearchFilters.tsx

+++ b/datahub-web-react/src/app/search/AdvancedSearchFilters.tsx

@@ -11,11 +11,9 @@ import { FIELDS_THAT_USE_CONTAINS_OPERATOR, UnionType } from './utils/constants'

import { AdvancedSearchAddFilterSelect } from './AdvancedSearchAddFilterSelect';

export const SearchFilterWrapper = styled.div`

- min-height: 100%;

+ flex: 1;

+ padding: 6px 12px 10px 12px;

overflow: auto;

- margin-top: 6px;

- margin-left: 12px;

- margin-right: 12px;

&::-webkit-scrollbar {

height: 12px;

diff --git a/datahub-web-react/src/app/search/SearchFiltersSection.tsx b/datahub-web-react/src/app/search/SearchFiltersSection.tsx

index a2fca0605b4ec3..cca78ae2ae4923 100644

--- a/datahub-web-react/src/app/search/SearchFiltersSection.tsx

+++ b/datahub-web-react/src/app/search/SearchFiltersSection.tsx

@@ -17,7 +17,8 @@ type Props = {

};

const FiltersContainer = styled.div`

- display: block;

+ display: flex;

+ flex-direction: column;

max-width: 260px;

min-width: 260px;

overflow-wrap: break-word;

@@ -45,7 +46,8 @@ const FiltersHeader = styled.div`

`;

const SearchFilterContainer = styled.div`

- padding-top: 10px;

+ flex: 1;

+ overflow: auto;

`;

// This component renders the entire filters section that allows toggling

diff --git a/datahub-web-react/src/app/search/SimpleSearchFilters.tsx b/datahub-web-react/src/app/search/SimpleSearchFilters.tsx

index e6b4da2f455310..654341be7715c5 100644

--- a/datahub-web-react/src/app/search/SimpleSearchFilters.tsx

+++ b/datahub-web-react/src/app/search/SimpleSearchFilters.tsx

@@ -7,6 +7,7 @@ import { SimpleSearchFilter } from './SimpleSearchFilter';

const TOP_FILTERS = ['degree', 'entity', 'tags', 'glossaryTerms', 'domains', 'owners'];

export const SearchFilterWrapper = styled.div`

+ padding-top: 10px;

max-height: 100%;

overflow: auto;

diff --git a/datahub-web-react/src/graphql/mlModel.graphql b/datahub-web-react/src/graphql/mlModel.graphql

index 5d60be86a31c2b..91280f0904c558 100644

--- a/datahub-web-react/src/graphql/mlModel.graphql

+++ b/datahub-web-react/src/graphql/mlModel.graphql

@@ -8,7 +8,21 @@ query getMLModel($urn: String!) {

...partialLineageResults

}

features: relationships(input: { types: ["Consumes"], direction: OUTGOING, start: 0, count: 100 }) {

- ...fullRelationshipResults

+ start

+ count

+ total

+ relationships {

+ type

+ direction

+ entity {

+ ... on MLFeature {

+ ...nonRecursiveMLFeature

+ }

+ ... on MLPrimaryKey {

+ ...nonRecursiveMLPrimaryKey

+ }

+ }

+ }

}

}

}

diff --git a/docker/datahub-actions/env/docker.env b/docker/datahub-actions/env/docker.env

index 48fb0b080d2436..363d9bc578b426 100644

--- a/docker/datahub-actions/env/docker.env

+++ b/docker/datahub-actions/env/docker.env

@@ -20,3 +20,18 @@ KAFKA_PROPERTIES_SECURITY_PROTOCOL=PLAINTEXT

# KAFKA_PROPERTIES_SSL_KEYSTORE_PASSWORD=keystore_password

# KAFKA_PROPERTIES_SSL_KEY_PASSWORD=keystore_password

# KAFKA_PROPERTIES_SSL_TRUSTSTORE_PASSWORD=truststore_password

+

+# The following env vars are passed through from the host system

+# to configure the Slack and Teams Actions.

+# Each *_ENABLED flag must be set to exactly "true" (case-sensitive) to enable its action.

+DATAHUB_ACTIONS_SLACK_ENABLED

+DATAHUB_ACTIONS_SLACK_DATAHUB_BASE_URL

+DATAHUB_ACTIONS_SLACK_BOT_TOKEN

+DATAHUB_ACTIONS_SLACK_SIGNING_SECRET

+DATAHUB_ACTIONS_SLACK_CHANNEL

+DATAHUB_ACTIONS_SLACK_SUPPRESS_SYSTEM_ACTIVITY

+

+DATAHUB_ACTIONS_TEAMS_ENABLED

+DATAHUB_ACTIONS_TEAMS_DATAHUB_BASE_URL

+DATAHUB_ACTIONS_TEAMS_WEBHOOK_URL

+DATAHUB_ACTIONS_TEAMS_SUPPRESS_SYSTEM_ACTIVITY
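The `*_ENABLED` comment in `docker.env` describes a strict, case-sensitive check. The following is a minimal Python sketch of that rule, using a hypothetical helper `action_enabled`; it only illustrates the stated rule and is not code from `datahub-actions`.

```python
from collections.abc import Mapping
from typing import Optional


def action_enabled(env: Mapping, action: str) -> bool:
    """Hypothetical helper: True only if DATAHUB_ACTIONS_<ACTION>_ENABLED == "true".

    Illustrates the case-sensitive rule described in docker.env; it is not
    taken from the datahub-actions codebase.
    """
    value: Optional[str] = env.get(f"DATAHUB_ACTIONS_{action.upper()}_ENABLED")
    # Missing variables (None) and other spellings such as "True" count as disabled.
    return value == "true"


if __name__ == "__main__":
    env = {
        "DATAHUB_ACTIONS_SLACK_ENABLED": "true",
        "DATAHUB_ACTIONS_TEAMS_ENABLED": "True",  # wrong case, so Teams stays disabled
    }
    print(action_enabled(env, "slack"))  # True
    print(action_enabled(env, "teams"))  # False
```

Inside the container, the same check could be run against `os.environ`, which is where the pass-through values land.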

diff --git a/docker/datahub-gms/env/docker.postgres.env b/docker/datahub-gms/env/docker.postgres.env

index 0f4f78dccb77b8..f99134ebb02388 100644

--- a/docker/datahub-gms/env/docker.postgres.env

+++ b/docker/datahub-gms/env/docker.postgres.env

@@ -3,6 +3,9 @@ EBEAN_DATASOURCE_PASSWORD=datahub

EBEAN_DATASOURCE_HOST=postgres:5432

EBEAN_DATASOURCE_URL=jdbc:postgresql://postgres:5432/datahub

EBEAN_DATASOURCE_DRIVER=org.postgresql.Driver

+# Uncomment EBEAN_POSTGRES_USE_AWS_IAM_AUTH below to enable AWS IAM authentication for Postgres.

+# A password is not required when connecting with IAM auth; EBEAN_DATASOURCE_PASSWORD can be set to a dummy value.

+# EBEAN_POSTGRES_USE_AWS_IAM_AUTH=true

KAFKA_BOOTSTRAP_SERVER=broker:29092

KAFKA_SCHEMAREGISTRY_URL=http://schema-registry:8081

ELASTICSEARCH_HOST=elasticsearch

diff --git a/docker/mariadb/init.sql b/docker/mariadb/init.sql

index 084fdc93a3717a..c4132575cf442c 100644

--- a/docker/mariadb/init.sql

+++ b/docker/mariadb/init.sql

@@ -11,6 +11,8 @@ create table metadata_aspect_v2 (

constraint pk_metadata_aspect_v2 primary key (urn,aspect,version)

);

+create index timeIndex ON metadata_aspect_v2 (createdon);

+

insert into metadata_aspect_v2 (urn, aspect, version, metadata, createdon, createdby) values(

'urn:li:corpuser:datahub',

'corpUserInfo',

diff --git a/docker/mysql-setup/init.sql b/docker/mysql-setup/init.sql

index 6bd7133a359a89..78098af4648bce 100644

--- a/docker/mysql-setup/init.sql

+++ b/docker/mysql-setup/init.sql

@@ -12,7 +12,8 @@ create table if not exists metadata_aspect_v2 (

createdon datetime(6) not null,

createdby varchar(255) not null,

createdfor varchar(255),

- constraint pk_metadata_aspect_v2 primary key (urn,aspect,version)

+ constraint pk_metadata_aspect_v2 primary key (urn,aspect,version),

+ INDEX timeIndex (createdon)

);

-- create default records for datahub user if not exists

diff --git a/docker/mysql/init.sql b/docker/mysql/init.sql

index fa9d856f499e4e..97ae3ea1467445 100644

--- a/docker/mysql/init.sql

+++ b/docker/mysql/init.sql

@@ -8,7 +8,8 @@ CREATE TABLE metadata_aspect_v2 (

createdon datetime(6) NOT NULL,

createdby VARCHAR(255) NOT NULL,

createdfor VARCHAR(255),

- CONSTRAINT pk_metadata_aspect_v2 PRIMARY KEY (urn,aspect,version)

+ CONSTRAINT pk_metadata_aspect_v2 PRIMARY KEY (urn,aspect,version),

+ INDEX timeIndex (createdon)

) CHARACTER SET utf8mb4 COLLATE utf8mb4_bin;

INSERT INTO metadata_aspect_v2 (urn, aspect, version, metadata, createdon, createdby) VALUES(

diff --git a/docker/postgres-setup/init.sql b/docker/postgres-setup/init.sql

index e7c515e7385acc..12fff7aec7fe6f 100644

--- a/docker/postgres-setup/init.sql

+++ b/docker/postgres-setup/init.sql

@@ -11,6 +11,8 @@ CREATE TABLE IF NOT EXISTS metadata_aspect_v2 (

CONSTRAINT pk_metadata_aspect_v2 PRIMARY KEY (urn, aspect, version)

);

+CREATE INDEX IF NOT EXISTS timeIndex ON metadata_aspect_v2 (createdon);

+

-- create default records for datahub user if not exists

CREATE TEMP TABLE temp_metadata_aspect_v2 AS TABLE metadata_aspect_v2;

INSERT INTO temp_metadata_aspect_v2 (urn, aspect, version, metadata, createdon, createdby) VALUES(

diff --git a/docker/postgres/init.sql b/docker/postgres/init.sql

index 72298ed4b6726d..4da8adaf8a6da0 100644

--- a/docker/postgres/init.sql

+++ b/docker/postgres/init.sql

@@ -11,6 +11,8 @@ create table metadata_aspect_v2 (

constraint pk_metadata_aspect_v2 primary key (urn,aspect,version)

);

+create index timeIndex ON metadata_aspect_v2 (createdon);

+

insert into metadata_aspect_v2 (urn, aspect, version, metadata, createdon, createdby) values(

'urn:li:corpuser:datahub',

'corpUserInfo',
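The init scripts above add a `timeIndex` on `createdon`, presumably so that lookups by creation time can avoid a full table scan. As an illustration only, the sketch below uses Python's built-in `sqlite3` as a stand-in engine (the MySQL, MariaDB and Postgres planners behave differently) with a simplified schema, and prints the query plan for a time-range query. The helper `time_range_plan` is hypothetical.

```python
import sqlite3
from typing import List


def time_range_plan(conn: sqlite3.Connection, start: str, end: str) -> List[str]:
    """Return EXPLAIN QUERY PLAN details for a createdon time-range query."""
    rows = conn.execute(
        "EXPLAIN QUERY PLAN "
        "SELECT urn, aspect, version FROM metadata_aspect_v2 "
        "WHERE createdon >= ? AND createdon < ?",
        (start, end),
    ).fetchall()
    # The human-readable step description is the last column of each plan row.
    return [row[-1] for row in rows]


conn = sqlite3.connect(":memory:")
# Simplified SQLite stand-in for metadata_aspect_v2 (not the MySQL/Postgres DDL).
conn.execute(
    """
    CREATE TABLE metadata_aspect_v2 (
        urn TEXT NOT NULL,
        aspect TEXT NOT NULL,
        version INTEGER NOT NULL,
        metadata TEXT NOT NULL,
        createdon TEXT NOT NULL,
        createdby TEXT NOT NULL,
        createdfor TEXT,
        CONSTRAINT pk_metadata_aspect_v2 PRIMARY KEY (urn, aspect, version)
    )
    """
)
# Same index definition as the init scripts in this diff.
conn.execute("CREATE INDEX timeIndex ON metadata_aspect_v2 (createdon)")

for detail in time_range_plan(conn, "2022-11-01", "2022-12-01"):
    print(detail)  # expected to mention "USING INDEX timeIndex"
```

Dropping the `CREATE INDEX` line and rerunning should turn the plan into a full `SCAN` of the table, which is the cost the new index is meant to avoid.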

diff --git a/docker/quickstart/docker-compose-without-neo4j-m1.quickstart.yml b/docker/quickstart/docker-compose-without-neo4j-m1.quickstart.yml

index e87f55c0086c81..942a7b1f4c952c 100644

--- a/docker/quickstart/docker-compose-without-neo4j-m1.quickstart.yml

+++ b/docker/quickstart/docker-compose-without-neo4j-m1.quickstart.yml

@@ -33,6 +33,16 @@ services:

- DATAHUB_SYSTEM_CLIENT_ID=__datahub_system

- DATAHUB_SYSTEM_CLIENT_SECRET=JohnSnowKnowsNothing

- KAFKA_PROPERTIES_SECURITY_PROTOCOL=PLAINTEXT

+ - DATAHUB_ACTIONS_SLACK_ENABLED

+ - DATAHUB_ACTIONS_SLACK_DATAHUB_BASE_URL

+ - DATAHUB_ACTIONS_SLACK_BOT_TOKEN

+ - DATAHUB_ACTIONS_SLACK_SIGNING_SECRET

+ - DATAHUB_ACTIONS_SLACK_CHANNEL

+ - DATAHUB_ACTIONS_SLACK_SUPPRESS_SYSTEM_ACTIVITY

+ - DATAHUB_ACTIONS_TEAMS_ENABLED

+ - DATAHUB_ACTIONS_TEAMS_DATAHUB_BASE_URL

+ - DATAHUB_ACTIONS_TEAMS_WEBHOOK_URL

+ - DATAHUB_ACTIONS_TEAMS_SUPPRESS_SYSTEM_ACTIVITY

hostname: actions

image: acryldata/datahub-actions:${ACTIONS_VERSION:-head}

restart: on-failure:5

diff --git a/docker/quickstart/docker-compose-without-neo4j.quickstart.yml b/docker/quickstart/docker-compose-without-neo4j.quickstart.yml

index e6f6b73396de39..7917b845c91d54 100644

--- a/docker/quickstart/docker-compose-without-neo4j.quickstart.yml

+++ b/docker/quickstart/docker-compose-without-neo4j.quickstart.yml

@@ -33,6 +33,16 @@ services:

- DATAHUB_SYSTEM_CLIENT_ID=__datahub_system

- DATAHUB_SYSTEM_CLIENT_SECRET=JohnSnowKnowsNothing

- KAFKA_PROPERTIES_SECURITY_PROTOCOL=PLAINTEXT

+ - DATAHUB_ACTIONS_SLACK_ENABLED

+ - DATAHUB_ACTIONS_SLACK_DATAHUB_BASE_URL

+ - DATAHUB_ACTIONS_SLACK_BOT_TOKEN

+ - DATAHUB_ACTIONS_SLACK_SIGNING_SECRET

+ - DATAHUB_ACTIONS_SLACK_CHANNEL

+ - DATAHUB_ACTIONS_SLACK_SUPPRESS_SYSTEM_ACTIVITY

+ - DATAHUB_ACTIONS_TEAMS_ENABLED

+ - DATAHUB_ACTIONS_TEAMS_DATAHUB_BASE_URL

+ - DATAHUB_ACTIONS_TEAMS_WEBHOOK_URL

+ - DATAHUB_ACTIONS_TEAMS_SUPPRESS_SYSTEM_ACTIVITY

hostname: actions

image: acryldata/datahub-actions:${ACTIONS_VERSION:-head}

restart: on-failure:5

diff --git a/docker/quickstart/docker-compose.quickstart.yml b/docker/quickstart/docker-compose.quickstart.yml

index 486740bcf418ad..3f6bd5a348c326 100644

--- a/docker/quickstart/docker-compose.quickstart.yml

+++ b/docker/quickstart/docker-compose.quickstart.yml

@@ -35,6 +35,16 @@ services:

- DATAHUB_SYSTEM_CLIENT_ID=__datahub_system

- DATAHUB_SYSTEM_CLIENT_SECRET=JohnSnowKnowsNothing

- KAFKA_PROPERTIES_SECURITY_PROTOCOL=PLAINTEXT

+ - DATAHUB_ACTIONS_SLACK_ENABLED

+ - DATAHUB_ACTIONS_SLACK_DATAHUB_BASE_URL

+ - DATAHUB_ACTIONS_SLACK_BOT_TOKEN

+ - DATAHUB_ACTIONS_SLACK_SIGNING_SECRET

+ - DATAHUB_ACTIONS_SLACK_CHANNEL

+ - DATAHUB_ACTIONS_SLACK_SUPPRESS_SYSTEM_ACTIVITY

+ - DATAHUB_ACTIONS_TEAMS_ENABLED

+ - DATAHUB_ACTIONS_TEAMS_DATAHUB_BASE_URL

+ - DATAHUB_ACTIONS_TEAMS_WEBHOOK_URL

+ - DATAHUB_ACTIONS_TEAMS_SUPPRESS_SYSTEM_ACTIVITY

hostname: actions

image: acryldata/datahub-actions:${ACTIONS_VERSION:-head}

restart: on-failure:5

diff --git a/docker/quickstart/generate_docker_quickstart.py b/docker/quickstart/generate_docker_quickstart.py

index 3a54d8c21155dd..4888adda2d0382 100644

--- a/docker/quickstart/generate_docker_quickstart.py

+++ b/docker/quickstart/generate_docker_quickstart.py

@@ -1,11 +1,11 @@

import os

+from collections import OrderedDict

+from collections.abc import Mapping

+

import click

import yaml

-from collections.abc import Mapping

from dotenv import dotenv_values

from yaml import Loader

-from collections import OrderedDict

-

# Generates a merged docker-compose file with env variables inlined.

# Usage: python3 docker_compose_cli_gen.py ../docker-compose.yml ../docker-compose.override.yml ../docker-compose-gen.yml

@@ -54,7 +54,10 @@ def modify_docker_config(base_path, docker_yaml_config):

# 5. Append to an "environment" block to YAML

for key, value in env_vars.items():

- service["environment"].append(f"{key}={value}")

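+ if value is not None:

+ service["environment"].append(f"{key}={value}")

+ else:

+ # dotenv_values() returns None for keys declared without a value;

+ # emit them bare so docker-compose passes the value through from the host.

+ service["environment"].append(key)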

# 6. Delete the "env_file" value

del service["env_file"]
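The `generate_docker_quickstart.py` change matters because `dotenv_values()` maps a key declared without `=` (such as `DATAHUB_ACTIONS_SLACK_ENABLED` in `docker.env`) to `None`, and the previous loop would have rendered it as the literal string `KEY=None`. A minimal sketch of the new loop, pulled out into a hypothetical helper `to_compose_environment` for illustration:

```python
from typing import Dict, List, Optional


def to_compose_environment(env_vars: Dict[str, Optional[str]]) -> List[str]:
    """Render env-file entries as a docker-compose "environment" list.

    Hypothetical helper mirroring the loop in modify_docker_config(): keys whose
    value is None are emitted bare so Compose passes them through from the host.
    """
    environment: List[str] = []
    for key, value in env_vars.items():
        if value is not None:
            environment.append(f"{key}={value}")
        else:
            # Bare key: Compose resolves it from the host environment at run time.
            environment.append(key)
    return environment


print(to_compose_environment({
    "KAFKA_PROPERTIES_SECURITY_PROTOCOL": "PLAINTEXT",
    "DATAHUB_ACTIONS_SLACK_ENABLED": None,
}))
# ['KAFKA_PROPERTIES_SECURITY_PROTOCOL=PLAINTEXT', 'DATAHUB_ACTIONS_SLACK_ENABLED']
```

This is why the generated quickstart files list entries such as `- DATAHUB_ACTIONS_SLACK_ENABLED` without a value: Compose fills them in from the shell that runs it.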

diff --git a/docs-website/src/pages/_components/Logos/index.js b/docs-website/src/pages/_components/Logos/index.js

index da8484fec7eb72..0046cb6094288a 100644

--- a/docs-website/src/pages/_components/Logos/index.js

+++ b/docs-website/src/pages/_components/Logos/index.js

@@ -151,6 +151,11 @@ const companiesByIndustry = [

{

name: "And More",

companies: [

+ {

+ name: "Wikimedia Foundation",

+ imageUrl: "/img/logos/companies/wikimedia-foundation.png",

+ imageSize: "medium",

+ },

{

name: "Cabify",

imageUrl: "/img/logos/companies/cabify.png",

diff --git a/docs-website/static/img/logos/companies/wikimedia-foundation.png b/docs-website/static/img/logos/companies/wikimedia-foundation.png

new file mode 100644

index 00000000000000..c4119fab23be1d

Binary files /dev/null and b/docs-website/static/img/logos/companies/wikimedia-foundation.png differ

diff --git a/docs/actions/README.md b/docs/actions/README.md

index fa0c6cb4b71efe..23596ec67514e5 100644

--- a/docs/actions/README.md

+++ b/docs/actions/README.md

@@ -203,6 +203,8 @@ Some pre-included Actions include

- [Hello World](actions/hello_world.md)

- [Executor](actions/executor.md)

+- [Slack](actions/slack.md)

+- [Microsoft Teams](actions/teams.md)

## Development

diff --git a/docs/actions/actions/slack.md b/docs/actions/actions/slack.md

new file mode 100644

index 00000000000000..6416fdb2665538

--- /dev/null

+++ b/docs/actions/actions/slack.md

@@ -0,0 +1,282 @@

+import FeatureAvailability from '@site/src/components/FeatureAvailability';

+

+# Slack

+

+

+

+


+

+| | |

+| --- | --- |

+| **Status** |  |

+| **Version Requirements** |  |

+

+

+## Overview

+

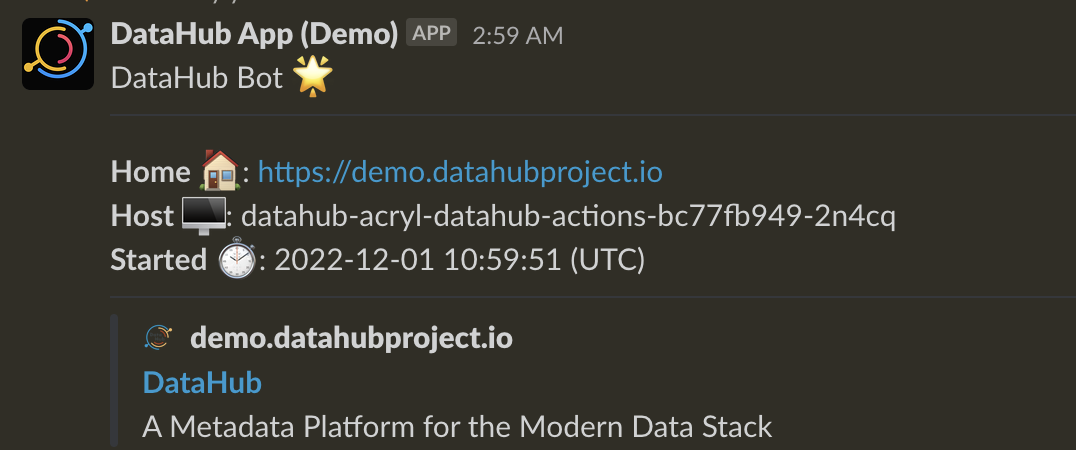

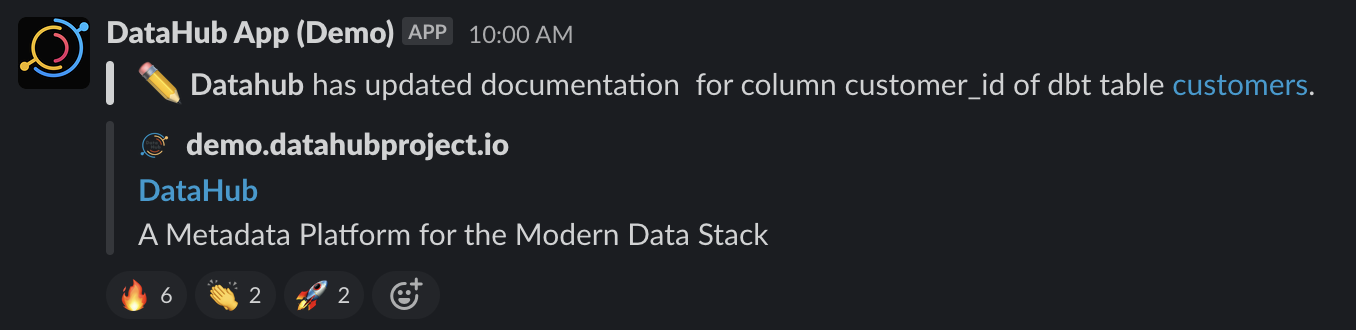

+This Action integrates DataHub with Slack to send notifications to a configured Slack channel in your workspace.

+

+### Capabilities

+

+- Sending notifications of important events to a Slack channel

+ - Adding or removing a tag from an entity (dataset, dashboard, etc.)
+ - Updating documentation at the entity or field (column) level
+ - Adding or removing ownership from an entity (dataset, dashboard, etc.)
+ - Creating a Domain
+ - And many more

+

+### User Experience

+

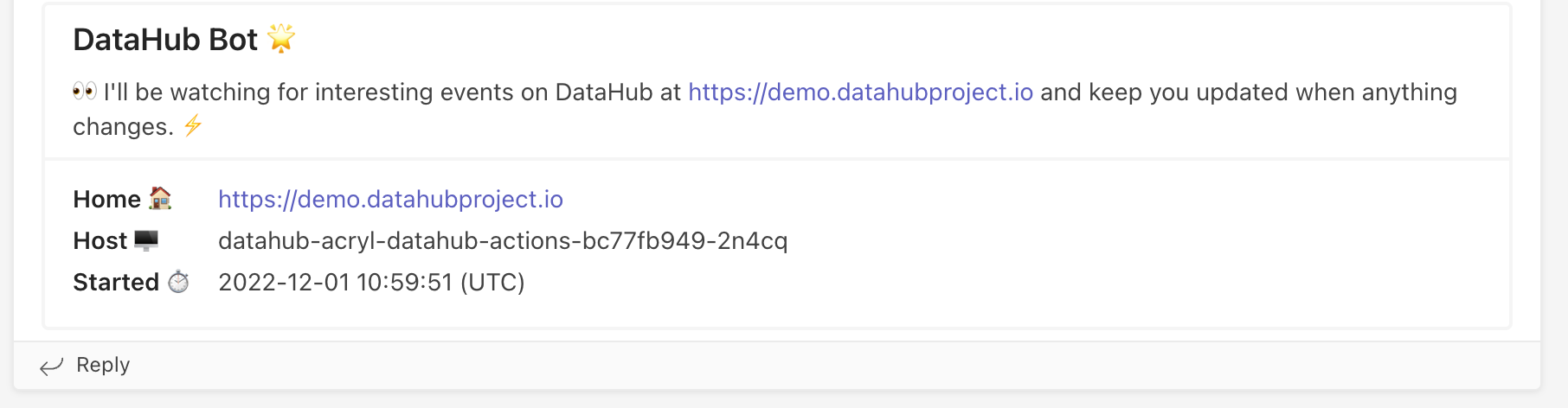

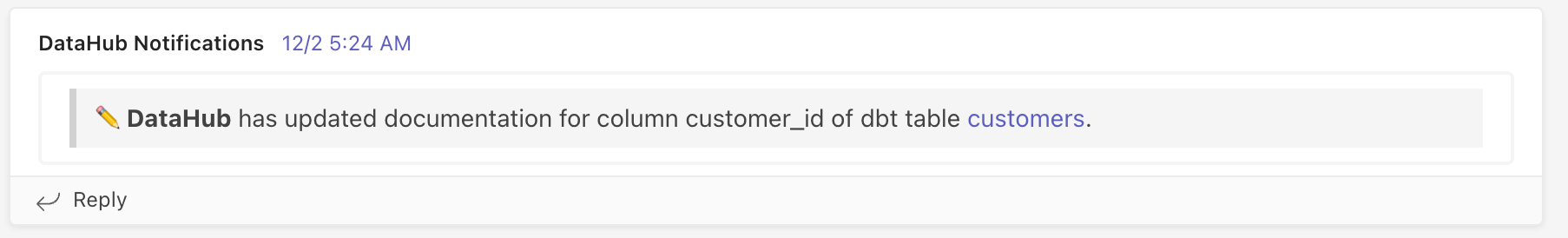

+On startup, the action will produce a welcome message that looks like the one below.

+

+

+

+On each event, the action will produce a notification message that looks like the one below.

+

+

+Watch the Town Hall demo to see this in action:
+[DataHub Town Hall demo on YouTube](https://www.youtube.com/watch?v=BlCLhG8lGoY&t=2998s)

+

+### Supported Events

+

+- `EntityChangeEvent_v1`

+- Currently, the `MetadataChangeLog_v1` event is **not** processed by the Action.

+

+## Action Quickstart

+

+### Prerequisites

+

+Ensure that you have configured the Slack App in your Slack workspace.

+

+#### Install the DataHub Slack App into your Slack workspace

+

+The following steps should be performed by a Slack Workspace Admin.

+- Navigate to https://api.slack.com/apps/
+- Click **Create New App**
+- Use the “From an app manifest” option
+- Select your workspace
+- Paste the following manifest in YAML format. We suggest changing `name` and `display_name` to `DataHub App YOUR_TEAM_NAME`, but this is not required. This name will show up in your Slack workspace.

+```yml
+display_information:
+  name: DataHub App
+  description: An app to integrate DataHub with Slack
+  background_color: "#000000"
+features:
+  bot_user:
+    display_name: DataHub App
+    always_online: false
+oauth_config:
+  scopes:
+    bot:
+      - channels:history
+      - channels:read
+      - chat:write
+      - commands
+      - groups:read
+      - im:read
+      - mpim:read
+      - team:read
+      - users:read
+      - users:read.email
+settings:
+  org_deploy_enabled: false
+  socket_mode_enabled: false
+  token_rotation_enabled: false
+```

+

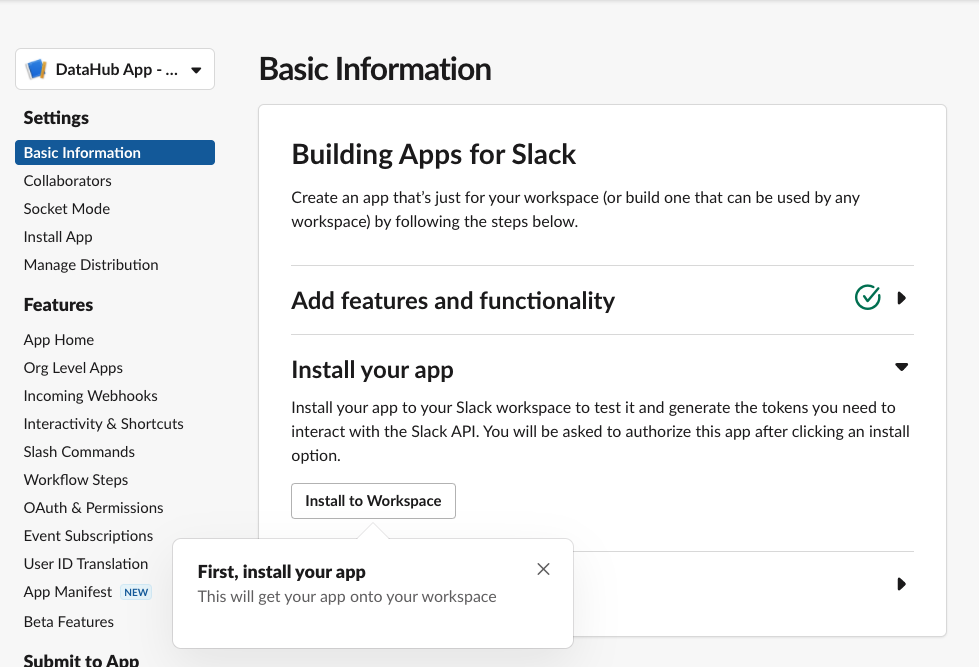

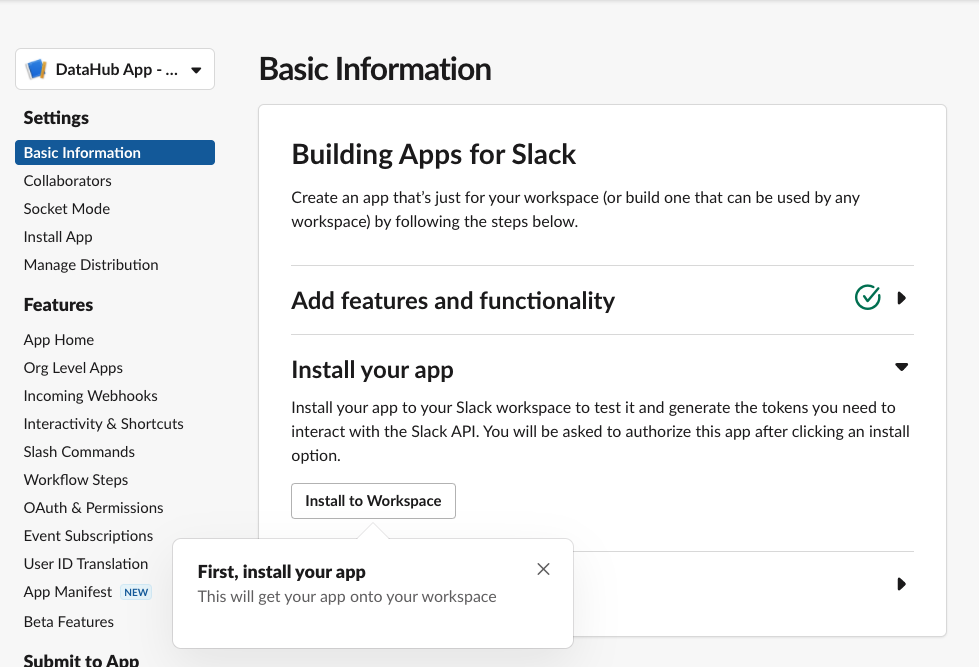

+- Confirm you see the Basic Information Tab

+

+

+

+- Click **Install to Workspace**

+- It will show you the permissions the Slack App is requesting, what they mean, and a default channel to which the app will be added
+ - Note that the Slack App can only post in channels that it has been added to. Slack's authentication screen also makes this clear.

+- Select the channel you'd like notifications to go to and click **Allow**

+- Go to the DataHub App page

+ - You can find your workspace's list of apps at https://api.slack.com/apps/

+

+#### Getting Credentials and Configuration

+

+Now that you've created your app and installed it in your workspace, you need a few pieces of information before you can activate your Slack action.

+

+#### 1. The Signing Secret

+

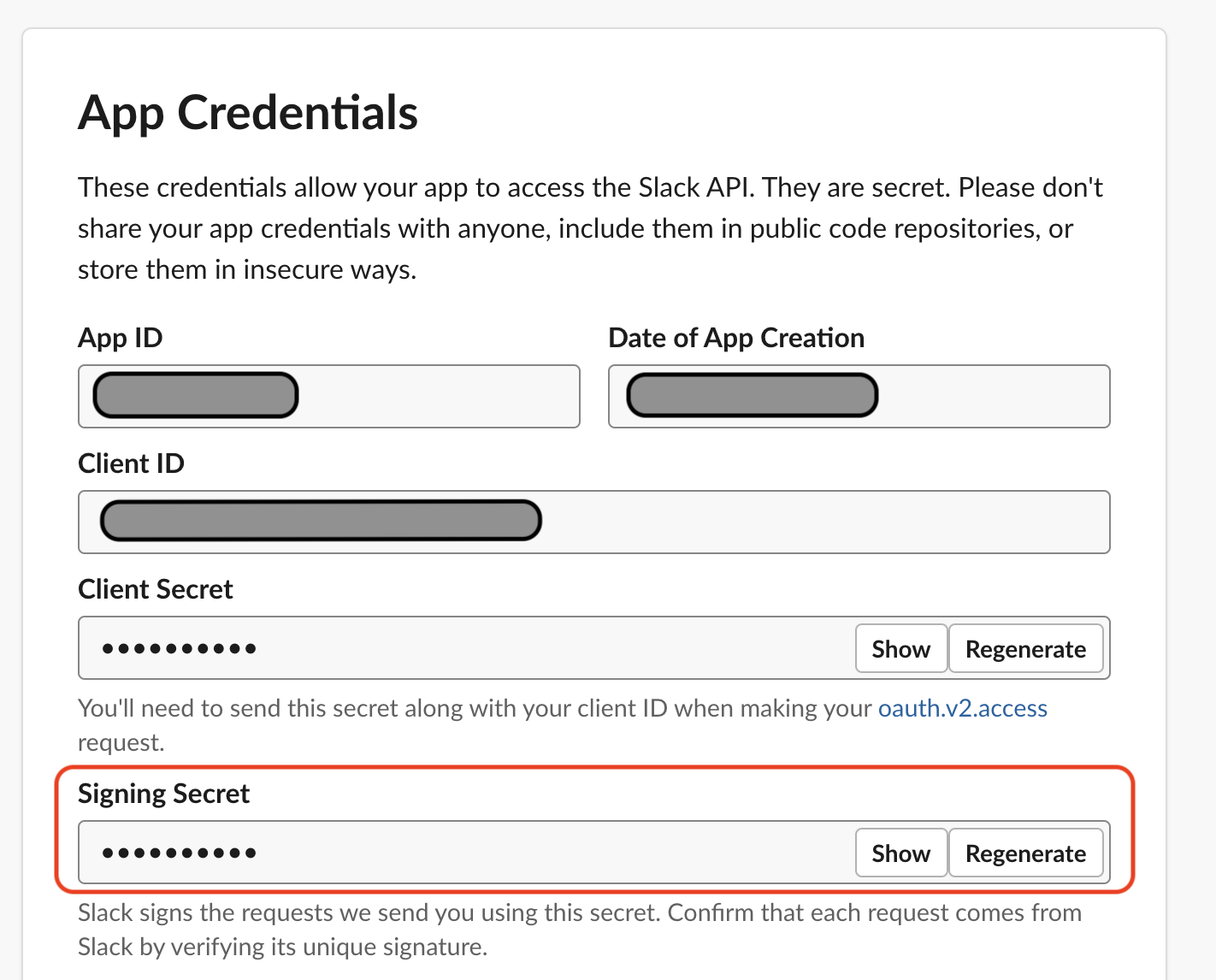

+On your app's Basic Information page, you will see an App Credentials area. Take note of the Signing Secret; you will need it later.

+

+

+

+

+#### 2. The Bot Token

+

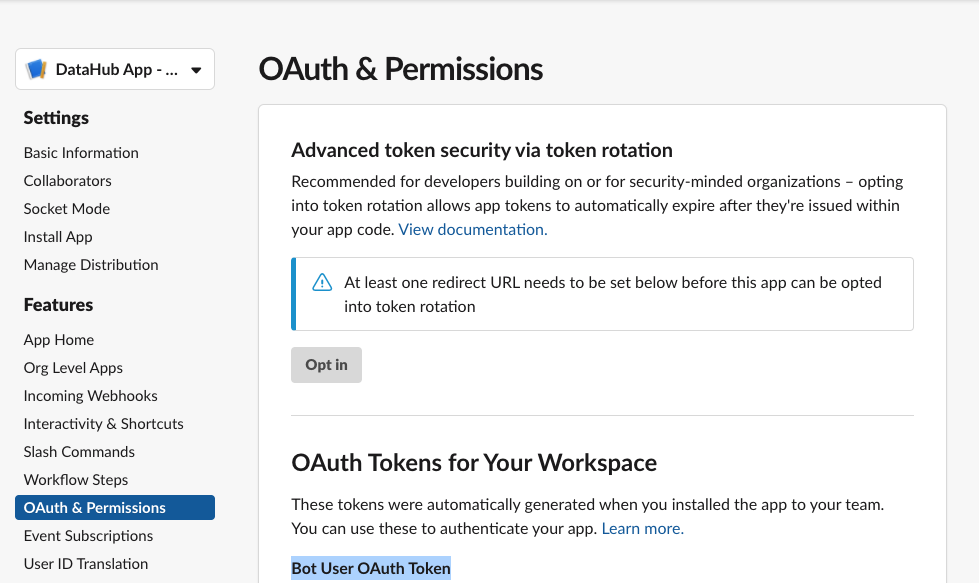

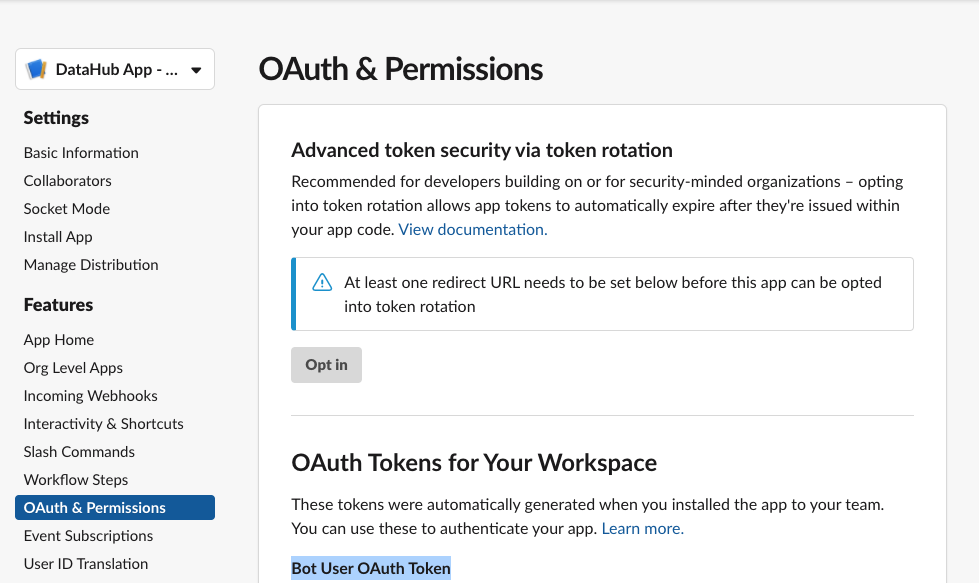

+Navigate to the **OAuth & Permissions** Tab

+

+

+

+Here you'll find a “Bot User OAuth Token” which DataHub will need to communicate with your Slack workspace through the bot.

+

+#### 3. The Slack Channel

+

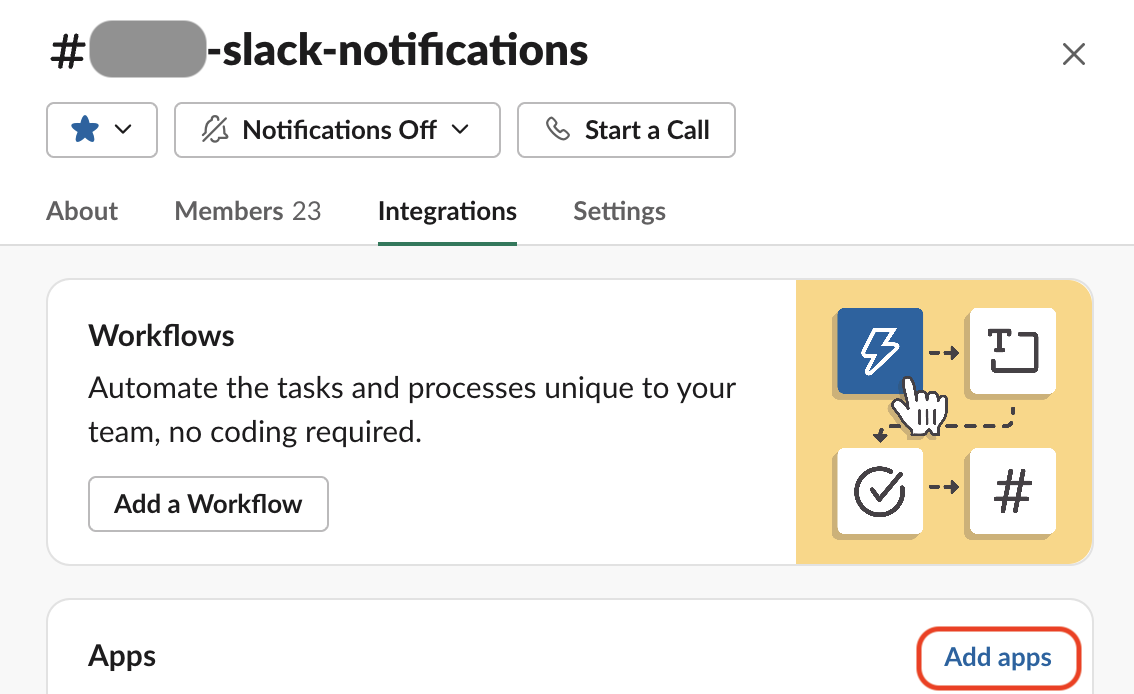

+Finally, decide which Slack channel the notifications should go to. You might create a dedicated channel such as #datahub-notifications or #data-notifications, or reuse a channel where important notifications about datasets and pipelines are already routed. Once you have chosen a channel, make sure to add the app to it.

+

+

+

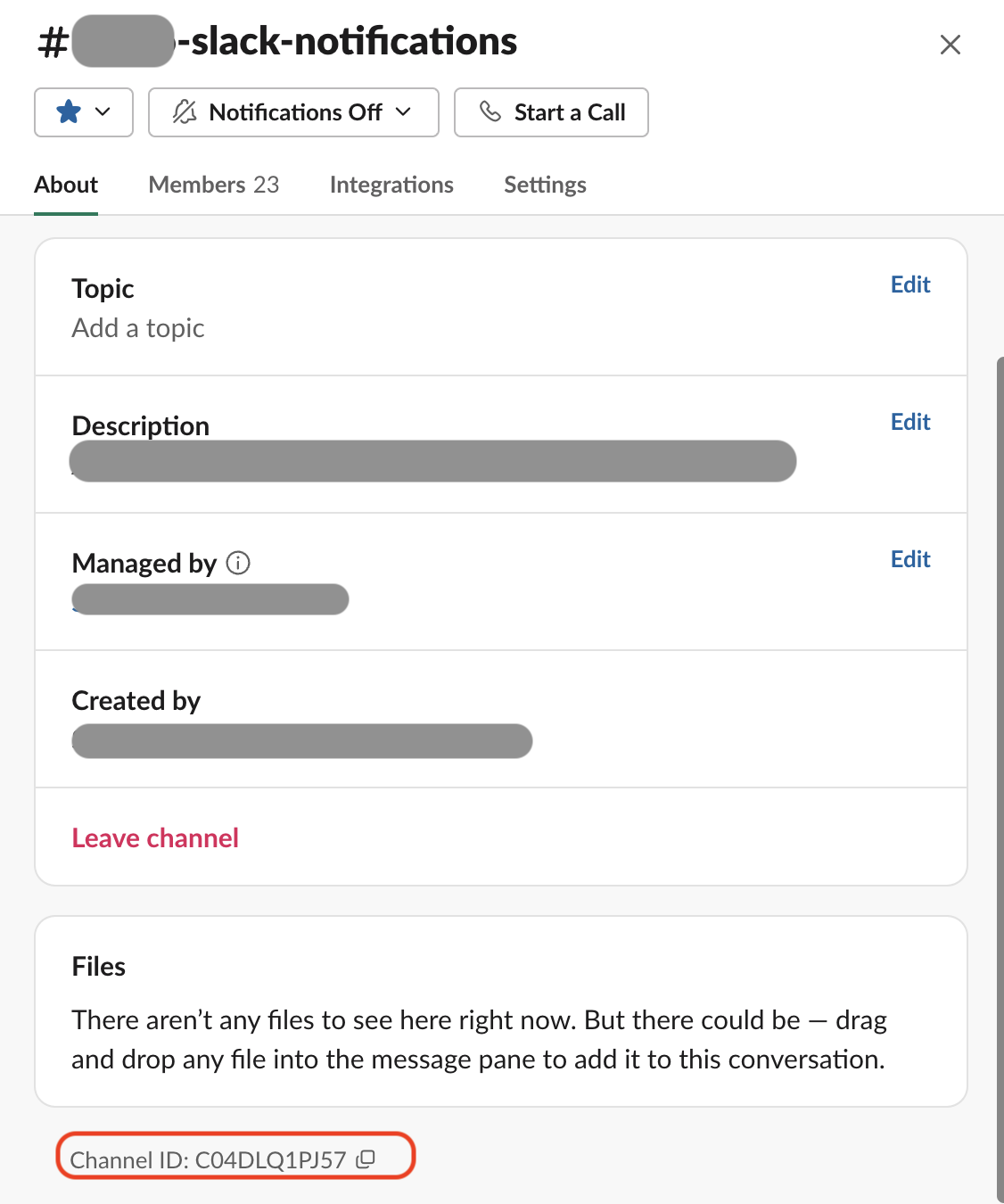

+Next, find the channel ID for this Slack channel. It is listed at the very bottom of the channel's About section.

+

+

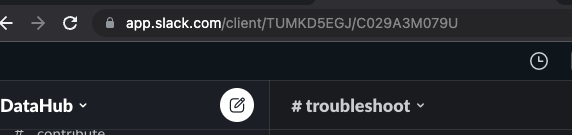

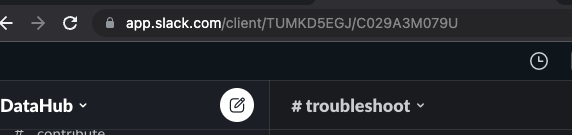

+Alternatively, if you are using Slack in a browser, you can read the ID from the URL, e.g. for the troubleshoot channel in the OSS DataHub Slack:

+

+

+

+- Notice `TUMKD5EGJ/C029A3M079U` in the URL

+ - Channel ID = `C029A3M079U` from above
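Reading the ID out of the URL can also be scripted. The sketch below assumes the browser URL has the shape `https://app.slack.com/client/<TEAM_ID>/<CHANNEL_ID>`, matching the `TUMKD5EGJ/C029A3M079U` fragment above; `channel_id_from_url` is a hypothetical helper, not part of DataHub or Slack's tooling.

```python
from urllib.parse import urlparse


def channel_id_from_url(url: str) -> str:
    """Extract the channel ID from a Slack web-client URL (assumed layout).

    Expected path: /client/<TEAM_ID>/<CHANNEL_ID>[/...]
    """
    segments = [s for s in urlparse(url).path.split("/") if s]
    if len(segments) < 3 or segments[0] != "client":
        raise ValueError(f"Unrecognized Slack client URL: {url}")
    # segments[1] is the team (workspace) ID; segments[2] is the channel ID.
    return segments[2]


print(channel_id_from_url("https://app.slack.com/client/TUMKD5EGJ/C029A3M079U"))
# C029A3M079U
```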

+

+

+In the next steps, we'll show you how to configure the Slack Action based on the credentials and configuration values that you have collected.

+

+### Installation Instructions (Deployment specific)

+

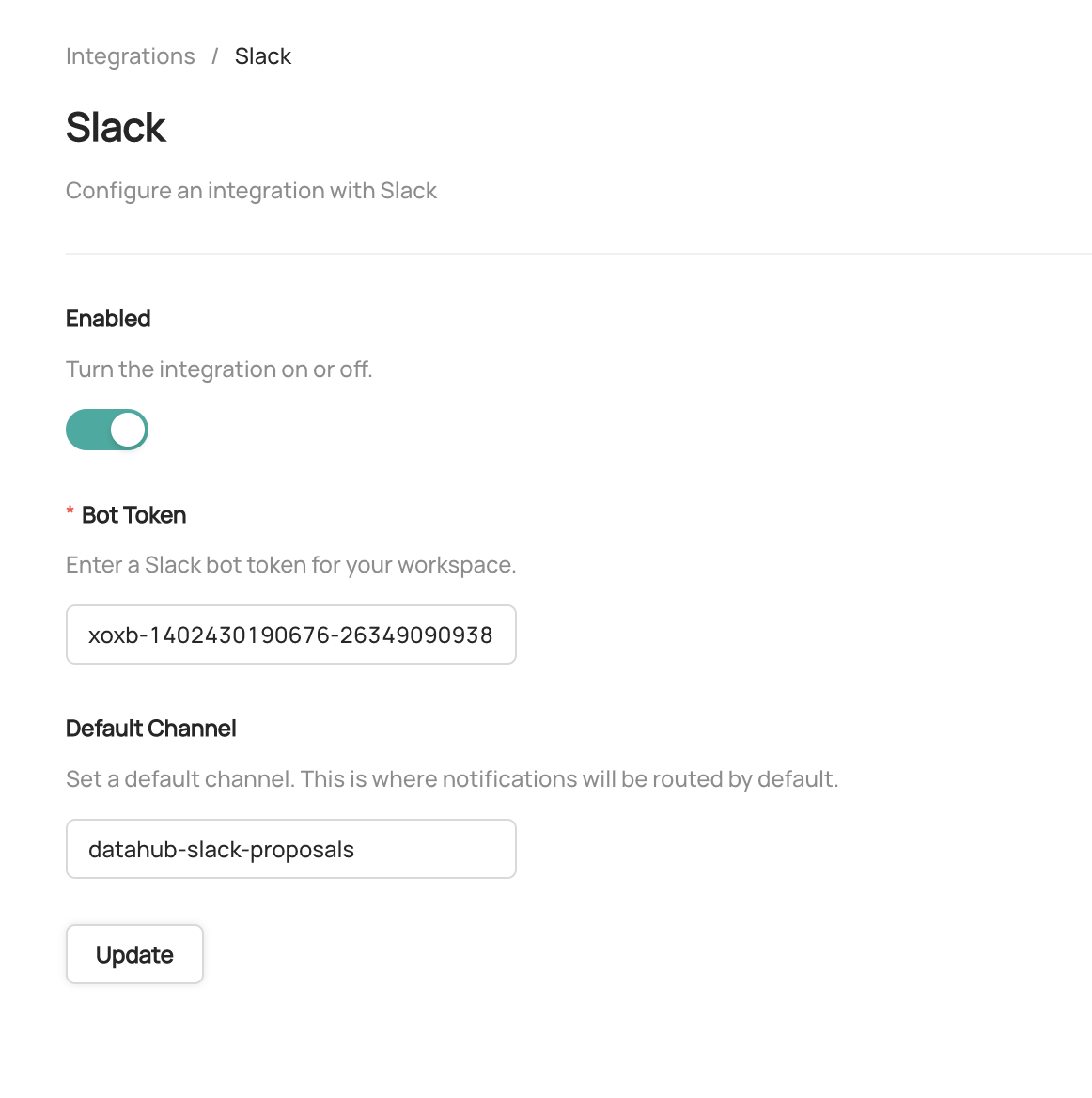

+#### Managed DataHub

+

+Head over to the [Configuring Notifications](../../managed-datahub/saas-slack-setup.md#configuring-notifications) section in the Managed DataHub guide to configure Slack notifications for your Managed DataHub instance.

+

+

+#### Quickstart

+

+If you are running DataHub using the docker quickstart option, there are no additional software installation steps. The `datahub-actions` container comes pre-installed with the Slack action.

+

+All you need to do is export a few environment variables to activate and configure the integration. See below for the list of environment variables to export.

+

+| Env Variable | Required for Integration | Purpose |

+| --- | --- | --- |

+| DATAHUB_ACTIONS_SLACK_ENABLED | ✅ | Set to "true" to enable the Slack action |

+| DATAHUB_ACTIONS_SLACK_SIGNING_SECRET | ✅ | Set to the [Slack Signing Secret](#1-the-signing-secret) that you configured in the pre-requisites step above |

+| DATAHUB_ACTIONS_SLACK_BOT_TOKEN | ✅ | Set to the [Bot User OAuth Token](#2-the-bot-token) that you configured in the pre-requisites step above |

+| DATAHUB_ACTIONS_SLACK_CHANNEL | ✅ | Set to the [Slack Channel ID](#3-the-slack-channel) that you want the action to send messages to |

+| DATAHUB_ACTIONS_DATAHUB_BASE_URL | ❌ | Defaults to "http://localhost:9002". Set to the location where your DataHub UI is running. On a local quickstart this is usually "http://localhost:9002", so you shouldn't need to modify this |

+

+:::note

+

+If this is the first time you are enabling the integration, you will have to restart the `datahub-actions` Docker container after exporting these environment variables. The simplest way to do this is via the Docker Desktop UI, or by running `datahub docker quickstart --stop && datahub docker quickstart` to restart the whole instance.

+

+:::

+

+

+For example:

+```shell

+export DATAHUB_ACTIONS_SLACK_ENABLED=true

+export DATAHUB_ACTIONS_SLACK_SIGNING_SECRET=

+....

+export DATAHUB_ACTIONS_SLACK_CHANNEL=

+

+datahub docker quickstart --stop && datahub docker quickstart

+```
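Because the variables are only picked up when the `datahub-actions` container starts, it can help to check them before restarting. This is a minimal sketch, not part of the DataHub CLI: `slack_env_problems` is a hypothetical helper that checks the four variables marked as required in the table above and flags an `ENABLED` value other than `"true"`.

```python
import os
from typing import List, Mapping

# The four variables marked as required in the quickstart table.
REQUIRED_SLACK_VARS = (
    "DATAHUB_ACTIONS_SLACK_ENABLED",
    "DATAHUB_ACTIONS_SLACK_SIGNING_SECRET",
    "DATAHUB_ACTIONS_SLACK_BOT_TOKEN",
    "DATAHUB_ACTIONS_SLACK_CHANNEL",
)


def slack_env_problems(env: Mapping[str, str]) -> List[str]:
    """Return human-readable problems found in the given environment."""
    problems = [f"{name} is not set" for name in REQUIRED_SLACK_VARS if not env.get(name)]
    enabled = env.get("DATAHUB_ACTIONS_SLACK_ENABLED", "")
    if enabled and enabled.lower() != "true":
        problems.append('DATAHUB_ACTIONS_SLACK_ENABLED should be "true"')
    return problems


if __name__ == "__main__":
    # An empty list means no obvious gaps before restarting the quickstart.
    print(slack_env_problems(os.environ))
```

Run it from the same shell in which you exported the variables, since a child process only sees exported values.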

+

+#### k8s / helm

+

+Similar to the quickstart scenario, there are no specific software installation steps. The `datahub-actions` container comes pre-installed with the Slack action. You just need to export a few environment variables and make them available to the `datahub-actions` container to activate and configure the integration. See below for the list of environment variables to export.

+

+| Env Variable | Required for Integration | Purpose |

+| --- | --- | --- |

+| DATAHUB_ACTIONS_SLACK_ENABLED | ✅ | Set to "true" to enable the Slack action |

+| DATAHUB_ACTIONS_SLACK_SIGNING_SECRET | ✅ | Set to the [Slack Signing Secret](#1-the-signing-secret) that you configured in the pre-requisites step above |

+| DATAHUB_ACTIONS_SLACK_BOT_TOKEN | ✅ | Set to the [Bot User OAuth Token](#2-the-bot-token) that you configured in the pre-requisites step above |

+| DATAHUB_ACTIONS_SLACK_CHANNEL | ✅ | Set to the [Slack Channel ID](#3-the-slack-channel) that you want the action to send messages to |

+| DATAHUB_ACTIONS_DATAHUB_BASE_URL | ✅| Set to the location where your DataHub UI is running. For example, if your DataHub UI is hosted at "https://datahub.my-company.biz", set this to "https://datahub.my-company.biz"|

+

+

+#### Bare Metal - CLI or Python-based

+

+If you are using the `datahub-actions` library directly from Python, or the `datahub-actions` CLI directly, you first need to install the `slack` action plugin in your Python virtualenv.

+

+```

+pip install "datahub-actions[slack]"

+```

+

+Then run the action with a configuration file that you have modified to capture your credentials and configuration.

+

+##### Sample Slack Action Configuration File

+

+```yml
+name: datahub_slack_action
+enabled: true
+source:
+  type: "kafka"
+  config:
+    connection:
+      bootstrap: ${KAFKA_BOOTSTRAP_SERVER:-localhost:9092}
+      schema_registry_url: ${SCHEMA_REGISTRY_URL:-http://localhost:8081}
+    topic_routes:
+      mcl: ${METADATA_CHANGE_LOG_VERSIONED_TOPIC_NAME:-MetadataChangeLog_Versioned_v1}
+      pe: ${PLATFORM_EVENT_TOPIC_NAME:-PlatformEvent_v1}
+
+# 3a. Optional: Filter to run on events (map)
+# filter:
+#   event_type:
+#   event:
+#     # Filter event fields by exact-match
+#
+
+# 3b. Optional: Custom Transformers to run on events (array)
+# transform:
+#   - type:
+#     config:
+#       # Transformer-specific configs (map)
+
+action:
+  type: slack
+  config:
+    # Action-specific configs (map)
+    base_url: ${DATAHUB_ACTIONS_SLACK_DATAHUB_BASE_URL:-http://localhost:9002}
+    bot_token: ${DATAHUB_ACTIONS_SLACK_BOT_TOKEN}
+    signing_secret: ${DATAHUB_ACTIONS_SLACK_SIGNING_SECRET}
+    default_channel: ${DATAHUB_ACTIONS_SLACK_CHANNEL}
+    suppress_system_activity: ${DATAHUB_ACTIONS_SLACK_SUPPRESS_SYSTEM_ACTIVITY:-true}
+
+datahub:
+  server: "http://${DATAHUB_GMS_HOST:-localhost}:${DATAHUB_GMS_PORT:-8080}"
+
+```

+
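The sample file relies on `${NAME:-default}` references. Assuming the actions framework resolves them with the usual shell semantics (an unset or empty variable falls back to the text after `:-`), the sketch below shows how the values in the file would come out. `expand` is a hypothetical illustration, not the library's implementation; in this sketch a reference without `:-` resolves to an empty string, which is a reminder that `bot_token`, `signing_secret` and `default_channel` have no fallback and must be set.

```python
import re
from typing import Mapping

# Matches ${NAME} and ${NAME:-default}; the default may not contain "}".
_VAR = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)(?::-([^}]*))?\}")


def expand(value: str, env: Mapping[str, str]) -> str:
    """Shell-style expansion of ${NAME} / ${NAME:-default} (illustrative only)."""

    def substitute(match: "re.Match[str]") -> str:
        name, default = match.group(1), match.group(2)
        resolved = env.get(name)
        if resolved:  # set and non-empty, as with the shell's ":-"
            return resolved
        return default if default is not None else ""

    return _VAR.sub(substitute, value)


print(expand("${DATAHUB_ACTIONS_SLACK_SUPPRESS_SYSTEM_ACTIVITY:-true}", {}))
# true
```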

+##### Slack Action Configuration Parameters

+

+| Field | Required | Default | Description |

+| --- | --- | --- | --- |

+| `base_url` | ❌ | `http://localhost:9002` | Set to the location where your DataHub UI is running. |
+| `signing_secret` | ✅ | | Set to the [Slack Signing Secret](#1-the-signing-secret) that you configured in the prerequisites step above |
+| `bot_token` | ✅ | | Set to the [Bot User OAuth Token](#2-the-bot-token) that you configured in the prerequisites step above |
+| `default_channel` | ✅ | | Set to the [Slack Channel ID](#3-the-slack-channel) that you want the action to send messages to |
+| `suppress_system_activity` | ❌ | `True` | Set to `False` if you want to receive low-level system activity events, e.g. when datasets are ingested. Note: this currently produces a very noisy stream of Slack notifications, so changing it is not recommended. |

+

+

+## Troubleshooting

+

+If things are configured correctly, you should see logs on the `datahub-actions` container that indicate success in enabling and running the Slack action.

+

+```shell

+docker logs datahub-datahub-actions-1

+

+...

+[2022-12-04 07:07:53,804] INFO {datahub_actions.plugin.action.slack.slack:96} - Slack notification action configured with bot_token=SecretStr('**********') signing_secret=SecretStr('**********') default_channel='C04CZUSSR5X' base_url='http://localhost:9002' suppress_system_activity=True

+[2022-12-04 07:07:54,506] WARNING {datahub_actions.cli.actions:103} - Skipping pipeline datahub_teams_action as it is not enabled

+[2022-12-04 07:07:54,506] INFO {datahub_actions.cli.actions:119} - Action Pipeline with name 'ingestion_executor' is now running.

+[2022-12-04 07:07:54,507] INFO {datahub_actions.cli.actions:119} - Action Pipeline with name 'datahub_slack_action' is now running.

+...

+```

+

+

+If the Slack action is not enabled, you will see messages indicating that.
+For example, the following logs show that neither the Slack nor the Teams action was enabled.

+

+```shell

+docker logs datahub-datahub-actions-1

+

+....

+No user action configurations found. Not starting user actions.

+[2022-12-04 06:45:27,509] INFO {datahub_actions.cli.actions:76} - DataHub Actions version: unavailable (installed editable via git)

+[2022-12-04 06:45:27,647] WARNING {datahub_actions.cli.actions:103} - Skipping pipeline datahub_slack_action as it is not enabled

+[2022-12-04 06:45:27,649] WARNING {datahub_actions.cli.actions:103} - Skipping pipeline datahub_teams_action as it is not enabled

+[2022-12-04 06:45:27,649] INFO {datahub_actions.cli.actions:119} - Action Pipeline with name 'ingestion_executor' is now running.

+...

+

+```

\ No newline at end of file
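Rather than reading through the output by eye, you can scan it for the two log messages shown in the Slack troubleshooting samples. The sketch assumes the message formats in those samples (`Action Pipeline with name '...' is now running` and `Skipping pipeline ... as it is not enabled`), which may change between releases; `pipeline_status` is a hypothetical helper.

```python
import re
from typing import Dict, Iterable

_RUNNING = re.compile(r"Action Pipeline with name '([^']+)' is now running")
_SKIPPED = re.compile(r"Skipping pipeline (\S+) as it is not enabled")


def pipeline_status(log_lines: Iterable[str]) -> Dict[str, str]:
    """Map pipeline name -> "running" or "skipped" from datahub-actions logs."""
    status: Dict[str, str] = {}
    for line in log_lines:
        running = _RUNNING.search(line)
        if running:
            status[running.group(1)] = "running"
            continue
        skipped = _SKIPPED.search(line)
        if skipped:
            status[skipped.group(1)] = "skipped"
    return status
```

For example, save the logs with `docker logs datahub-datahub-actions-1 > actions.log 2>&1` and call `pipeline_status(open("actions.log"))`; `datahub_slack_action` (or `datahub_teams_action`) should map to `"running"`.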

diff --git a/docs/actions/actions/teams.md b/docs/actions/actions/teams.md

new file mode 100644

index 00000000000000..55f0c72dff6403

--- /dev/null

+++ b/docs/actions/actions/teams.md

@@ -0,0 +1,184 @@

+import FeatureAvailability from '@site/src/components/FeatureAvailability';

+

+# Microsoft Teams

+

+

+

+| | |

+| --- | --- |

+| **Status** |  |

+| **Version Requirements** |  |

+

+## Overview

+

+This Action integrates DataHub with Microsoft Teams to send notifications to a configured Teams channel in your workspace.

+

+### Capabilities

+

+- Sending notifications of important events to a Teams channel

+ - Adding or removing a tag from an entity (dataset, dashboard, etc.)
+ - Updating documentation at the entity or field (column) level
+ - Adding or removing ownership from an entity (dataset, dashboard, etc.)
+ - Creating a Domain
+ - And many more

+

+### User Experience

+

+On startup, the action will produce a welcome message that looks like the one below.

+

+

+

+On each event, the action will produce a notification message that looks like the one below.

+

+

+Watch the Town Hall demo to see this in action:
+[DataHub Town Hall demo on YouTube](https://www.youtube.com/watch?v=BlCLhG8lGoY&t=2998s)

+

+

+### Supported Events

+

+- `EntityChangeEvent_v1`

+- Currently, the `MetadataChangeLog_v1` event is **not** processed by the Action.

+

+## Action Quickstart

+

+### Prerequisites

+

+Ensure that you have configured an incoming webhook in your Teams channel.

+

+Follow the guide [here](https://learn.microsoft.com/en-us/microsoftteams/platform/webhooks-and-connectors/how-to/add-incoming-webhook) to set it up.

+

+Take note of the incoming webhook URL, as you will need it to configure the Teams action.

+
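Before wiring the webhook into the action, you may want to confirm that it accepts messages. This sketch assumes the webhook accepts a JSON body with a single `text` field, which is how classic Teams incoming webhooks have worked; check the Microsoft guide linked above for your webhook type. `build_teams_test_request` is a hypothetical helper that only builds the request, so nothing is sent until you pass it to `urllib.request.urlopen`.

```python
import json
import urllib.request


def build_teams_test_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST carrying a simple text payload."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

To actually send the test message: `urllib.request.urlopen(build_teams_test_request(url, "Hello from DataHub"))`.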

+### Installation Instructions (Deployment specific)

+

+#### Quickstart

+

+If you are running DataHub using the docker quickstart option, there are no additional software installation steps. The `datahub-actions` container comes pre-installed with the Teams action.

+

+All you need to do is export a few environment variables to activate and configure the integration. See below for the list of environment variables to export.

+

+| Env Variable | Required for Integration | Purpose |

+| --- | --- | --- |

+| DATAHUB_ACTIONS_TEAMS_ENABLED | ✅ | Set to "true" to enable the Teams action |

+| DATAHUB_ACTIONS_TEAMS_WEBHOOK_URL | ✅ | Set to the incoming webhook url that you configured in the [pre-requisites step](#prerequisites) above |

+| DATAHUB_ACTIONS_DATAHUB_BASE_URL | ❌ | Defaults to "http://localhost:9002". Set to the location where your DataHub UI is running. On a local quickstart this is usually "http://localhost:9002", so you shouldn't need to modify this |

+

+:::note

+

+If this is the first time you are enabling the integration, you will have to restart the `datahub-actions` Docker container after exporting these environment variables. The simplest way to do this is via the Docker Desktop UI, or by running `datahub docker quickstart --stop && datahub docker quickstart` to restart the whole instance.

+

+:::

+

+

+For example:

+```shell

+export DATAHUB_ACTIONS_TEAMS_ENABLED=true

+export DATAHUB_ACTIONS_TEAMS_WEBHOOK_URL=

+

+datahub docker quickstart --stop && datahub docker quickstart

+```

+

+#### k8s / helm

+

+Similar to the quickstart scenario, there are no specific software installation steps. The `datahub-actions` container comes pre-installed with the Teams action. You just need to export a few environment variables and make them available to the `datahub-actions` container to activate and configure the integration. See below for the list of environment variables to export.

+

+| Env Variable | Required for Integration | Purpose |

+| --- | --- | --- |

+| DATAHUB_ACTIONS_TEAMS_ENABLED | ✅ | Set to "true" to enable the Teams action |

+| DATAHUB_ACTIONS_TEAMS_WEBHOOK_URL | ✅ | Set to the incoming webhook url that you configured in the [pre-requisites step](#prerequisites) above |

+| DATAHUB_ACTIONS_DATAHUB_BASE_URL | ✅| Set to the location where your DataHub UI is running. For example, if your DataHub UI is hosted at "https://datahub.my-company.biz", set this to "https://datahub.my-company.biz"|

+

+

+#### Bare Metal - CLI or Python-based

+

+If you are using the `datahub-actions` library directly from Python, or the `datahub-actions` CLI directly, you first need to install the `teams` action plugin in your Python virtualenv.

+

+```

+pip install "datahub-actions[teams]"

+```

+

+Then run the action with a configuration file that you have modified to capture your credentials and configuration.

+

+##### Sample Teams Action Configuration File

+

+```yml
+name: datahub_teams_action
+enabled: true
+source:
+  type: "kafka"
+  config:
+    connection:
+      bootstrap: ${KAFKA_BOOTSTRAP_SERVER:-localhost:9092}
+      schema_registry_url: ${SCHEMA_REGISTRY_URL:-http://localhost:8081}
+    topic_routes:
+      mcl: ${METADATA_CHANGE_LOG_VERSIONED_TOPIC_NAME:-MetadataChangeLog_Versioned_v1}
+      pe: ${PLATFORM_EVENT_TOPIC_NAME:-PlatformEvent_v1}
+
+# 3a. Optional: Filter to run on events (map)
+# filter:
+#   event_type:
+#   event:
+#     # Filter event fields by exact-match
+#
+
+# 3b. Optional: Custom Transformers to run on events (array)
+# transform:
+#   - type:
+#     config:
+#       # Transformer-specific configs (map)
+
+action:
+  type: teams
+  config:
+    # Action-specific configs (map)
+    base_url: ${DATAHUB_ACTIONS_TEAMS_DATAHUB_BASE_URL:-http://localhost:9002}
+    webhook_url: ${DATAHUB_ACTIONS_TEAMS_WEBHOOK_URL}
+    suppress_system_activity: ${DATAHUB_ACTIONS_TEAMS_SUPPRESS_SYSTEM_ACTIVITY:-true}
+
+datahub:
+  server: "http://${DATAHUB_GMS_HOST:-localhost}:${DATAHUB_GMS_PORT:-8080}"
+```

+

+##### Teams Action Configuration Parameters

+

+| Field | Required | Default | Description |

+| --- | --- | --- | --- |

+| `base_url` | ❌ | `http://localhost:9002` | Set to the location where your DataHub UI is running. |
+| `webhook_url` | ✅ | | Set to the incoming webhook URL that you configured in the [prerequisites step](#prerequisites) above |
+| `suppress_system_activity` | ❌ | `True` | Set to `False` if you want to receive low-level system activity events, e.g. when datasets are ingested. Note: this currently produces a very noisy stream of Teams notifications, so changing it is not recommended. |

+

+

+## Troubleshooting

+

+If things are configured correctly, you should see logs on the `datahub-actions` container that indicate success in enabling and running the Teams action.

+

+```shell

+docker logs datahub-datahub-actions-1

+

+...

+[2022-12-04 16:47:44,536] INFO {datahub_actions.cli.actions:76} - DataHub Actions version: unavailable (installed editable via git)

+[2022-12-04 16:47:44,565] WARNING {datahub_actions.cli.actions:103} - Skipping pipeline datahub_slack_action as it is not enabled

+[2022-12-04 16:47:44,581] INFO {datahub_actions.plugin.action.teams.teams:60} - Teams notification action configured with webhook_url=SecretStr('**********') base_url='http://localhost:9002' suppress_system_activity=True

+[2022-12-04 16:47:46,393] INFO {datahub_actions.cli.actions:119} - Action Pipeline with name 'ingestion_executor' is now running.

+[2022-12-04 16:47:46,393] INFO {datahub_actions.cli.actions:119} - Action Pipeline with name 'datahub_teams_action' is now running.

+...

+```

+

+

+If the Teams action is not enabled, you will see messages indicating that.
+For example, the following logs show that neither the Teams nor the Slack action was enabled.

+

+```shell

+docker logs datahub-datahub-actions-1

+

+....

+No user action configurations found. Not starting user actions.

+[2022-12-04 06:45:27,509] INFO {datahub_actions.cli.actions:76} - DataHub Actions version: unavailable (installed editable via git)

+[2022-12-04 06:45:27,647] WARNING {datahub_actions.cli.actions:103} - Skipping pipeline datahub_slack_action as it is not enabled

+[2022-12-04 06:45:27,649] WARNING {datahub_actions.cli.actions:103} - Skipping pipeline datahub_teams_action as it is not enabled

+[2022-12-04 06:45:27,649] INFO {datahub_actions.cli.actions:119} - Action Pipeline with name 'ingestion_executor' is now running.

+...

+

+```

+

diff --git a/docs/how/updating-datahub.md b/docs/how/updating-datahub.md

index 5c9f13a9c8879c..5b896bc41cbf51 100644

--- a/docs/how/updating-datahub.md

+++ b/docs/how/updating-datahub.md

@@ -12,6 +12,8 @@ This file documents any backwards-incompatible changes in DataHub and assists pe

### Other notable Changes

+- #6611 - Snowflake `schema_pattern` now accepts a pattern for the fully qualified schema name in the format `.` when the config `match_fully_qualified_names : True` is set. The current default, `match_fully_qualified_names: False`, exists only to maintain backward compatibility. The `match_fully_qualified_names` option will be deprecated in the future, and the default behavior will then assume `match_fully_qualified_names: True`.

+
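The practical effect of the flag can be modeled in a few lines. This is a hypothetical, simplified sketch rather than the Snowflake source's code: it assumes the fully qualified name is the database and schema names joined by a dot, and that the pattern is applied with `re.match`. It shows why a pattern written for bare schema names can stop matching once `match_fully_qualified_names` is turned on.

```python
import re


def schema_allowed(
    database: str,
    schema: str,
    allow_pattern: str,
    match_fully_qualified_names: bool,
) -> bool:
    """Hypothetical model of the schema allow-check described above."""
    # Assumption: the fully qualified name is "<database>.<schema>".
    candidate = f"{database}.{schema}" if match_fully_qualified_names else schema
    return re.match(allow_pattern, candidate) is not None


# A legacy pattern written for bare schema names...
print(schema_allowed("ANALYTICS", "PUBLIC", r"PUBLIC$", False))  # True
# ...no longer matches once the fully qualified name is used.
print(schema_allowed("ANALYTICS", "PUBLIC", r"PUBLIC$", True))  # False
```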

## 0.9.3

### Breaking Changes

diff --git a/docs/imgs/slack/slack_add_token.png b/docs/imgs/slack/slack_add_token.png

deleted file mode 100644

index 6a12dc545ec62c..00000000000000

Binary files a/docs/imgs/slack/slack_add_token.png and /dev/null differ

diff --git a/docs/imgs/slack/slack_basic_info.png b/docs/imgs/slack/slack_basic_info.png

deleted file mode 100644

index a01a1af370442d..00000000000000

Binary files a/docs/imgs/slack/slack_basic_info.png and /dev/null differ

diff --git a/docs/imgs/slack/slack_channel.png b/docs/imgs/slack/slack_channel.png

deleted file mode 100644

index 83645e00d724a4..00000000000000

Binary files a/docs/imgs/slack/slack_channel.png and /dev/null differ

diff --git a/docs/imgs/slack/slack_channel_url.png b/docs/imgs/slack/slack_channel_url.png

deleted file mode 100644

index 7715bf4a51fbe4..00000000000000

Binary files a/docs/imgs/slack/slack_channel_url.png and /dev/null differ

diff --git a/docs/imgs/slack/slack_oauth_and_permissions.png b/docs/imgs/slack/slack_oauth_and_permissions.png

deleted file mode 100644

index 87846e7897f6ed..00000000000000

Binary files a/docs/imgs/slack/slack_oauth_and_permissions.png and /dev/null differ

diff --git a/docs/imgs/slack/slack_user_id.png b/docs/imgs/slack/slack_user_id.png

deleted file mode 100644

index 02d84b326896c8..00000000000000

Binary files a/docs/imgs/slack/slack_user_id.png and /dev/null differ

diff --git a/docs/managed-datahub/saas-slack-setup.md b/docs/managed-datahub/saas-slack-setup.md

index 430f926c08daae..68f947f1717158 100644

--- a/docs/managed-datahub/saas-slack-setup.md

+++ b/docs/managed-datahub/saas-slack-setup.md

@@ -41,7 +41,7 @@ settings:

Confirm you see the Basic Information Tab

-

+

- Click **Install to Workspace**

- It will show you permissions the Slack App is asking for, what they mean and a default channel in which you want to add the slack app

@@ -54,7 +54,7 @@ Confirm you see the Basic Information Tab

- Go to **OAuth & Permissions** Tab

-

+

Here you'll find a “Bot User OAuth Token” which DataHub will need to communicate with your slack through the bot.

In the next steps, we'll show you how to configure the Slack Integration inside of Acryl DataHub.

@@ -71,13 +71,13 @@ To enable the integration with slack

- Enter a **Default Slack Channel** - this is where all notifications will be routed unless

- Click **Update** to save your settings

-

+

To enable and disable specific types of notifications, or configure custom routing for notifications, start by navigating to **Settings > Notifications**.

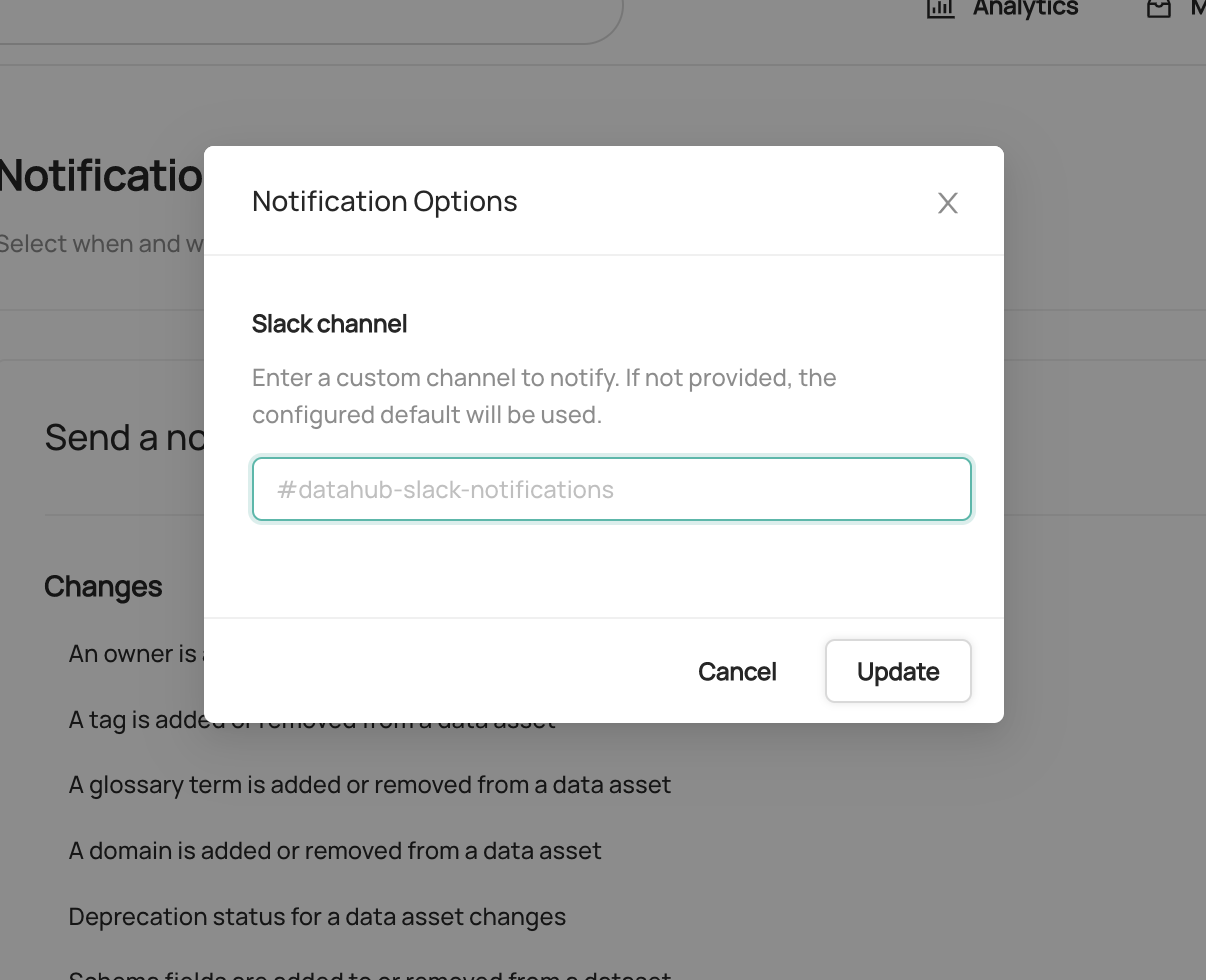

To enable or disable a specific notification type in Slack, simply click the check mark. By default, all notification types are enabled.

To customize the channel where notifications are sent, click the button to the right of the check box.

-

+

If provided, a custom channel will be used to route notifications of the given type. If not provided, the default channel will be used.

That's it! You should begin to receive notifications on Slack. Note that it may take up to 1 minute for notification settings to take effect after saving.

@@ -94,7 +94,7 @@ For now we support sending notifications to

- Go to the Slack channel for which you want to get channel ID

- Check the URL e.g. for the troubleshoot channel in OSS DataHub slack

-

+

- Notice `TUMKD5EGJ/C029A3M079U` in the URL

- Team ID = `TUMKD5EGJ` from above

@@ -108,4 +108,4 @@ For now we support sending notifications to

- Click on “More”

- Click on “Copy member ID”

-

+

diff --git a/docs/townhall-history.md b/docs/townhall-history.md

index cea81013f14042..51a8770ef09284 100644

--- a/docs/townhall-history.md

+++ b/docs/townhall-history.md

@@ -2,6 +2,21 @@

A list of previous Town Halls, their planned schedule, and the recording of the meeting.

+## 12/01/2022

+[Full YouTube video](https://youtu.be/BlCLhG8lGoY)

+

+### Agenda

+

+November Town Hall (in December!)

+

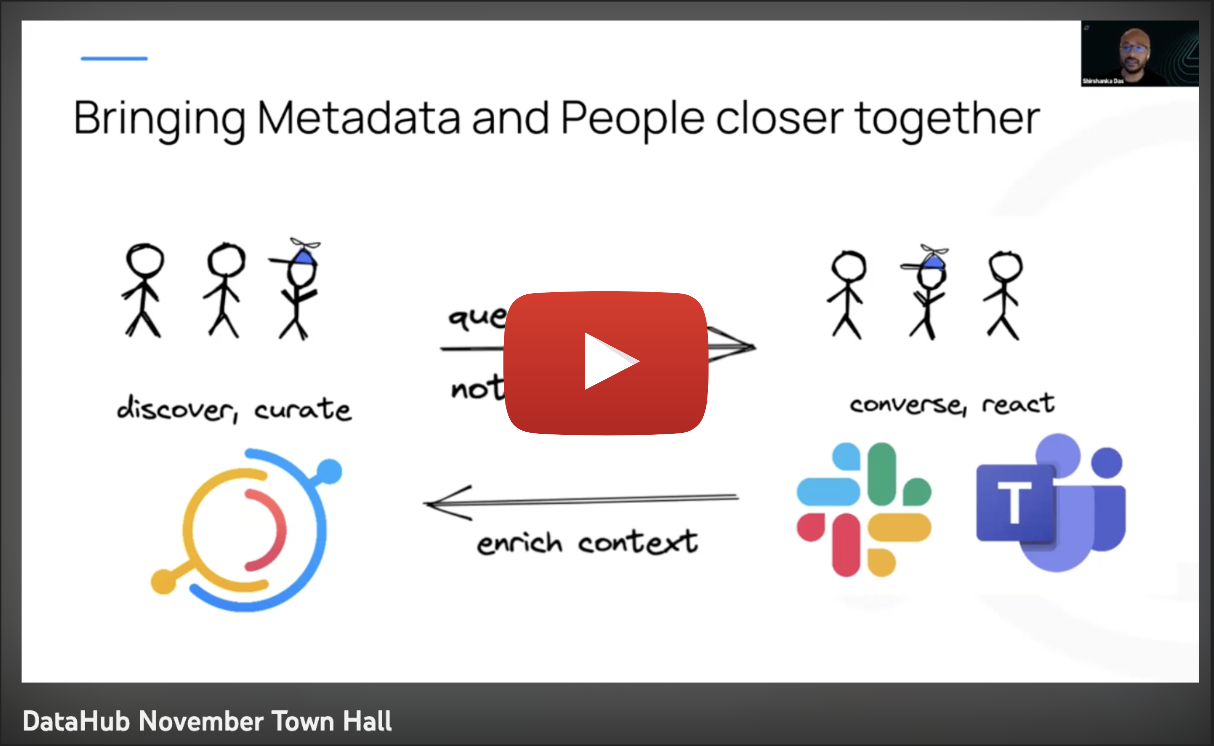

+- Community Case Study - The Pinterest Team will share how they have integrated DataHub + Thrift and extended the Metadata Model with a Data Element entity to capture semantic types.

+- NEW! Ingestion Quickstart Guides - DataHub newbies, this one is for you! We’re rolling out ingestion quickstart guides to help you quickly get up and running with DataHub + Snowflake, BigQuery, and more!

+- NEW! In-App Product Tours - We’re making it easier than ever for end-users to get familiar with all that DataHub has to offer - hear all about the in-product onboarding resources we’re rolling out soon!

+- DataHub UI Navigation and Performance - Learn all about upcoming changes to our user experience to make it easier (and faster!) for end users to work within DataHub.

+- Sneak Peek! Manual Lineage via the UI - The Community asked and we’re delivering! Soon you’ll be able to manually add lineage connections between Entities in DataHub.

+- NEW! Slack + Microsoft Teams Integrations - Send automated alerts to Slack and/or Teams to keep track of critical events and changes within DataHub.

+- Hacktoberfest Winners Announced - We’ll recap this year’s Hacktoberfest and announce three winners of a $250 Amazon gift card & DataHub Swag.

+

## 10/27/2022

[Full YouTube video](https://youtu.be/B74WHxX5EMk)

diff --git a/entity-registry/build.gradle b/entity-registry/build.gradle

index 3594e0440f63d4..af742d240d1e6b 100644

--- a/entity-registry/build.gradle

+++ b/entity-registry/build.gradle

@@ -4,7 +4,8 @@ dependencies {

compile spec.product.pegasus.data

compile spec.product.pegasus.generator

compile project(path: ':metadata-models')

- compile externalDependency.lombok

+ implementation externalDependency.slf4jApi

+ compileOnly externalDependency.lombok

compile externalDependency.guava

compile externalDependency.jacksonDataBind

compile externalDependency.jacksonDataFormatYaml

diff --git a/ingestion-scheduler/build.gradle b/ingestion-scheduler/build.gradle

index 7023ce1208b513..3dec8ee400150a 100644

--- a/ingestion-scheduler/build.gradle

+++ b/ingestion-scheduler/build.gradle

@@ -4,12 +4,12 @@ dependencies {

compile project(path: ':metadata-models')

compile project(path: ':metadata-io')

compile project(path: ':metadata-service:restli-client')

- compile externalDependency.lombok

+ implementation externalDependency.slf4jApi

+ compileOnly externalDependency.lombok

annotationProcessor externalDependency.lombok

testCompile externalDependency.mockito

testCompile externalDependency.testng

- testAnnotationProcessor externalDependency.lombok

constraints {

implementation(externalDependency.log4jCore) {

diff --git a/li-utils/build.gradle b/li-utils/build.gradle

index 6a6971589ae8b0..d11cd86659605c 100644

--- a/li-utils/build.gradle

+++ b/li-utils/build.gradle

@@ -20,6 +20,7 @@ dependencies {

}

compile externalDependency.guava

+ implementation externalDependency.slf4jApi

compileOnly externalDependency.lombok

annotationProcessor externalDependency.lombok

diff --git a/metadata-dao-impl/kafka-producer/build.gradle b/metadata-dao-impl/kafka-producer/build.gradle

index 18b129297f19f6..5b40eb5f322321 100644

--- a/metadata-dao-impl/kafka-producer/build.gradle

+++ b/metadata-dao-impl/kafka-producer/build.gradle

@@ -9,6 +9,7 @@ dependencies {

compile externalDependency.kafkaClients

+ implementation externalDependency.slf4jApi

compileOnly externalDependency.lombok

annotationProcessor externalDependency.lombok

diff --git a/metadata-ingestion/schedule_docs/airflow.md b/metadata-ingestion/schedule_docs/airflow.md

index 03a5930fea1368..e48710964b01c7 100644

--- a/metadata-ingestion/schedule_docs/airflow.md

+++ b/metadata-ingestion/schedule_docs/airflow.md

@@ -2,11 +2,41 @@

If you are using Apache Airflow for your scheduling then you might want to also use it for scheduling your ingestion recipes. For any Airflow specific questions you can go through [Airflow docs](https://airflow.apache.org/docs/apache-airflow/stable/) for more details.

-To schedule your recipe through Airflow you can follow these steps

-- Create a recipe file e.g. `recipe.yml`

-- Ensure the receipe file is in a folder accessible to your airflow workers. You can either specify absolute path on the machines where Airflow is installed or a path relative to `AIRFLOW_HOME`.

-- Ensure [DataHub CLI](../../docs/cli.md) is installed in your airflow environment

-- Create a sample DAG file like [`generic_recipe_sample_dag.py`](../src/datahub_provider/example_dags/generic_recipe_sample_dag.py). This will read your DataHub ingestion recipe file and run it.

+We've provided a few examples of how to configure your DAG:

+

+- [`mysql_sample_dag`](../src/datahub_provider/example_dags/mysql_sample_dag.py) embeds the full MySQL ingestion configuration inside the DAG.

+

+- [`snowflake_sample_dag`](../src/datahub_provider/example_dags/snowflake_sample_dag.py) avoids embedding credentials inside the recipe, and instead fetches them from Airflow's [Connections](https://airflow.apache.org/docs/apache-airflow/stable/howto/connection/index.html) feature. You must configure your connections in Airflow to use this approach.

+

+:::tip

+

+These example DAGs use the `PythonVirtualenvOperator` to run the ingestion. This is the recommended approach, since it guarantees that there will not be any conflicts between DataHub and the rest of your Airflow environment.

+

+When configuring the task, it's important to list `acryl-datahub` with the extra for your source in `requirements`, and to set the `system_site_packages` option to `False`.

+

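+```py
+from airflow.operators.python import PythonVirtualenvOperator
+
+ingestion_task = PythonVirtualenvOperator(
+    task_id="ingestion_task",
+    requirements=[
+        "acryl-datahub[]",
+    ],
+    # Do not inherit packages from the Airflow environment.
+    system_site_packages=False,
+    # The function that runs your ingestion inside the virtualenv.
+    python_callable=your_callable,
+)
+```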

+

+:::
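The `your_callable` placeholder is the function the operator runs inside the new virtualenv. Below is a minimal sketch of one, modeled on the inline-recipe approach of `mysql_sample_dag`; the function name and its parameters are hypothetical. Imports go inside the function because `PythonVirtualenvOperator` executes the function's source in a separate environment, where module-level imports from the DAG file are not available.

```python
def run_datahub_ingestion(
    mysql_host_port: str,
    mysql_username: str,
    mysql_password: str,
    datahub_server: str,
) -> None:
    # Imported here, not at module level: PythonVirtualenvOperator runs this
    # function's source inside a fresh virtualenv built from `requirements`.
    from datahub.ingestion.run.pipeline import Pipeline

    pipeline = Pipeline.create(
        {
            "source": {
                "type": "mysql",
                "config": {
                    "host_port": mysql_host_port,
                    "username": mysql_username,
                    # Prefer Airflow Connections over plain arguments for secrets.
                    "password": mysql_password,
                },
            },
            "sink": {
                "type": "datahub-rest",
                "config": {"server": datahub_server},
            },
        }
    )
    pipeline.run()
    # Fail the Airflow task if the ingestion reported errors.
    pipeline.raise_from_status()
```

You would pass it as `python_callable=run_datahub_ingestion`, supply the arguments through `op_kwargs`, and list a source extra such as `acryl-datahub[mysql]` in `requirements`.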

+

+

+## Advanced: loading a recipe file

+

+In more advanced cases, you might want to store your ingestion recipe in a file and load it from your task.

+

+- Ensure the recipe file is in a folder accessible to your airflow workers. You can either specify absolute path on the machines where Airflow is installed or a path relative to `AIRFLOW_HOME`.

+- Ensure [DataHub CLI](../../docs/cli.md) is installed in your airflow environment.

+- Create a DAG task to read your DataHub ingestion recipe file and run it. See the example below for reference.

- Deploy the DAG file into airflow for scheduling. Typically this involves checking in the DAG file into your dags folder which is accessible to your Airflow instance.

-Alternatively you can have an inline recipe as given in [`mysql_sample_dag.py`](../src/datahub_provider/example_dags/mysql_sample_dag.py). This runs a MySQL metadata ingestion pipeline using an inlined configuration.

+Example: [`generic_recipe_sample_dag`](../src/datahub_provider/example_dags/generic_recipe_sample_dag.py)

+

+
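The "Advanced: loading a recipe file" steps say the recipe path may be absolute or relative to `AIRFLOW_HOME`. A minimal sketch of that resolution rule follows; `resolve_recipe_path` is a hypothetical helper, not part of DataHub or Airflow, and the `~/airflow` fallback assumes Airflow's default home directory when `AIRFLOW_HOME` is unset.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def resolve_recipe_path(
    recipe_path: str, env: Optional[Mapping[str, str]] = None
) -> Path:
    """Hypothetical helper: resolve a recipe path as the steps above describe."""
    env = os.environ if env is None else env
    path = Path(recipe_path).expanduser()
    if path.is_absolute():
        # Absolute paths are used as-is on the worker machine.
        return path
    # Relative paths are taken relative to AIRFLOW_HOME (assumed default: ~/airflow).
    airflow_home = Path(env.get("AIRFLOW_HOME", "~/airflow")).expanduser()
    return airflow_home / path
```

For example, `resolve_recipe_path("recipes/mysql.dhub.yaml", env={"AIRFLOW_HOME": "/opt/airflow"})` gives `/opt/airflow/recipes/mysql.dhub.yaml`, which could then be passed to the recipe loader used in the linked example DAG. Passing `env` explicitly keeps the helper easy to test without touching the real environment.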

diff --git a/metadata-ingestion/setup.cfg b/metadata-ingestion/setup.cfg

index b6d4f55a09e3a5..3f0e8ab611b054 100644

--- a/metadata-ingestion/setup.cfg

+++ b/metadata-ingestion/setup.cfg

@@ -27,6 +27,7 @@ plugins =

exclude = ^(venv|build|dist)/

ignore_missing_imports = yes

namespace_packages = no

+implicit_optional = no

strict_optional = yes

check_untyped_defs = yes

disallow_incomplete_defs = yes

@@ -38,8 +39,16 @@ disallow_untyped_defs = no

# try to be a bit more strict in certain areas of the codebase

[mypy-datahub.*]

ignore_missing_imports = no

+[mypy-datahub_provider.*]

+ignore_missing_imports = no

[mypy-tests.*]

ignore_missing_imports = no

+[mypy-google.protobuf.*]

+# mypy sometimes ignores the above ignore_missing_imports = yes

+# See https://github.com/python/mypy/issues/10632 and

+# https://github.com/python/mypy/issues/10619#issuecomment-1174208395

+# for a discussion of why this happens.

+ignore_missing_imports = yes

[mypy-datahub.configuration.*]

disallow_untyped_defs = yes

[mypy-datahub.emitter.*]

diff --git a/metadata-ingestion/setup.py b/metadata-ingestion/setup.py

index 1a744a6fe328a6..5547914236de69 100644

--- a/metadata-ingestion/setup.py

+++ b/metadata-ingestion/setup.py

@@ -221,7 +221,7 @@ def get_long_description():

delta_lake = {

*s3_base,

- "deltalake>=0.6.3",

+ "deltalake>=0.6.3, != 0.6.4",

}

powerbi_report_server = {"requests", "requests_ntlm"}

@@ -385,8 +385,7 @@ def get_long_description():

"types-ujson>=5.2.0",

"types-termcolor>=1.0.0",

"types-Deprecated",

- # Mypy complains with 4.21.0.0 => error: Library stubs not installed for "google.protobuf.descriptor"

- "types-protobuf<4.21.0.0",

+ "types-protobuf>=4.21.0.1",

}

base_dev_requirements = {

@@ -399,10 +398,7 @@ def get_long_description():

"flake8>=3.8.3",

"flake8-tidy-imports>=4.3.0",

"isort>=5.7.0",

- # mypy 0.990 enables namespace packages by default and sets

- # no implicit optional to True.

- # FIXME: Enable mypy 0.990 when our codebase is fixed.

- "mypy>=0.981,<0.990",

+ "mypy==0.991",

# pydantic 1.8.2 is incompatible with mypy 0.910.

# See https://github.com/samuelcolvin/pydantic/pull/3175#issuecomment-995382910.

# Restricting top version to <1.10 until we can fix our types.

@@ -465,8 +461,9 @@ def get_long_description():

dev_requirements = {

*base_dev_requirements,

+ # Extra requirements for Airflow.

"apache-airflow[snowflake]>=2.0.2", # snowflake is used in example dags

- "snowflake-sqlalchemy<=1.2.4", # make constraint consistent with extras

+ "virtualenv", # needed by PythonVirtualenvOperator

}

full_test_dev_requirements = {

diff --git a/metadata-ingestion/src/datahub/api/graphql/operation.py b/metadata-ingestion/src/datahub/api/graphql/operation.py

index 5e1575e6f75dd2..9cb40ce5815a56 100644

--- a/metadata-ingestion/src/datahub/api/graphql/operation.py

+++ b/metadata-ingestion/src/datahub/api/graphql/operation.py

@@ -122,8 +122,6 @@ def query_operations(

"operationType": operation_type,

"partition": partition,

}

- if filter

- else None

),

},

)

diff --git a/metadata-ingestion/src/datahub/cli/cli_utils.py b/metadata-ingestion/src/datahub/cli/cli_utils.py

index 5bd8841e6755a8..d6ece814c9c47a 100644

--- a/metadata-ingestion/src/datahub/cli/cli_utils.py

+++ b/metadata-ingestion/src/datahub/cli/cli_utils.py

@@ -581,6 +581,11 @@ def post_entity(

curl_command,

)

response = session.post(url, payload)

+ if not response.ok:

+ try:

+ log.info(response.json()["message"].strip())

+ except Exception:

+ log.info(f"post_entity failed: {response.text}")

response.raise_for_status()

return response.status_code
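The new `post_entity` branch logs the server's `"message"` field when the body is JSON and otherwise falls back to the raw response text. A minimal, dependency-free sketch of that fallback; `describe_failed_post` is a hypothetical name, and it assumes error bodies may or may not be JSON objects with a `"message"` key.

```python
import json


def describe_failed_post(body: str) -> str:
    """Hypothetical helper mirroring the logging fallback added to post_entity."""
    try:
        # Preferred path: a JSON object carrying a "message" field.
        return json.loads(body)["message"].strip()
    except Exception:
        # Non-JSON bodies, or JSON without "message", fall back to the raw text.
        return f"post_entity failed: {body}"
```

The broad `except Exception` matches the patch: a malformed body, a missing key, or a non-object JSON value all end up in the same fallback rather than masking the original HTTP error raised by `raise_for_status()`.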

diff --git a/metadata-ingestion/src/datahub/configuration/common.py b/metadata-ingestion/src/datahub/configuration/common.py

index e134a5a8495b91..95d852bbe7b606 100644

--- a/metadata-ingestion/src/datahub/configuration/common.py

+++ b/metadata-ingestion/src/datahub/configuration/common.py

@@ -80,7 +80,7 @@ class OperationalError(PipelineExecutionError):

message: str

info: dict

- def __init__(self, message: str, info: dict = None):

+ def __init__(self, message: str, info: Optional[dict] = None):

self.message = message

self.info = info or {}
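With `implicit_optional = no` in `setup.cfg` (the default from mypy 0.990 onward), a `None` default no longer makes a parameter `Optional` implicitly, so `info: dict = None` must be spelled `Optional[dict]`. A minimal sketch of the same pattern on a hypothetical `ExampleError` class:

```python
from typing import Optional


class ExampleError(Exception):
    """Hypothetical error type following the OperationalError pattern."""

    def __init__(self, message: str, info: Optional[dict] = None):
        super().__init__(message)
        self.message = message
        # `info or {}` gives each instance its own dict instead of a shared default.
        self.info = info or {}
```

Using `None` as the default and normalizing inside `__init__` avoids the shared-mutable-default pitfall that `info: dict = {}` would introduce.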

diff --git a/metadata-ingestion/src/datahub/configuration/pattern_utils.py b/metadata-ingestion/src/datahub/configuration/pattern_utils.py

new file mode 100644

index 00000000000000..313e68c41812f0

--- /dev/null

+++ b/metadata-ingestion/src/datahub/configuration/pattern_utils.py

@@ -0,0 +1,13 @@

+from datahub.configuration.common import AllowDenyPattern

+

+

+def is_schema_allowed(

+ schema_pattern: AllowDenyPattern,

+ schema_name: str,

+ db_name: str,

+ match_fully_qualified_schema_name: bool,

+) -> bool:

+ if match_fully_qualified_schema_name:

+ return schema_pattern.allowed(f"{db_name}.{schema_name}")

+ else:

+ return schema_pattern.allowed(schema_name)
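`is_schema_allowed` matches either the bare schema name or `db.schema`, depending on `match_fully_qualified_schema_name`. The sketch below uses `SimpleAllowDeny`, a hypothetical stand-in that assumes `AllowDenyPattern` roughly behaves as regex allow/deny lists with deny taking precedence; the real class has more options (such as case handling).

```python
import re
from dataclasses import dataclass, field
from typing import List


@dataclass
class SimpleAllowDeny:
    """Hypothetical stand-in for AllowDenyPattern: regex allow/deny lists."""

    allow: List[str] = field(default_factory=lambda: [".*"])
    deny: List[str] = field(default_factory=list)

    def allowed(self, value: str) -> bool:
        # Deny patterns win over allow patterns.
        if any(re.match(p, value) for p in self.deny):
            return False
        return any(re.match(p, value) for p in self.allow)


def is_schema_allowed(
    schema_pattern: SimpleAllowDeny,
    schema_name: str,
    db_name: str,
    match_fully_qualified_schema_name: bool,
) -> bool:
    # Same branching as the new pattern_utils helper.
    if match_fully_qualified_schema_name:
        return schema_pattern.allowed(f"{db_name}.{schema_name}")
    return schema_pattern.allowed(schema_name)
```

With `allow=[r"analytics\.public$"]`, `public` in database `analytics` passes only when fully qualified matching is on. Conversely, a pattern written for bare names, such as `allow=["public"]`, stops matching once the flag is enabled, because the string being matched now starts with the database name.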

diff --git a/metadata-ingestion/src/datahub/ingestion/api/source.py b/metadata-ingestion/src/datahub/ingestion/api/source.py

index 70e5ce7db7e821..9f3740aa9f3eea 100644

--- a/metadata-ingestion/src/datahub/ingestion/api/source.py

+++ b/metadata-ingestion/src/datahub/ingestion/api/source.py

@@ -120,7 +120,12 @@ class Source(Closeable, metaclass=ABCMeta):

@classmethod

def create(cls, config_dict: dict, ctx: PipelineContext) -> "Source":

- pass

+ # Technically, this method should be abstract. However, the @config_class

+ # decorator automatically generates a create method at runtime if one is

+ # not defined. Python still treats the class as abstract because it thinks

+ # the create method is missing. To avoid the class becoming abstract, we

+ # can't make this method abstract.

+ raise NotImplementedError('sources must implement "create"')

@abstractmethod

def get_workunits(self) -> Iterable[WorkUnit]:
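The new comment in `Source.create` explains why it raises instead of being abstract: `ABCMeta` computes `__abstractmethods__` when the class body finishes, so a method attached later by a decorator does not clear the abstract flag. A minimal sketch reproducing that behaviour; `AbstractBase`, `FixedBase`, and `add_create` are hypothetical names, with `add_create` standing in for a decorator like `@config_class`.

```python
from abc import ABCMeta, abstractmethod


class AbstractBase(metaclass=ABCMeta):
    """Hypothetical base that declares create() abstract."""

    @classmethod
    @abstractmethod
    def create(cls, config: dict) -> "AbstractBase":
        ...


class FixedBase(metaclass=ABCMeta):
    """Hypothetical base using the patch's approach: a concrete create()."""

    @classmethod
    def create(cls, config: dict) -> "FixedBase":
        raise NotImplementedError('sources must implement "create"')


def add_create(cls):
    """Hypothetical decorator that generates create() after class creation."""

    def create(inner_cls, config: dict):
        return inner_cls()

    # Attached after the class body has run, so ABCMeta has already
    # computed __abstractmethods__ without seeing this method.
    cls.create = classmethod(create)
    return cls


@add_create
class StillAbstract(AbstractBase):
    pass


@add_create
class Works(FixedBase):
    pass
```

`StillAbstract.create({})` raises `TypeError` because the class is still considered abstract, while `Works.create({})` returns an instance. On Python 3.10+, `abc.update_abstractmethods(cls)` can recompute the set after such a decorator runs; the patch's approach avoids depending on that.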

diff --git a/metadata-ingestion/src/datahub/ingestion/api/workunit.py b/metadata-ingestion/src/datahub/ingestion/api/workunit.py

index 522bcd9fbdbf7e..53a77798f756c8 100644

--- a/metadata-ingestion/src/datahub/ingestion/api/workunit.py

+++ b/metadata-ingestion/src/datahub/ingestion/api/workunit.py

@@ -1,5 +1,5 @@

from dataclasses import dataclass

-from typing import Iterable, Union, overload

+from typing import Iterable, Optional, Union, overload

from datahub.emitter.mcp import MetadataChangeProposalWrapper

from datahub.ingestion.api.source import WorkUnit

@@ -42,9 +42,9 @@ def __init__(

def __init__(

self,

id: str,

- mce: MetadataChangeEvent = None,

- mcp: MetadataChangeProposalWrapper = None,

- mcp_raw: MetadataChangeProposal = None,

+ mce: Optional[MetadataChangeEvent] = None,

+ mcp: Optional[MetadataChangeProposalWrapper] = None,

+ mcp_raw: Optional[MetadataChangeProposal] = None,

treat_errors_as_warnings: bool = False,

):

super().__init__(id)