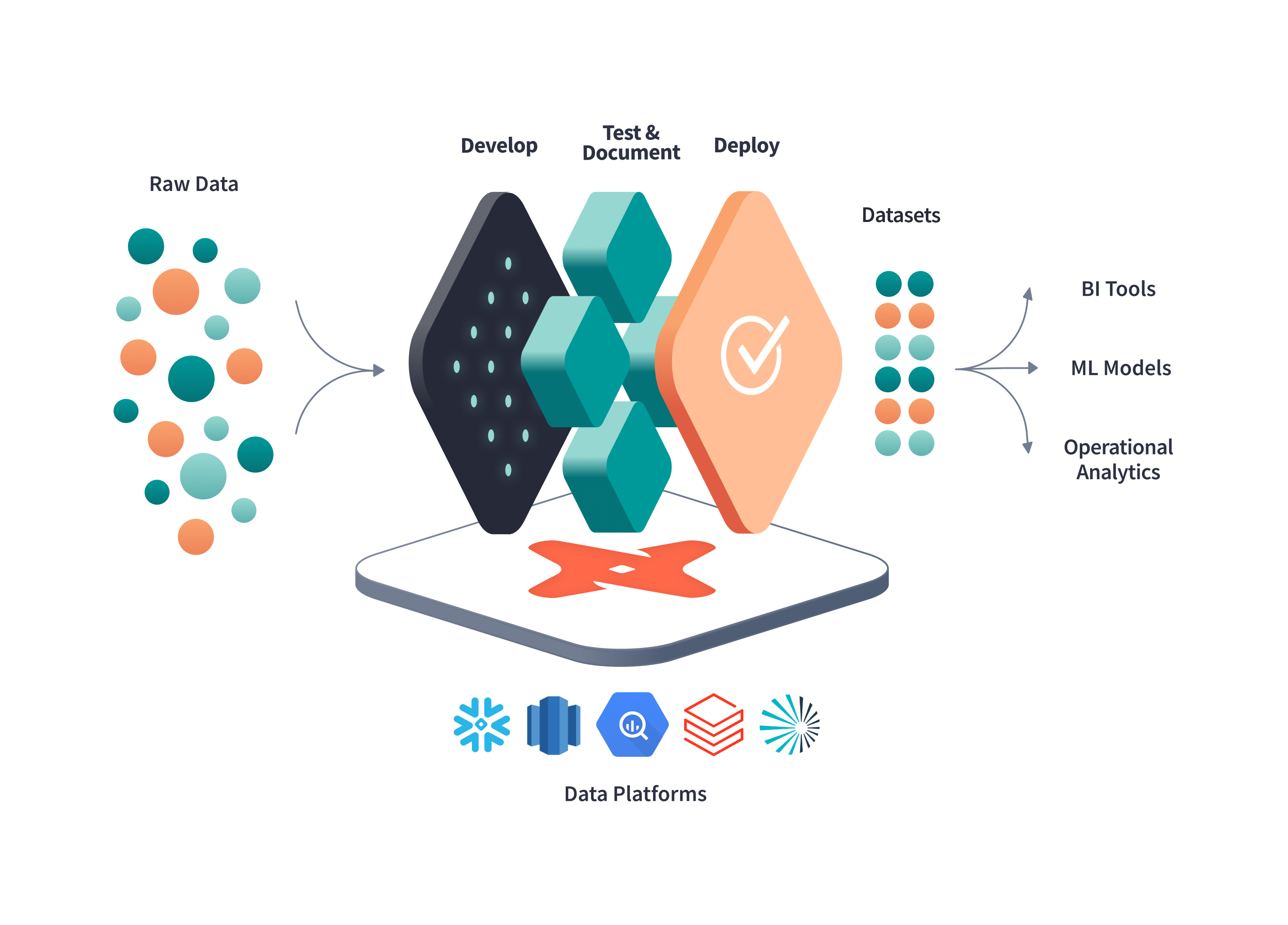

In this HashiQube DevOps lab, you'll get hands-on experience with dbt (Data Build Tool) - a transformation tool that enables data analysts and engineers to transform, test, and document data in cloud data warehouses.

Before provisioning, review the dbt and adapter versions located in common.sh.

You can control which adapter and version you want to install with dbt by changing the DBT_WITH variable to one of these values:

DBT_WITH=postgres

# AVAILABLE OPTIONS:

# postgres - PostgreSQL adapter

# redshift - Amazon Redshift adapter

# bigquery - Google BigQuery adapter

# snowflake - Snowflake adapter

# mssql - SQL Server and Synapse adapter

# spark - Apache Spark adapter

# all - Install all adapters (excluding mssql)

bash docker/docker.sh

bash database/postgresql.sh

bash dbt/dbt.sh

vagrant up --provision-with basetools,docsify,docker,postgresql,dbt

docker compose exec hashiqube /bin/bash

bash hashiqube/basetools.sh

bash docker/docker.sh

bash docsify/docsify.sh

bash database/postgresql.sh

bash dbt/dbt.sh

The provisioner automatically sets up the Jaffle Shop example project from dbt Labs.

- Run the Provision step above.

- The example project from https://github.com/dbt-labs/jaffle_shop is already cloned into `/vagrant/dbt/jaffle_shop`.

- Enter the HashiQube environment: `vagrant ssh`

- Navigate to the example project: `cd /vagrant/dbt/jaffle_shop`

- Explore the project structure and follow the tutorial at https://github.com/dbt-labs/jaffle_shop#running-this-project.
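The steps above can be sketched as a small shell helper. This is an illustration only: `run_step` and `jaffle_shop_workflow` are hypothetical names, and the command sequence (`dbt debug`, `dbt seed`, `dbt run`, `dbt test`) is the usual order for a project with seed files, not something taken from the provisioning scripts. By default the commands are only printed.

```shell
# Sketch: run the Jaffle Shop workflow step by step.
# With DRY_RUN=1 (the default here) each command is printed instead of
# executed, so the snippet is safe to try without a configured database.
run_step() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

jaffle_shop_workflow() {
  run_step dbt debug || return 1   # check the connection from profiles.yml
  run_step dbt seed  || return 1   # load the CSV seed files
  run_step dbt run   || return 1   # build the models
  run_step dbt test  || return 1   # run the tests defined on the models
}
```

To execute the commands for real, run `DRY_RUN=0 jaffle_shop_workflow` from inside `/vagrant/dbt/jaffle_shop`.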

- Enter a HashiQube SSH session: `vagrant ssh`

- If you have an existing dbt project under your home directory, you can access it via the `/osdata` volume, which is mapped to your home directory.

- Update your `profiles.yml` with the correct credentials for your target database.

- Test your connection: `dbt debug`

- Run your dbt project: `dbt run`
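For the `profiles.yml` step, a minimal Postgres profile might look like the one written by the sketch below. Every value in it (the profile name `my_project`, host, user, password, database, schema) is a placeholder, not the HashiQube default, and the profile name has to match the `profile:` entry in your project's `dbt_project.yml`. The helper name `write_postgres_profile` is hypothetical.

```shell
# Sketch: write a minimal Postgres profile into the given directory.
# All connection values are placeholders; replace them with your own.
# Note: this overwrites any profiles.yml already present in that directory.
write_postgres_profile() {
  profiles_dir="$1"
  if [ -z "$profiles_dir" ]; then
    echo "usage: write_postgres_profile DIR" >&2
    return 1
  fi
  mkdir -p "$profiles_dir" || return 1
  cat > "$profiles_dir/profiles.yml" <<'EOF'
my_project:
  target: dev
  outputs:
    dev:
      type: postgres
      host: localhost
      port: 5432
      user: my_user
      password: my_password
      dbname: my_database
      schema: public
      threads: 4
EOF
}
```

Writing to a scratch directory keeps an existing profile untouched, for example `write_postgres_profile /tmp/dbt-demo` followed by `dbt debug --profiles-dir /tmp/dbt-demo`.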

Once provisioning is complete, you can access the dbt web interface:

dbt supports multiple database adapters, allowing you to connect to various data warehouses.

The mssql adapters (SQL Server and Synapse) require a specific version of dbt:

Core:

- installed: 1.1.0

- latest: 1.2.1 - Update available!

Plugins:

- postgres: 1.1.0 - Update available!

- synapse: 1.1.0 - Up to date!

- sqlserver: 1.1.0 - Up to date!

When using other adapters, you'll see something like:

Core:

- installed: 1.2.1

- latest: 1.2.1 - Up to date!

Plugins:

- spark: 1.2.0 - Up to date!

- postgres: 1.2.1 - Up to date!

- snowflake: 1.2.0 - Up to date!

- redshift: 1.2.1 - Up to date!

- bigquery: 1.2.0 - Up to date!
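To pick the outdated components out of version output like the listings above, a small filter is enough. This is a sketch that assumes the line format shown here; `outdated_components` is a hypothetical helper name, and a flagged `latest` line refers to dbt Core itself.

```shell
# Sketch: read version output on stdin and print the name of every
# component whose line is flagged "Update available".
outdated_components() {
  # Keep flagged lines, strip the leading "- ", then drop everything
  # from the first colon onward so only the component name remains.
  grep 'Update available' |
    sed -e 's/^[[:space:]]*-[[:space:]]*//' -e 's/:.*$//'
}
```

A possible invocation is `dbt --version 2>&1 | outdated_components`; the `2>&1` is there in case the installed dbt version prints this information to stderr.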

As your dbt project grows, dbt run and dbt test commands can become time-consuming. Here are some optimization strategies:

Store artifacts to reuse in future runs:

# Run only new or modified models

dbt run --select [...] --defer --state path/to/artifacts

# Test only new or modified models

dbt test --select [...] --defer --state path/to/artifacts

This approach:

- Executes only what's new or changed in your code

- Reuses previously compiled artifacts

- Significantly reduces execution time for large projects

- Is perfect for CI/CD pipelines and pull request validation
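In a CI job, the choice between a full run and a state-based run could be sketched as follows. The helper name `state_args` is hypothetical, and the `state:modified+` selector is an assumed stand-in for the selection elided as `[...]` in the commands above; pick the selector that suits your project.

```shell
# Sketch: print extra dbt arguments depending on whether artifacts from
# an earlier run exist. dbt compares against the manifest.json found in
# the directory passed to --state.
state_args() {
  state_dir="$1"
  if [ -f "$state_dir/manifest.json" ]; then
    # Assumed selector: modified models plus everything downstream.
    echo "--select state:modified+ --defer --state $state_dir"
  else
    # No earlier manifest to compare against: print nothing,
    # which means a full run.
    echo ""
  fi
}
```

It would be used unquoted so the arguments split into words, for example `dbt run $(state_args path/to/artifacts)`. That also means the artifact path must not contain spaces.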

The dbt environment is set up using these scripts:

#!/bin/bash

# Set versions

DBT_VERSION="1.2.1"

DBT_SQL_SERVER_VERSION="1.1.0"

DBT_SQL_SYNAPSE_VERSION="1.1.0"

DBT_WITH=postgres

# Available adapter options:

# postgres

# redshift

# bigquery

# snowflake

# mssql

# spark

# all

#!/bin/bash

# This script provisions the global dbt environment...
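As an illustration of how a `DBT_WITH` value might translate into packages to install, consider the sketch below. The package names are the public adapter packages on PyPI, but the mapping itself and the helper name `adapter_packages` are assumptions, not a copy of what `dbt.sh` does. Version pins are left out on purpose, since the adapters do not all share the same version number.

```shell
# Sketch: map a DBT_WITH value to the adapter packages it implies.
# The mapping is an assumption made for illustration.
adapter_packages() {
  case "$1" in
    postgres)  echo "dbt-postgres" ;;
    redshift)  echo "dbt-redshift" ;;
    bigquery)  echo "dbt-bigquery" ;;
    snowflake) echo "dbt-snowflake" ;;
    spark)     echo "dbt-spark" ;;
    mssql)     echo "dbt-sqlserver dbt-synapse" ;;
    # "all" excludes mssql, matching the option list in this document.
    all)       echo "dbt-postgres dbt-redshift dbt-bigquery dbt-snowflake dbt-spark" ;;
    *)         echo "unknown DBT_WITH value: $1" >&2
               return 1 ;;
  esac
}
```

For example, `adapter_packages "$DBT_WITH"` prints the package list for the configured adapter and returns a non-zero status for a value outside the documented options.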