High speed alignment of depth and color frames #8362

Comments

Hi @fisakhan, please try setting the color FPS to 60 instead of 30. This may help to reduce blurring on the RGB image, because the RGB sensor on the D435 has a slower rolling shutter than the fast global shutter of the D435's depth sensor. The recent D455 camera model is the first RealSense camera to have a fast global shutter on both the RGB and depth sensors.

It looks like the depth image has a much shorter exposure time than the color image, which has significant motion blur. Based on your code, I'm assuming you are using auto exposure. If you need the frames to be precisely aligned, I would recommend manually setting the exposure of both the color and depth cameras to the same duration. Also keep in mind that the exposure values for the color and depth cameras are on different scales in the SDK.

@MartyG-RealSense The difference between rolling and global shutters is not speed. Rolling shutters start exposing line by line and can sometimes cause a skew effect with fast movement, whereas global shutters expose the entire sensor at the same time. Neither has an effect on motion blur, which is determined by exposure time.
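
To make the distinction concrete, here is a small sketch of the timing model described above. It assumes a sensor whose rows start exposing one after another at a fixed line time, and an edge moving horizontally at constant speed. The line time, row count and speed are illustrative assumptions, not D435 specifications. Blur depends only on exposure time. Skew depends only on how long it takes to start the last row after the first.

```python
# Illustrative timing model. The line time, row count and speed used below are
# assumed example values, not D435 sensor specifications.


def blur_px(speed_px_per_s: float, exposure_ms: float) -> float:
    """Length of the smear a moving edge leaves during one exposure.

    It depends only on exposure time, so it is the same for rolling and
    global shutters.
    """
    return speed_px_per_s * exposure_ms / 1000.0


def rolling_skew_px(speed_px_per_s: float, rows: int, line_time_us: float) -> float:
    """Horizontal shift between the first and last row of a rolling-shutter frame.

    Row i starts exposing i * line_time_us after row 0. A global shutter starts
    every row at once, so its skew is zero regardless of exposure time.
    """
    readout_s = (rows - 1) * line_time_us / 1e6
    return speed_px_per_s * readout_s


# Example: an edge moving at 1000 px/s, 8 ms exposure, 480 rows, 20 us per row.
example_blur = blur_px(1000, 8)                # 8.0 px, set by exposure alone
example_skew = rolling_skew_px(1000, 480, 20)  # about 9.58 px, set by row timing alone
```

Doubling the exposure doubles the blur but leaves the skew unchanged. Shortening the line time reduces the skew but leaves the blur unchanged.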

@MartyG-RealSense I guess that makes sense within the context of the SDK. Increasing the frame rate limits the maximum time the auto exposure will expose for. But a frame with an exposure of 16 ms at 60 fps has exactly the same amount of motion blur as a frame with an exposure of 16 ms at 30 fps. If someone also has resolution and data bandwidth requirements, running at 60 fps may not be possible. Also, setting the frame rate to limit the auto exposure duration still does not guarantee that the color and depth cameras start and stop their exposures at the same time, which looks to be the main issue in the example photos.
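
Both points can be sketched with a simple model. The first is that frame rate only caps exposure. The second is that the two sensors' exposure windows must also coincide, not just match in length. The model treats each sensor's exposure as a time window and assumes that, for constant speed, a moving object appears where it was at the window's midpoint (the centre of its blur smear). Start times, durations and speeds are assumed values, not readings from a camera.

```python
# Each sensor's exposure is modelled as a window [start, start + duration] in ms.
# For an object moving at constant speed, the centre of its blur smear is where
# it was at the window's midpoint. Start times and speeds are assumed values.


def max_exposure_ms(fps: float) -> float:
    """Longest exposure that fits in one frame period at this frame rate."""
    return 1000.0 / fps


def midpoint_ms(start_ms: float, exposure_ms: float) -> float:
    """Time at the centre of an exposure window."""
    return start_ms + exposure_ms / 2.0


def misalignment_px(speed_px_per_s: float,
                    depth_start_ms: float, depth_exposure_ms: float,
                    color_start_ms: float, color_exposure_ms: float) -> float:
    """Approximate offset between the object's position in depth and in color."""
    dt_ms = (midpoint_ms(color_start_ms, color_exposure_ms)
             - midpoint_ms(depth_start_ms, depth_exposure_ms))
    return abs(speed_px_per_s * dt_ms) / 1000.0
```

Consider an object moving at 1000 px/s. If two equal 16 ms exposures start 5 ms apart, the result is about a 5 px offset. Now take two windows that start together but last 8.5 ms and 16.6 ms. Their centres are about 4 ms apart, so the result is an offset of about 4 px. In this model, only coinciding windows remove the offset.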

Thanks @MartyG-RealSense and @sam598 for your responses. First, I don't think rolling or global shutter is the problem; I found the same problem with the D415 as well. Second, blur in the color image is not a problem for me, but alignment is (I need nearly perfect alignment). As @sam598 suggested, exposure might be the cause. Let me experiment with the exposure and see if that helps.

sensor.get_option(rs.option.exposure) returns 8500.0 for the depth sensor and 166.0 for the color sensor. Should I increase the color sensor's value from 166 to 8500?

Hi @fisakhan, the exposure settings for the depth and color sensors have different scales (large values for depth and small values for color), so I would not recommend setting the color exposure to 8500. The image below illustrates color exposure at 166 (upper) and 8500 (lower):

@MartyG-RealSense Yes, increasing the exposure increases the blur and the whitish effect, but it doesn't solve the problem. Increasing the exposure above 500 makes the problem even worse. Setting the FPS to 30 or 60 also doesn't work.

@fisakhan As I mentioned before, the exposure values for the color and depth cameras are on different scales in the SDK. The depth camera exposure is set in microseconds, while for some reason the color camera exposure is set in units of 100 microseconds. So if you wanted both exposures to take the same amount of time, it would be 8500 for the depth sensor and 85 for the color sensor. That is why increasing the color exposure time looked worse. Please keep in mind that this applies only if you want the exposures to last exactly the same amount of time. If you want to follow @MartyG-RealSense's suggestion and control auto exposure using the frame rate, you MUST NOT set the exposure time manually, as this will disable auto exposure.
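
Reading the values reported earlier in the thread with these units shows why the two images differ. A depth value of 8500 is 8.5 ms, while a color value of 166 is 16.6 ms, roughly twice as long. Here is a minimal conversion sketch that assumes exactly the units stated above; the helper names are illustrative.

```python
# Exposure units as described in this thread: the depth sensor takes
# microseconds, the color sensor takes units of 100 microseconds.
DEPTH_UNIT_US = 1
COLOR_UNIT_US = 100


def depth_exposure_ms(value: float) -> float:
    """Convert a depth-sensor exposure setting to milliseconds."""
    return value * DEPTH_UNIT_US / 1000.0


def color_exposure_ms(value: float) -> float:
    """Convert a color-sensor exposure setting to milliseconds."""
    return value * COLOR_UNIT_US / 1000.0


def matching_color_value(depth_value: float) -> float:
    """Color setting whose duration equals the given depth setting."""
    return depth_value * DEPTH_UNIT_US / COLOR_UNIT_US
```

For example, `matching_color_value(8500)` gives 85, the equivalent value quoted above.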

The suggestion of @MartyG-RealSense doesn't work. A color exposure of 85 makes the color image black, so I can't process the color image now.

@fisakhan An exposure of 85 was meant as an example of what the equivalent exposure would be. For example, you could also set the depth camera exposure to 16600 and the color camera exposure to 166.
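
Here is a sketch of applying such a matched pair with pyrealsense2. It assumes a D400-series camera whose color module reports the sensor name "RGB Camera"; you can check the name on your device with `get_info(rs.camera_info.name)`. The option writes are built by a plain function so they can be checked without hardware. `exposure_plan` and `apply_matched_exposure` are hypothetical helper names, not SDK functions.

```python
def exposure_plan(color_value: int):
    """Option writes that give both sensors the same manual exposure duration.

    color_value is in the color sensor's 100 us units; the depth sensor takes
    microseconds, so its value is color_value * 100.
    """
    depth_value = color_value * 100
    return [
        ("depth", "enable_auto_exposure", 0),  # manual exposure: auto exposure off
        ("depth", "exposure", depth_value),
        ("color", "enable_auto_exposure", 0),
        ("color", "exposure", color_value),
    ]


def apply_matched_exposure(color_value: int = 166):
    """Hardware path, not run here: needs pyrealsense2 and a connected D400 camera."""
    import pyrealsense2 as rs

    pipeline = rs.pipeline()
    profile = pipeline.start()  # default streams
    device = profile.get_device()
    sensors = {
        "depth": device.first_depth_sensor(),
        # Assumed name of the D400 color module; verify on your device.
        "color": next(s for s in device.query_sensors()
                      if s.get_info(rs.camera_info.name) == "RGB Camera"),
    }
    for role, option_name, value in exposure_plan(color_value):
        sensors[role].set_option(getattr(rs.option, option_name), value)
    return pipeline  # caller should call pipeline.stop() when finished
```

Because auto exposure is turned off on both sensors, changes in scene brightness are no longer compensated. The chosen value therefore has to suit the lighting, which is the trade-off behind the black image at 85.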

Hi @fisakhan, do you require further assistance with this case, please? Thanks!

I'm still struggling with that problem. Changing the FPS or exposure didn't solve it.

@fisakhan Were you able to test the earlier suggestion of enforcing a constant FPS?

I tested 30 and 15 FPS, but it doesn't work.

Hi @fisakhan, I went over your script carefully again. It is essentially the align_depth2color example script with the comments removed and a couple of edits at the end, so it may be useful to focus on the edits in your own script.

```python
cv2.namedWindow('Align Example', cv2.WINDOW_NORMAL)
```

And this single line is inserted underneath the cv2.namedWindow instruction:

If the original align_depth2color example script works without blurring during fast motion, this would suggest that the problem is occurring in one of the sections that was modified.

Thanks @MartyG-RealSense. After running the original align_depth2color example without any changes, I can see some improvement for low to medium movement of the object, but fast motion still introduces some blur and alignment problems.

As long as the RGB sensor on the D435 model has a rolling shutter that is slower than the fast global shutter available to the depth image, achieving depth-to-color alignment with fast motion may be problematic. The YouTube video linked below shows a D435 attached to a vehicle moving at full speed. It demonstrates the capture speed that is supported when using the depth stream only.

https://www.youtube.com/watch?v=OwJmCyAn3JQ

Perhaps you could try aligning the depth image to the infrared image instead, since infrared and depth originate from the same sensor. Point 2 of the section of Intel's camera tuning guide linked to below provides more information about the benefits of using the left infrared sensor due to its natural alignment with depth. Alternatively, drop color and use a pure depth image if the color element is not vital.

As alignment is a CPU-intensive operation, you could also check in Ubuntu whether CPU usage is high during alignment with the htop command. https://en.wikipedia.org/wiki/Htop

If your Ubuntu computer is a desktop machine and does not already have an Nvidia GPU, you could add one. librealsense built with CUDA support could then use it to accelerate alignment operations.
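
Here is a minimal sketch of the infrared route with pyrealsense2, assuming a D400 camera. It streams depth and the left infrared image (stream index 1) at the same resolution and uses them pixel for pixel, with no `rs.align` step. The 848x480 at 30 FPS mode is an assumed choice. The helper names are hypothetical, and the masking step (keeping IR pixels closer than a threshold) only illustrates working on the shared pixel grid.

```python
def mask_ir_by_depth(depth, ir, max_depth):
    """Keep IR pixels whose depth is valid (non-zero) and below max_depth.

    Indexes both images with the same (row, col) because depth is computed in
    the left imager's frame, so no alignment step is applied here.
    """
    return [
        [i if 0 < d < max_depth else 0 for d, i in zip(d_row, i_row)]
        for d_row, i_row in zip(depth, ir)
    ]


def grab_masked_ir(max_depth_m=1.0, width=848, height=480, fps=30):
    """Hardware path, not run here: needs numpy, pyrealsense2 and a camera."""
    import numpy as np
    import pyrealsense2 as rs

    config = rs.config()
    config.enable_stream(rs.stream.depth, width, height, rs.format.z16, fps)
    # Stream index 1 selects the left infrared imager.
    config.enable_stream(rs.stream.infrared, 1, width, height, rs.format.y8, fps)
    pipeline = rs.pipeline()
    profile = pipeline.start(config)
    try:
        # Depth scale is metres per depth unit; convert the threshold to units.
        scale = profile.get_device().first_depth_sensor().get_depth_scale()
        frames = pipeline.wait_for_frames()
        depth = np.asanyarray(frames.get_depth_frame().get_data()).tolist()
        ir = np.asanyarray(frames.get_infrared_frame(1).get_data()).tolist()
        return mask_ir_by_depth(depth, ir, max_depth_m / scale)
    finally:
        pipeline.stop()
```

The trade-off is that the infrared image is monochrome. This route helps only if color information is not essential to the task.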

Hi @fisakhan, do you require further assistance with this case, please?

Case closed due to no further comments received.

Issue Description

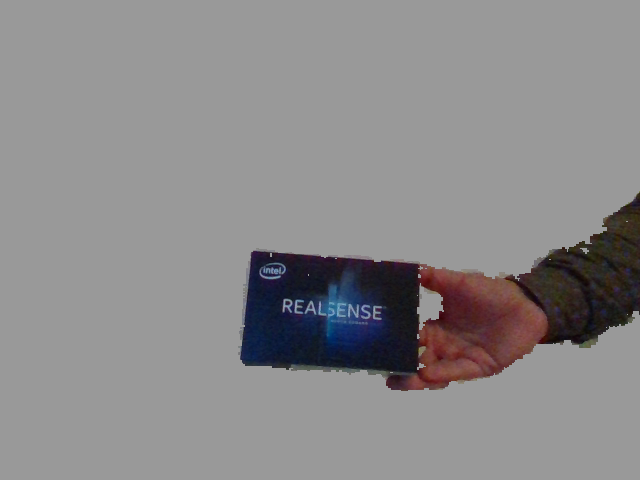

During fast movement of an object, the RealSense D435 fails to accurately align the color and depth frames. With the given Python code, I can successfully align color and depth frames for a static or slowly moving object. However, for a fast-moving object (with the camera fixed), the color frame seems to lag behind the depth frame. The first picture shows the aligned frames while the object is static. The next three pictures show upward, downward and leftward movements, respectively. It is apparent that the display or processing of the color frame is slow or lagging. How can I solve this alignment problem?
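
One way to check whether the color frames are actually captured later than the depth frames, rather than only displayed later, is to log the timestamps of the two frames in each frameset. The sketch below uses pyrealsense2 with 640x480 depth and color at 30 FPS, which are assumed settings. `collect_timestamp_pairs` and `lag_stats` are hypothetical helpers. The sketch also assumes that both frames report timestamps in the same domain, which can be checked with `frame.get_frame_timestamp_domain()`.

```python
from statistics import mean


def lag_stats(timestamp_pairs):
    """Return (mean, largest-magnitude) color-minus-depth lag in milliseconds."""
    lags = [color_ts - depth_ts for depth_ts, color_ts in timestamp_pairs]
    return mean(lags), max(lags, key=abs)


def collect_timestamp_pairs(n_frames=100):
    """Hardware path, not run here: needs pyrealsense2 and a connected camera."""
    import pyrealsense2 as rs

    config = rs.config()
    config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)
    config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)
    pipeline = rs.pipeline()
    pipeline.start(config)
    pairs = []
    try:
        for _ in range(n_frames):
            frames = pipeline.wait_for_frames()
            depth, color = frames.get_depth_frame(), frames.get_color_frame()
            if depth and color:
                # get_timestamp() returns milliseconds.
                pairs.append((depth.get_timestamp(), color.get_timestamp()))
    finally:
        pipeline.stop()
    return pairs
```

The timestamp lag may stay near zero even though fast-moving objects still look offset. That result would point to differences in the exposure windows rather than to delayed frame delivery.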
