
FileStreamResult very slow #6045

Comments

|

On a local box you are doing both the send and the receive, plus both the disk load and the disk save; 17MB/s is a network transfer rate of 136Mbit/s. Are you running in Release mode? Is the file being virus scanned (#6042 (comment)) on both load and save? Are you downloading directly, or via a browser that does its own secondary scanning, etc.? |
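For reference, the MB/s to Mbit/s conversions used throughout this thread are just a factor of 8. A minimal C# sketch, assuming decimal units (1 MB = 10^6 bytes, 1 Mbit = 10^6 bits) and ignoring protocol overhead; the helper names are illustrative only:

```csharp
using System;

// Decimal units as used in the thread: 8 bits per byte, no protocol overhead.
double MegabytesToMegabits(double megabytesPerSecond) => megabytesPerSecond * 8;
double MegabitsToMegabytes(double megabitsPerSecond) => megabitsPerSecond / 8;

Console.WriteLine($"17 MB/s     = {MegabytesToMegabits(17)} Mbit/s");   // reported download rate
Console.WriteLine($"159 MB/s    = {MegabytesToMegabits(159)} Mbit/s");  // wrk loopback figure
Console.WriteLine($"1000 Mbit/s = {MegabitsToMegabytes(1000)} MB/s");   // Gigabit Ethernet
```

So a local 17MB/s download is well under what even a single Gigabit link can carry.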

|

Direct through Kestrel, and I tried IIS and IIS Express too, all with the same results. I disabled all antivirus, tried Debug and Release mode, tried the command line and VS, and tried on a Windows 10 machine (64GB RAM, i7 6950X, SSD) and a Windows Server 2016 machine (a watercooled beast). I tried via the browser (Firefox), as that's how users will download from the website. I tested the SSD: manually copying and pasting the 1GB file takes less than a second. If I read it into memory first, I get 100MB/s if the file is < 900MB. Above that, it goes back to 17MB/s or worse (probably due to having over 1GB of data in RAM). Still, it should download well faster than that. I can download files from the other side of the world on a loaded server at 210MB/s, so locally I should be seeing practically SSD speeds, or at worst 200MB/s, not 17. |

|

Does setting up the |

|

I'm also using the very latest VS and dotnet 1.1.1 |

|

Setting it up as `new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.ReadWrite, 1, FileOptions.Asynchronous | FileOptions.SequentialScan);` slows it down to 7MB/s. Increasing the buffer to 10240 raises it to 39MB/s, so we are getting somewhere. The problem is how it reads the file from the disk; yet reading the entire file from that same stream using CopyTo is near-instant (as fast as reading it from the SSD), whereas passing that same stream into FileStreamResult gives much worse performance. |
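To see why read granularity matters so much, here is a minimal sketch (not the FileStreamResult implementation) that copies a stream through a caller-chosen buffer and counts the Read calls. A MemoryStream stands in for the file, so it only illustrates the call-count effect; it does not reproduce FileStream's internal buffering or disk behaviour. The helper name is hypothetical:

```csharp
using System;
using System.IO;

// Copy source to destination through a buffer of the given size and return
// how many non-empty Read calls were needed. Every call carries fixed overhead,
// so a 1-byte buffer turns a 1 MB copy into about a million calls.
int CopyCountingReads(Stream source, Stream destination, int bufferSize)
{
    var buffer = new byte[bufferSize];
    int readCalls = 0;
    int bytesRead;
    while ((bytesRead = source.Read(buffer, 0, buffer.Length)) > 0)
    {
        readCalls++;
        destination.Write(buffer, 0, bytesRead);
    }
    return readCalls;
}

// 1 MB of zeros stands in for the file being served.
var payload = new byte[1024 * 1024];
foreach (int size in new[] { 1, 4096, 10240, 65536 })
{
    int calls = CopyCountingReads(new MemoryStream(payload), Stream.Null, size);
    Console.WriteLine($"buffer {size,6} bytes -> {calls,8} Read calls");
}
```

The copy loop is the same shape as what Stream.CopyTo does internally; only the buffer size changes between runs.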

|

Just to get the maximum throughput of your loopback, could you run NTttcp? You want to extract the exe, then in two command windows enter the …; then, for the client, …; and you should get output similar to: |

|

On the Windows 10 machine: (NTttcp results: per-thread time and throughput, totals, frame size, buffers, DPC/interrupt counts, packets sent/received, retransmits, errors, Avg. CPU %; values not preserved). On Server 2016: (same NTttcp results; values not preserved). Both max out at 92MB/s download with the 102400 buffer on the stream, yet the server is capable of 730MB/s. |

|

Running over the internet (on a fiber line, no less), the speed is insanely slow: 1MB/s. So it gets even worse when going over the internet. |

|

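What if you go a bit more wild?

```csharp
using System.IO.MemoryMappedFiles;
using System.Threading.Tasks;
using Microsoft.AspNetCore.StaticFiles; // FileExtensionContentTypeProvider

// Inside a controller action:
new FileExtensionContentTypeProvider().TryGetContentType("test.m4v", out string contentType);

Response.Headers["Content-Disposition"] = "attachment; filename=\"movie.m4v\"";

// Map the file into memory instead of reading it through a buffered FileStream.
var mmf = MemoryMappedFile.CreateFromFile(@"C:\somemovie.m4v");

// Dispose the mapping (and release the file) once the response has completed.
Response.OnCompleted(state =>
{
    ((MemoryMappedFile)state).Dispose();
    return Task.CompletedTask;
}, mmf);

return new FileStreamResult(mmf.CreateViewStream(), contentType);
```

|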

|

It runs at around 70MB/s for 1-2 seconds, then pauses for 3-4 seconds, and repeats over and over, similar to the large-buffer issue, so it averages back out to the same speed. |

|

You have server GC on? |

|

Out of the box web template from VS. How do I enable/disable it? |

|

Just for curiosity, why is the buffer size 4KB in FileStreamResultExecutor and 64KB in StaticFileContext? |

|

OK, so I found a culprit. Kaspersky had created a network adapter that it was routing everything through. Removing that gave improvements. Original code: 38MB/s. However, on the Windows Server 2016 machines, which have faster loopbacks, I get far less improvement. They didn't have Kaspersky installed, and I disabled Defender, but that changed nothing. The servers are worse: Original code: 14MB/s. I've tested this on 2 different Windows Server 2016 machines with identical results, and on my faster Windows 10 machine (using the memory-mapped or async file). I have only tested on one Windows 10 PC; I'll test on another tomorrow. So even on my Windows 10 dev machine, 127MB/s is half the speed the loopback is capable of, and on the servers it's 21x slower than the loopback is capable of. The other bug with the memory-mapped approach is that Response.OnCompleted never fires at all, so after running it once the file stays locked by the previous memory-mapped file, and obviously memory usage builds up too. |

|

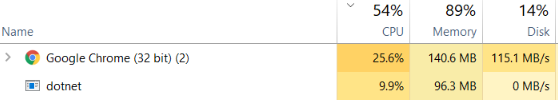

You can try using the TwoGBFileStream to test without file IO and see what that does, and also OverlappedFileStreamResult from AspNetCore.Ben.Mvc to see what overlapping reads and writes do with a larger buffer size (usage is in the samples). I didn't see much difference between them myself, with Chrome being the main bottleneck (at 100MB/s) on loopback, but it may help isolate the issue... |

|

Using wrk from WSL (Bash on Ubuntu on Windows 10), both types come out about the same for me: 159MB/s, which is about 1.27 Gbit/s on the network/loopback. |

|

Only a short test on the Windows 10 machine so far... wrk for Bash doesn't seem to be there any more. I've got developer mode on, installed Bash, and can run it, but typing wrk fails because it's not a command: luke@LUKE-M:/mnt/c/Windows/System32$ wrk. I spun up an Ubuntu Docker container instead, but that doesn't have wrk either. I'll do the tests with OverlappedFileStream and both on the Windows Server machines (which had much worse performance originally) and see what I get. |

|

So on the servers, a totally different result (tested: original, with a 102400 buffer, with TwoGBFileStream, with overlapped). Then I ran all this again without going through IIS (so Kestrel directly from the command line): original, with a 102400 buffer, with TwoGBFileStream, with overlapped. So it seems Server 2016 has totally different bottlenecks; however, in every test so far, Server 2016 (which is what it will run on) cannot get above 40-50MB/s, even when not reading from disk. I ran this test on a local watercooled server and on a server on Amazon AWS, two totally different specs, both very powerful, with identical results. I did notice that on the servers the bottleneck seemed to be the IIS worker and the browser, both reaching 35% CPU. Going straight through Kestrel, the bottleneck is still the browser. Yet the same setup running on Windows 10 downloads at 210MB/s, and the browser's CPU is only 8%. What's worse, accessing those Server 2016 websites from anywhere but locally (i.e. over the internet), the download speed is between 300kb/s and 1MB/s max. The server processes show no CPU usage (3-4% max) and neither does the client, so there is another bottleneck in that path too. |

You have to install it following the Linux steps: https://github.com/wg/wrk/wiki/Installing-Wrk-on-Linux

That's more likely due to the nature of the TCP protocol and the round-trip time. If you want to optimize for large file transfers on a single connection with round-trip latency, at the expense of more memory consumed, you will have to tune a few things. If you are on the Windows Server 2016 Anniversary Update you'll already have the Initial Congestion Window at 10 MSS; otherwise you may want to increase it with the following 3 PowerShell commands (Windows Server 2012+):

```powershell
New-NetTransportFilter -SettingName Custom -LocalPortStart 80 -LocalPortEnd 80 -RemotePortStart 0 -RemotePortEnd 65535
New-NetTransportFilter -SettingName Custom -LocalPortStart 443 -LocalPortEnd 443 -RemotePortStart 0 -RemotePortEnd 65535
Set-NetTCPSetting -SettingName Custom -InitialCongestionWindow 10 -CongestionProvider CTCP
```

On Windows Server 2016 you should be able to go up to `-InitialCongestionWindow 64`; however, you probably don't want to go too high. You can also configure the amount Kestrel will put on the wire before awaiting (defaults to 64KB), e.g.:

```csharp
.UseKestrel(options =>
{
    // 3 * 1MB = 3MB
    options.Limits.MaxResponseBufferSize = 3 * 1024 * 1024;
})
```

Then you want to check the network adapter settings and bump up anything like: You may also want to adjust other settings, like your TCP receive window (assuming you've done the earlier ones), e.g.:

```powershell
Set-NetTCPSetting -SettingName Custom -AutoTuningLevelLocal Experimental -InitialCongestionWindow 10 -CongestionProvider CTCP
```

But this is more about TCP tuning than the HTTP server itself... |
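Why the initial congestion window matters for a single large download can be seen with a back-of-the-envelope model. The sketch below is an idealized slow start (the window doubles every round trip, no loss, no receive-window limit); it is not how Windows' CTCP provider actually behaves, and the 1460-byte MSS and 625,000-byte target window are assumed values for illustration:

```csharp
using System;

// Idealized TCP slow start: the congestion window starts at
// initialWindowSegments * mss bytes and doubles every round trip (no loss,
// no receive-window limit). Returns how many round trips pass before the
// window reaches targetBytes.
int SlowStartRoundTrips(int initialWindowSegments, int mss, long targetBytes)
{
    long window = (long)initialWindowSegments * mss;
    int roundTrips = 0;
    while (window < targetBytes)
    {
        window *= 2;
        roundTrips++;
    }
    return roundTrips;
}

// Assumed: 1460-byte MSS and a 625,000-byte target window, which is what a
// 100 Mbit/s path with a 50 ms RTT needs to stay full.
const int Mss = 1460;
const long Target = 625_000;
foreach (int iw in new[] { 2, 4, 10, 64 })
    Console.WriteLine($"IW {iw,2} segments -> {SlowStartRoundTrips(iw, Mss, Target)} round trips");
```

Each round trip saved at the start of a transfer matters most on high-latency paths; on loopback the RTT is so small that this effect largely disappears.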

|

Ok I'll try all that, but we still have the underlying issue that the speed is slow when actually reading a file? |

|

As @Yves57 pointed out earlier, the buffer size is likely too small at 4KB per read; Kestrel will only put 3 uncompleted writes on the wire, so that may be 12KB max on the wire using … Also it uses … |
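Taking the figures in the comment above at face value (4KB per read, at most 3 uncompleted writes), the arithmetic for a 1 GiB file looks like this. A minimal sketch: the "3 outstanding writes" number comes from the comment and is a parameter here, not something the code checks against Kestrel, and the helper names are hypothetical:

```csharp
using System;

// Number of reads needed to consume a file with a fixed buffer (ceiling division).
long ReadsNeeded(long fileBytes, int bufferSize) => (fileBytes + bufferSize - 1) / bufferSize;

// Most data that can be queued if only N writes of bufferSize are outstanding.
long MaxQueuedBytes(int outstandingWrites, int bufferSize) => (long)outstandingWrites * bufferSize;

const long OneGiB = 1L << 30;
foreach (int buffer in new[] { 4 * 1024, 64 * 1024 })
{
    Console.WriteLine(
        $"{buffer / 1024} KB buffer: {ReadsNeeded(OneGiB, buffer)} reads per GiB, " +
        $"at most {MaxQueuedBytes(3, buffer) / 1024} KB queued with 3 outstanding writes");
}
```

Going from 4KB to 64KB cuts the number of reads by 16x and raises the queued ceiling from 12KB to 192KB under the same assumption.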

|

Re: garbage collection: if you are using a project.json project, ensure it has …, and if you are using the newer csproj, then the … |
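One way to answer "do you have server GC on?" from inside the app is to ask the runtime. A minimal sketch using `GCSettings.IsServerGC`; it only reports the mode the process is running with, it does not change it (that is done through project or runtime configuration). The helper name is illustrative:

```csharp
using System;
using System.Runtime;

// Reports which GC flavour the current process is actually using, which is a
// quick way to confirm whether a server-GC setting took effect.
string DescribeGc() =>
    $"{(GCSettings.IsServerGC ? "Server" : "Workstation")} GC, " +
    $"latency mode {GCSettings.LatencyMode}, {Environment.ProcessorCount} logical processors";

Console.WriteLine(DescribeGc());
```

Logging this once at startup makes it easy to confirm the setting on each machine being compared.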

|

Also you can try |

|

SendFile no longer works on IIS since it isn't in-process. It does work with WebListener, though. |

|

@angelsix, as an aside to whether …, other options to consider for serving the files are: adding ResponseCaching if you have memory to spare; the StaticFileMiddleware; or getting IIS to serve the static files directly. The latter two are covered in @RickStrahl's blog: More on ASP.NET Core Running under IIS |

|

More generally, I was asking myself why all |

Yeah, I was thinking that when making the test overlapped version; its methods should either be virtual, or it should register with an interface, e.g. |

|

OK, so by increasing the file stream buffer to 64KB using `new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.ReadWrite, 65536, FileOptions.Asynchronous | FileOptions.SequentialScan);` I get an acceptable speed: around 120-150MB/s, stable, on Windows 10. I'd like to see more, but I think that will do for now. Changing the max response buffer in Kestrel did nothing, and none of the other suggestions improved on this speed either, so this seems to be the fastest possible right now. Using the TwoGBFileStream direct from RAM reached the local link limit on Windows 10, so I think the bottleneck in the current situation is still reading the file stream: no suggestion so far has gained us more than 150MB/s, and I know the network can reach at least the 230MB/s we see with the TwoGBFileStream. I'm moving on to trying to fix the Windows Server 2016 issues now. Nothing I've done so far can get files to transfer faster than 58MB/s locally, and I cannot get files to transfer faster than 1MB/s over the internet, even on a fresh Amazon AWS 2016 server with my fiber internet, so there are definitely issues there. |
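A rough way to look at the file-read side of this outside ASP.NET is to time a copy from a FileStream opened with the same options into `Stream.Null`, next to an in-memory baseline in the spirit of TwoGBFileStream. This is a sketch assuming modern .NET (`await using`, top-level statements), not the .NET Core 1.1 setup from the thread; the 32 MB file size and helper names are assumptions, and because the temp file was just written, the OS cache will likely make the file numbers optimistic:

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.Threading.Tasks;

// Copy a source stream to Stream.Null with the given buffer size and report
// bytes copied and throughput in MB/s (10^6 bytes). Only reads are exercised.
async Task<(long Bytes, double MBps)> TimeCopyAsync(Stream source, int bufferSize)
{
    var sw = Stopwatch.StartNew();
    long start = source.Position;
    await source.CopyToAsync(Stream.Null, bufferSize);
    sw.Stop();
    long copied = source.Position - start;
    double seconds = Math.Max(sw.Elapsed.TotalSeconds, 1e-9);
    return (copied, copied / 1_000_000.0 / seconds);
}

// Opens the file with the same options as in the comment above.
FileStream OpenForServing(string path, int bufferSize) =>
    new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.ReadWrite,
        bufferSize, FileOptions.Asynchronous | FileOptions.SequentialScan);

string path = Path.GetTempFileName();
var data = new byte[32 * 1024 * 1024];
new Random(42).NextBytes(data);
await File.WriteAllBytesAsync(path, data);
try
{
    var memory = await TimeCopyAsync(new MemoryStream(data), 65536);
    Console.WriteLine($"memory     : {memory.MBps:F0} MB/s");
    foreach (int size in new[] { 4096, 65536 })
    {
        await using var file = OpenForServing(path, size);
        var result = await TimeCopyAsync(file, size);
        Console.WriteLine($"file {size,6}: {result.MBps:F0} MB/s");
    }
}
finally
{
    File.Delete(path);
}
```

If the file numbers here are far above what the browser sees, the bottleneck is more likely in the response path than in the disk read.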

|

The changes for TCP/Kestrel response buffers would be for network rather than loopback |

|

Ah ok I'll add that back and do some internet speed testing too |

|

Also, any suggestions on improving the localhost/127.0.0.1 loopback speed on Windows 10? Out of the box, all my machines top out at 180MB/s. I read that Windows 7 had NetDMA, which could easily reach 800MB/s, but it was removed in Windows 8 and later, with Fast TCP, which isn't natively supported and has to be supported in software, so regular TCP loopback tests still top out at 180MB/s? I think that's the limit I'm reaching on Windows 10 when running Kestrel directly. Never mind, I just realised this would be kind of pointless anyway, as the end purpose will be over real Ethernet, at best 1Gbit/s, which is 125MB/s anyway, so I'm above the speed I need on the Windows 10 machines at least. I just need to solve the issue with the Server 2016 machines. |

|

IIS is still limited to 10 simultaneous connections on Windows. It won’t blow up and throw errors when it goes over, as it used to, but from what I understand it will not create more than 10 connections. You can generate a ton of traffic with those 10 connections, but there’s an upper limit. I’m using WebSurge and topping out at 30,000 requests with IIS, unless I get into kernel-cached content, where I can get to 75,000 plus on the local machine (because that somehow appears to bypass IIS, or perhaps it’s just serving that much faster from the kernel cache).

+++ Rick ---

From: angelsix [mailto:[email protected]]

Sent: Sunday, April 2, 2017 9:05 AM

To: aspnet/Mvc <[email protected]>

Cc: Rick Strahl <[email protected]>; Mention <[email protected]>

Subject: Re: [aspnet/Mvc] FileStreamResult very slow (#6045)

OK, so although the server is still slower, I don't know why it didn't dawn on me before, but I had never checked running the site through full IIS on the Windows 10 machine, the quicker one. I've just done that, and IIS on Windows 10 is also slow: not quite as slow as the server, but still slow. So I'd say IIS itself is making things a lot slower. I've played with all the settings I can find, with no change.

| Host | Windows 10 (MB/s) | Windows Server (MB/s) |
|---|---|---|
| Kestrel | 174 | 48 |
| IIS | 79 | 38 |

I've tried:

* Toggling TCP Fast Open

* Toggling ECN

* Changing buffer size for kestrel response

* Changing the number of worker processes in the app pool

Any ideas why IIS is so much slower and how to improve it?


|

|

I don't think that relates to my issue, though. I run Kestrel through IIS on a new 2016 server with no other connections, just one to download a file, and it maxes out at 38MB/s on a machine that tests capable of TCP traffic up to 125MB/s. Also, the exact same code runs on Windows 10 in IIS at 75MB/s, so totally different results. Then remove IIS on Windows 10 and you get 125MB/s, versus 58MB/s on Server 2016. |

|

@jbagga - can you take a look? |

|

On Windows 10 x64, I downloaded video content of size 987MB using the code here. I tried ASP.NET Core 1.1 and the current dev branch (there have been recent changes as part of this PR which I thought might affect the results, like the use of …). Note: When … Conclusions:

cc @Eilon |

|

/cc @pan-wang @shirhatti |

|

A few takeaways from the experiment done by @jbagga

|

|

#6347 results in the expected speed of ~60 MB/s for IIS and ~134 MB/s for Kestrel, for the same resource as in my comment above. |

|

I think what we have here is a great improvement, so I think it's ok to close this bug. @angelsix - please let us know if you have any further feedback on this change. |

|

Thanks, I will test this coming week, but I expect to see the same improvements everyone else has. Thanks for looking into this. |

|

Is there any chance of this fix getting into ASP.NET Core 2.0? I am writing a middleware that looks at Content-Length after a response has finished and logs some statistics about certain file-serving requests to a database; Content-Length not being present except for Range requests (which explicitly set it as part of handling ranges) limits its usefulness. (In addition, it would speed up the site when run with Visual Studio Code attached as a debugger, where in my testing sending a 26 MB file with FileStreamResult takes on the order of ~30 seconds, compared to 0.6 seconds (50x faster) when running the same unoptimized Debug build in the Development environment without a debugger attached. This is not the primary reason, and it's probably more the fault of Visual Studio Code or the C# extension, but I can't deny it would help with this too.) |

|

@JesperTreetop https://github.com/aspnet/Mvc/blob/dev/src/Microsoft.AspNetCore.Mvc.Core/Internal/FileResultExecutorBase.cs#L91 |

|

@jbagga That's exactly the line I want in 2.0. The same file in 2.0.0 preview 2 (which I'm currently using) doesn't have it (SetContentLength is only called from SetRangeHeaders which of course is only called for range requests) and therefore also does not have this fix. As long as 2.0 is being spun from the dev branch, I'm guessing this fix will make its way in, but I don't know if 2.0 comes from the dev branch or by manually porting fixes to some sort of continuation of the 2.0.0-preview2 tag, which seems like something Microsoft would do with higher bug bars ahead of releases. |
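The change discussed here sets Content-Length on ordinary (non-range) file responses. As a rough illustration of when that is possible at all, here is a hypothetical helper (not the MVC source): a length can only be advertised up front when the stream can report its size, otherwise an HTTP/1.1 response typically falls back to chunked transfer encoding:

```csharp
using System;
using System.IO;
using System.IO.Compression;

// Hypothetical helper (not the MVC source): the Content-Length a non-range
// response could advertise for a stream, or null when the size is unknown.
long? ContentLengthFor(Stream stream) =>
    stream.CanSeek ? stream.Length - stream.Position : (long?)null;

var seekable = new MemoryStream(new byte[26]);
seekable.Position = 6;
var nonSeekable = new GZipStream(new MemoryStream(), CompressionMode.Compress);

Console.WriteLine(ContentLengthFor(seekable)?.ToString() ?? "unknown");
Console.WriteLine(ContentLengthFor(nonSeekable)?.ToString() ?? "unknown");
```

A FileStream is seekable, so for the file downloads in this thread the length is known before the first byte is sent.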

|

@pranavkm Wonderful - thanks! |

|

@jbagga I have tried changing the buffer size and other settings, and changing the .NET version from 1.1 to 2.0, but I am getting a maximum of 20 MB/sec when downloading the file. |

|

@chintan3100 I don't have a repo, but I did see massive slowdown when running with a debugger attached from VS Code. Running without a debugger attached ( |

|

@JesperTreetop: I have checked on Azure in Release mode with an S3 plan, and tested on an Azure VM. |

|

@chintan3100 there are other factors too: how fast can you read from the disk in that setup? Is it faster than 20MB/s? Can you save to disk faster than 20MB/s on the client? 20MB/s is 160 Mbps; is the bandwidth capped by either the receiver or the server? Etc. Also, what is the RTT latency between client and server? That will determine the maximum throughput, due to the TCP bandwidth-delay product. |
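The bandwidth-delay product mentioned above is simple to work out: bandwidth times round-trip time gives the bytes that must be in flight to keep the path full, and conversely a fixed window divided by the RTT caps throughput. A minimal sketch with integer milliseconds; the RTT values and helper names are illustrative assumptions:

```csharp
using System;

// Bandwidth-delay product: bytes that must be in flight (unacknowledged) to
// keep a path busy at the given bandwidth and round-trip time.
long BdpBytes(long bitsPerSecond, long rttMs) => bitsPerSecond / 8 * rttMs / 1000;

// The inverse: the best throughput (bytes/s) a fixed window allows at an RTT.
long MaxBytesPerSecond(long windowBytes, long rttMs) => windowBytes * 1000 / rttMs;

// 20 MB/s = 160 Mbit/s, the figure from the comment above.
foreach (long rtt in new long[] { 1, 10, 50, 100 })
    Console.WriteLine($"RTT {rtt,3} ms: need {BdpBytes(160_000_000, rtt):N0} bytes in flight for 160 Mbit/s");

// A 64 KB window on a 50 ms path.
Console.WriteLine($"64 KB window @ 50 ms: {MaxBytesPerSecond(64 * 1024, 50):N0} bytes/s");
```

At 50 ms, a 64 KB window allows only about 1.3 MB/s no matter how fast the server or disk is, which is why RTT is worth measuring before tuning the app.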

|

I tested the same API with .NET Framework 4.0: it gets 150 MB/sec download speed, whereas on .NET Core it is 20 MB/sec. |

|

@chintan3100 Posting the code you're using for the action in both ASP.NET MVC 5 and ASP.NET Core 2 would probably help diagnosing this, at least how the action result is constructed. |

```csharp
// Inside a Controller class; requires using System.IO;
public ActionResult Index()
{
    // 64KB buffer with asynchronous, sequential-scan reads.
    var stream = new FileStream(@"Your file path", FileMode.Open, FileAccess.Read, FileShare.ReadWrite, 65536, FileOptions.Asynchronous | FileOptions.SequentialScan);
    return File(stream, "application/octet-stream", "aa.zip");
}
```

Please run the same code in a new .NET Core project and in an ASP.NET project. This is what I am using in both ASP.NET MVC 5 and .NET Core 2.0. |

On a local machine, create a brand-new ASP.NET Core website.

In any controller action do:

This will pop up a download save dialog on request. The local SSD can do 1800MB/s. The ASP.NET Core download tops out at 17MB/s.
