
Updated documentation for models section #1567

base: master


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,110 +1,41 @@ | ||

| # Models | ||

|

|

||

| ## 1. Concept | ||

| The model is the brain of the intelligent agent, responsible for processing all input and output data. By calling different models, the agent can execute operations such as text analysis, image recognition, or complex reasoning according to task requirements. CAMEL offers a range of standard and customizable interfaces, as well as seamless integrations with various components, to facilitate the development of applications with Large Language Models (LLMs). In this part, we will introduce models currently supported by CAMEL and the working principles and interaction methods with models. | ||

|

|

||

| All the codes are also available on colab notebook [here](https://colab.research.google.com/drive/18hQLpte6WW2Ja3Yfj09NRiVY-6S2MFu7?usp=sharing). | ||

|

|

||

|

|

||

| ## 2. Supported Model Platforms | ||

|

|

||

| The following table lists currently supported model platforms by CAMEL. | ||

|

|

||

| | Model Platform | Available Models| Multi-modality | | ||

| | ----- | ----- | ----- | | ||

| | OpenAI | gpt-4o | Y | | ||

| | OpenAI | gpt-4o-mini | Y | | ||

| | OpenAI | o1 | Y | | ||

| | OpenAI | o1-preview | N | | ||

| | OpenAI | o1-mini | N | | ||

| | OpenAI | gpt-4-turbo | Y | | ||

| | OpenAI | gpt-4 | Y | | ||

| | OpenAI | gpt-3.5-turbo | N | | ||

| | Azure OpenAI | gpt-4o | Y | | ||

| | Azure OpenAI | gpt-4-turbo | Y | | ||

| | Azure OpenAI | gpt-4 | Y | | ||

| | Azure OpenAI | gpt-3.5-turbo | Y | | ||

| | OpenAI Compatible | Depends on the provider | ----- | | ||

| | Mistral AI | mistral-large-2 | N | | ||

| | Mistral AI | pixtral-12b-2409 | Y | | ||

| | Mistral AI | ministral-8b-latest | N | | ||

| | Mistral AI | ministral-3b-latest | N | | ||

| | Mistral AI | open-mistral-nemo | N | | ||

| | Mistral AI | codestral | N | | ||

| | Mistral AI | open-mistral-7b | N | | ||

| | Mistral AI | open-mixtral-8x7b | N | | ||

| | Mistral AI | open-mixtral-8x22b | N | | ||

| | Mistral AI | open-codestral-mamba | N | | ||

| | Moonshot | moonshot-v1-8k | N | | ||

| | Moonshot | moonshot-v1-32k | N | | ||

| | Moonshot | moonshot-v1-128k | N | | ||

| | Anthropic | claude-3-5-sonnet-latest | Y | | ||

| | Anthropic | claude-3-5-haiku-latest | N | | ||

| | Anthropic | claude-3-haiku-20240307 | Y | | ||

| | Anthropic | claude-3-sonnet-20240229 | Y | | ||

| | Anthropic | claude-3-opus-latest | Y | | ||

| | Anthropic | claude-2.0 | N | | ||

| | Gemini | gemini-2.0-flash-exp | Y | | ||

| | Gemini | gemini-1.5-pro | Y | | ||

| | Gemini | gemini-1.5-flash | Y | | ||

| | Gemini | gemini-exp-1114 | Y | | ||

| | Lingyiwanwu | yi-lightning | N | | ||

| | Lingyiwanwu | yi-large | N | | ||

| | Lingyiwanwu | yi-medium | N | | ||

| | Lingyiwanwu | yi-large-turbo | N | | ||

| | Lingyiwanwu | yi-vision | Y | | ||

| | Lingyiwanwu | yi-medium-200k | N | | ||

| | Lingyiwanwu | yi-spark | N | | ||

| | Lingyiwanwu | yi-large-rag | N | | ||

| | Lingyiwanwu | yi-large-fc | N | | ||

| | Qwen | qwq-32b-preview | N | | ||

| | Qwen | qwen-max | N | | ||

| | Qwen | qwen-plus | N | | ||

| | Qwen | qwen-turbo | N | | ||

| | Qwen | qwen-long | N | | ||

| | Qwen | qwen-vl-max | Y | | ||

| | Qwen | qwen-vl-plus | Y | | ||

| | Qwen | qwen-math-plus | N | | ||

| | Qwen | qwen-math-turbo | N | | ||

| | Qwen | qwen-coder-turbo | N | | ||

| | Qwen | qwen2.5-coder-32b-instruct | N | | ||

| | Qwen | qwen2.5-72b-instruct | N | | ||

| | Qwen | qwen2.5-32b-instruct | N | | ||

| | Qwen | qwen2.5-14b-instruct | N | | ||

| | DeepSeek | deepseek-chat | N | | ||

| | DeepSeek | deepseek-reasoner | N | | ||

| | ZhipuAI | glm-4 | Y | | ||

| | ZhipuAI | glm-4v | Y | | ||

| | ZhipuAI | glm-4v-flash | Y | | ||

| | ZhipuAI | glm-4v-plus-0111 | Y | | ||

| | ZhipuAI | glm-4-plus | N | | ||

| | ZhipuAI | glm-4-air | N | | ||

| | ZhipuAI | glm-4-air-0111 | N | | ||

| | ZhipuAI | glm-4-airx | N | | ||

| | ZhipuAI | glm-4-long | N | | ||

| | ZhipuAI | glm-4-flashx | N | | ||

| | ZhipuAI | glm-zero-preview | N | | ||

| | ZhipuAI | glm-4-flash | N | | ||

| | ZhipuAI | glm-3-turbo | N | | ||

| | InternLM | internlm3-latest | N | | ||

| | InternLM | internlm3-8b-instruct | N | | ||

| | InternLM | internlm2.5-latest | N | | ||

| | InternLM | internlm2-pro-chat | N | | ||

| | Reka | reka-core | Y | | ||

| | Reka | reka-flash | Y | | ||

| | Reka | reka-edge | Y | | ||

| | Nvidia | https://docs.api.nvidia.com/nim/reference/llm-apis | ----- | | ||

| | SambaNova| https://community.sambanova.ai/t/supported-models/193 | ----- | | ||

| | Groq | https://console.groq.com/docs/models | ----- | | ||

| | Ollama | https://ollama.com/library | ----- | | ||

| | vLLM | https://docs.vllm.ai/en/latest/models/supported_models.html | ----- | | ||

| | Together AI | https://docs.together.ai/docs/chat-models | ----- | | ||

| | LiteLLM | https://docs.litellm.ai/docs/providers | ----- | | ||

| | SGLang | https://sgl-project.github.io/references/supported_models.html | ----- | | ||

|

|

||

| ## 3. Using Models by API calling | ||

|

|

||

| Here is an example code to use a specific model (gpt-4o-mini). If you want to use another model, you can simply change these three parameters: `model_platform`, `model_type`, `model_config_dict` . | ||

| The model is the brain of the intelligent agent, responsible for processing all input and output data to execute tasks such as text analysis, image recognition, and complex reasoning. With customizable interfaces and multiple integration options, CAMEL AI enables rapid development with leading LLMs. | ||

|

|

||

| > **Explore the Code:** Check out our [Colab Notebook](https://colab.research.google.com/drive/18hQLpte6WW2Ja3Yfj09NRiVY-6S2MFu7?usp=sharing) for a hands-on demonstration. | ||

|

|

||

|

|

||

| ## 2. Supported Model Platforms in CAMEL | ||

|

|

||

| CAMEL supports a wide range of models, including [OpenAI’s GPT series](https://platform.openai.com/docs/models), [Meta’s Llama models](https://www.llama.com/), [DeepSeek's R1](https://www.deepseek.com/), and more. The table below lists all supported model platforms: | ||

|

|

||

| | Model Platform | Model Type(s) | | ||

| |---------------|--------------| | ||

| | **OpenAI** | gpt-4o, gpt-4o-mini, o1, o1-preview, o1-mini, o3-mini, gpt-4-turbo, gpt-4, gpt-3.5-turbo | | ||

| | **Azure OpenAI** | gpt-4o, gpt-4-turbo, gpt-4, gpt-3.5-turbo | | ||

| | **Mistral AI** | mistral-large-latest, pixtral-12b-2409, ministral-8b-latest, ministral-3b-latest, open-mistral-nemo, codestral-latest, open-mistral-7b, open-mixtral-8x7b, open-mixtral-8x22b, open-codestral-mamba | | ||

| | **Anthropic** | claude-2.1, claude-2.0, claude-instant-1.2, claude-3-opus-latest, claude-3-sonnet-20240229, claude-3-haiku-20240307, claude-3-5-sonnet-latest, claude-3-5-haiku-latest | | ||

| | **Gemini** | gemini-1.5-flash, gemini-1.5-pro, gemini-exp-1114 | | ||

| | **Lingyiwanwu** | yi-lightning, yi-large, yi-medium, yi-large-turbo, yi-vision, yi-medium-200k, yi-spark, yi-large-rag, yi-large-fc | | ||

| | **Qwen** | qwq-32b-preview, qwen-max, qwen-plus, qwen-turbo, qwen-long, qwen-vl-max, qwen-vl-plus, qwen-math-plus, qwen-math-turbo, qwen-coder-turbo, qwen2.5-coder-32b-instruct, qwen2.5-72b-instruct, qwen2.5-32b-instruct, qwen2.5-14b-instruct | | ||

| | **DeepSeek** | deepseek-chat, deepseek-reasoner | | ||

| | **ZhipuAI** | glm-4v, glm-4, glm-3-turbo | | ||

| | **InternLM** | internlm3-latest, internlm3-8b-instruct, internlm2.5-latest, internlm2-pro-chat | | ||

| | **Reka** | reka-core, reka-flash, reka-edge | | ||

| | **GROQ** | llama-3.1-8b-instant, llama-3.3-70b-versatile, llama-3.3-70b-specdec, llama3-8b-8192, llama3-70b-8192, mixtral-8x7b-32768, gemma2-9b-it | | ||

| | **TOGETHER** | meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo, meta-llama/Meta-Llama-3.1-70B-Instruct-Turbo, meta-llama/Meta-Llama-3.1-405B-Instruct-Turbo, meta-llama/Llama-3.3-70B-Instruct-Turbo, mistralai/Mixtral-8x7B-Instruct-v0.1, mistralai/Mistral-7B-Instruct-v0.1 | | ||

| | **SAMBA** | Meta-Llama-3.1-8B-Instruct, Meta-Llama-3.1-70B-Instruct, Meta-Llama-3.1-405B-Instruct | | ||

| | **SGLANG** | meta-llama/Meta-Llama-3.1-8B-Instruct, meta-llama/Meta-Llama-3.1-70B-Instruct, meta-llama/Meta-Llama-3.1-405B-Instruct, meta-llama/Llama-3.2-1B-Instruct, mistralai/Mistral-Nemo-Instruct-2407, mistralai/Mistral-7B-Instruct-v0.3, Qwen/Qwen2.5-7B-Instruct, Qwen/Qwen2.5-32B-Instruct, Qwen/Qwen2.5-72B-Instruct | | ||

|

Comment on lines +28 to +29:

This list is not comprehensive. Some models are not listed in the enum, but users can still call them by directly passing a string value to |

||

| | **NVIDIA** | nvidia/nemotron-4-340b-instruct, nvidia/nemotron-4-340b-reward, 01-ai/yi-large, mistralai/mistral-large, mistralai/mixtral-8x7b-instruct, meta/llama3-70b, meta/llama-3.1-8b-instruct, meta/llama-3.1-70b-instruct, meta/llama-3.1-405b-instruct, meta/llama-3.2-1b-instruct, meta/llama-3.2-3b-instruct, meta/llama-3.3-70b-instruct | | ||

| | **COHERE** | command-r-plus, command-r, command-light, command, command-nightly | | ||

|

|

||

|

|

||

| ## 3. How to Use Models via API Calls | ||

|

|

||

| Easily integrate your chosen model with CAMEL AI using straightforward API calls. For example, to use the **gpt-4o-mini** model: | ||

|

|

||

| > If you want to use another model, you can simply change these three parameters: `model_platform`, `model_type`, `model_config_dict` . | ||

|

|

||

| ```python | ||

| from camel.models import ModelFactory | ||

|

|

@@ -127,7 +58,7 @@ system_msg = "You are a helpful assistant." | |

| ChatAgent(system_msg, model=model) | ||

| ``` | ||

|

|

||

| And if you want to use an OpenAI-compatible API, you can replace the `model` with the following code: | ||

| > And if you want to use an OpenAI-compatible API, you can replace the `model` with the following code: | ||

|

|

||

| ```python | ||

| model = ModelFactory.create( | ||

|

|

@@ -140,12 +71,12 @@ model = ModelFactory.create( | |

| ``` | ||
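
To make "OpenAI-compatible" concrete, here is a minimal standard-library sketch of the HTTP request such an endpoint typically expects. It does not use CAMEL and does not send anything over the network. The base URL, API key, and model name are placeholders chosen for illustration, and `build_chat_request` is a hypothetical helper, not part of any library.

```python
import json
from urllib.request import Request


def build_chat_request(base_url: str, api_key: str, model: str, user_text: str) -> Request:
    """Build (but do not send) a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_text},
        ],
    }
    return Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder values for illustration only; substitute your provider's details.
request = build_chat_request(
    base_url="https://example.invalid/v1",
    api_key="sk-placeholder",
    model="my-model",
    user_text="Say hi to CAMEL AI",
)
body = json.loads(request.data)
print(request.full_url)
print(body["model"], len(body["messages"]))
```

Any provider that accepts this request shape at its own base URL should, in principle, work as an OpenAI-compatible backend. Check the provider's documentation for the exact URL and model identifiers.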

|

|

||

| ## 4. Using On-Device Open Source Models | ||

| In the current landscape, for those seeking highly stable content generation, OpenAI’s gpt-4o-mini, gpt-4o are often recommended. However, the field is rich with many other outstanding open-source models that also yield commendable results. CAMEL can support developers to delve into integrating these open-source large language models (LLMs) to achieve project outputs based on unique input ideas. | ||

| CAMEL AI also supports local deployment of open-source LLMs. Choose the setup that suits your project: | ||

|

|

||

| ### 4.1 Using Ollama to Set Llama 3 Locally | ||

|

|

||

| 1. Download [Ollama](https://ollama.com/download). | ||

| 2. After setting up Ollama, pull the Llama3 model by typing the following command into the terminal: | ||

| 2. After setting up Ollama, pick a model like Llama3 for your project: | ||

|

|

||

| ```bash | ||

| ollama pull llama3 | ||

|

|

@@ -271,20 +202,21 @@ assistant_response = agent.step(user_msg) | |

| print(assistant_response.msg.content) | ||

| ``` | ||

|

|

||

| ## 5. About Model Speed | ||

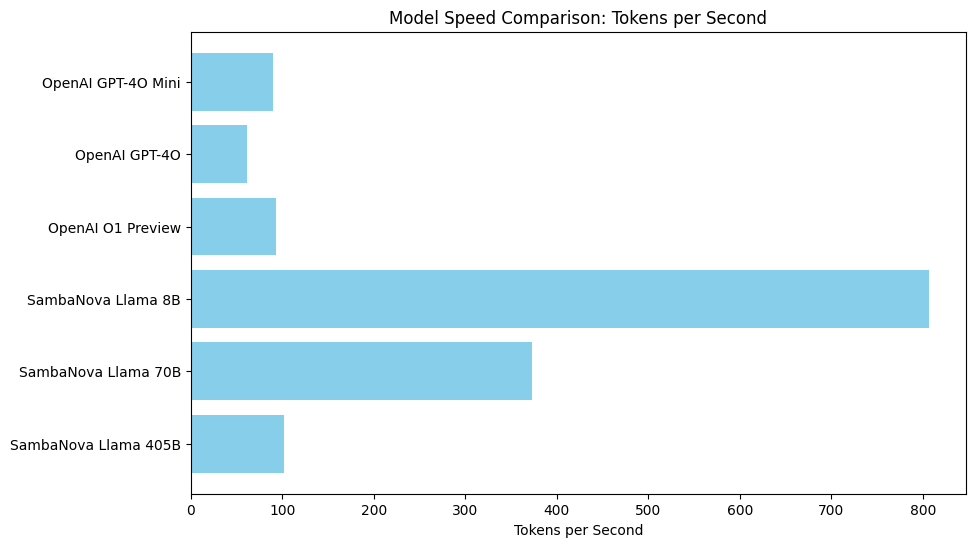

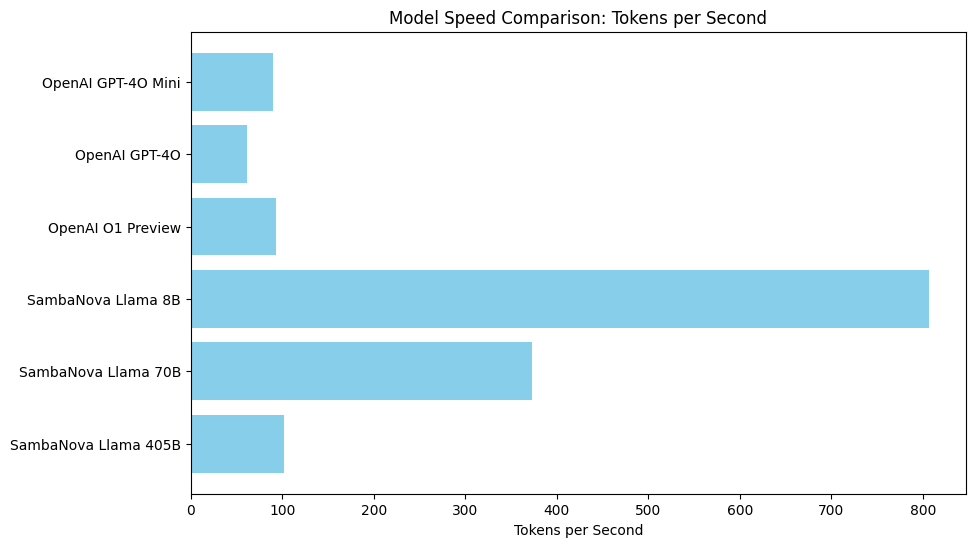

| Model speed is a crucial factor in AI application performance. It affects both user experience and system efficiency, especially in real-time or interactive tasks. In [this notebook](../cookbooks/model_speed_comparison.ipynb), we compared several models, including OpenAI’s GPT-4O Mini, GPT-4O, O1 Preview, and SambaNova's Llama series, by measuring the number of tokens each model processes per second. | ||

| ## 5. Model Speed and Performance | ||

| Performance is critical for interactive AI applications. CAMEL-AI benchmarks tokens processed per second across various models: | ||

|

|

||

| In [this notebook](../cookbooks/model_speed_comparison.ipynb), we compared several models, including OpenAI’s GPT-4O Mini, GPT-4O, O1 Preview, and SambaNova's Llama series, by measuring the number of tokens each model processes per second. | ||
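
As a rough illustration of the metric, the sketch below times a single generation call and divides the number of returned tokens by the elapsed wall-clock time. It is not the code from the linked notebook. `tokens_per_second` and `fake_generate` are hypothetical names, and the fake generator simply sleeps and splits the prompt on whitespace so the example runs without any model or API key.

```python
import time
from typing import Callable, List


def tokens_per_second(generate: Callable[[str], List[str]], prompt: str) -> float:
    """Time one call to `generate` and return output tokens per second."""
    start = time.perf_counter()
    tokens = generate(prompt)
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return len(tokens) / elapsed


def fake_generate(prompt: str) -> List[str]:
    """Stand-in for a real model call: waits briefly, then returns whitespace tokens."""
    time.sleep(0.05)
    return prompt.split()


rate = tokens_per_second(fake_generate, "one two three four")
print(f"{rate:.1f} tokens/s")
```

With a real model, `generate` would wrap the API call and return the generated tokens (or the token count reported by the provider). Averaging over several runs gives a more stable figure than a single measurement.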

|

|

||

| - OpenAI GPT-4O Mini: Fast and efficient. | ||

| - SambaNova’s Llama 405B: High capacity but slower response. | ||

| - Local Inference: SGLang reached a peak of 220.98 tokens per second, compared to vLLM’s 107.2 tokens per second. | ||

|

Comment on lines +210 to +212:

Please keep the original Key Insights part. We did not aim to compare the speed of different model platforms; the insights highlighted the relationship between model size and inference time. |

||

|

|

||

| Key Insights: | ||

| Smaller models like SambaNova’s Llama 8B and OpenAI's GPT-4O Mini typically offer faster responses. | ||

| Larger models like SambaNova’s Llama 405B, while more powerful, tend to generate output more slowly due to their complexity. | ||

| OpenAI models demonstrate relatively consistent performance, while SambaNova's Llama 8B significantly outperforms others in speed. | ||

| The chart below illustrates the tokens per second achieved by each model during our tests: | ||

|  | ||

|

|

||

|  | ||

|

|

||

| For local inference, we conducted a straightforward comparison locally between vLLM and SGLang. SGLang demonstrated superior performance, with `meta-llama/Llama-3.2-1B-Instruct` reaching a peak speed of 220.98 tokens per second, compared to vLLM, which capped at 107.2 tokens per second. | ||
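
For a sense of scale, the two peak figures quoted above can be turned into a ratio. This is plain arithmetic on the reported numbers, not a new measurement, and the variable names are illustrative.

```python
# Peak throughput figures quoted in the text, in tokens per second.
sglang_tps = 220.98
vllm_tps = 107.2

# Relative speedup of SGLang over vLLM at peak.
speedup = sglang_tps / vllm_tps
print(f"SGLang peak throughput was about {speedup:.2f}x that of vLLM")
```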

|

|

||

| ## 6. Conclusion | ||

| In conclusion, CAMEL empowers developers to explore and integrate these diverse models, unlocking new possibilities for innovative AI applications. The world of large language models offers a rich tapestry of options beyond just the well-known proprietary solutions. By guiding users through model selection, environment setup, and integration, CAMEL bridges the gap between cutting-edge AI research and practical implementation. Its hybrid approach, combining in-house implementations with third-party integrations, offers unparalleled flexibility and comprehensive support for LLM-based development. Don't just watch this transformation that is happening from the sidelines. | ||

| ## 6. Next Steps | ||

| You've now learned how to integrate various models into CAMEL AI. | ||

|

|

||

| Dive into the CAMEL documentation, experiment with different models, and be part of shaping the future of AI. The era of truly flexible and powerful AI is here - are you ready to make your mark? | ||

| Next, check out our guide covering the basics of creating and converting [messages with BaseMessage](https://docs.camel-ai.org/key_modules/messages.html). | ||

Review comment:

For DeepSeek, not only R1 is supported.