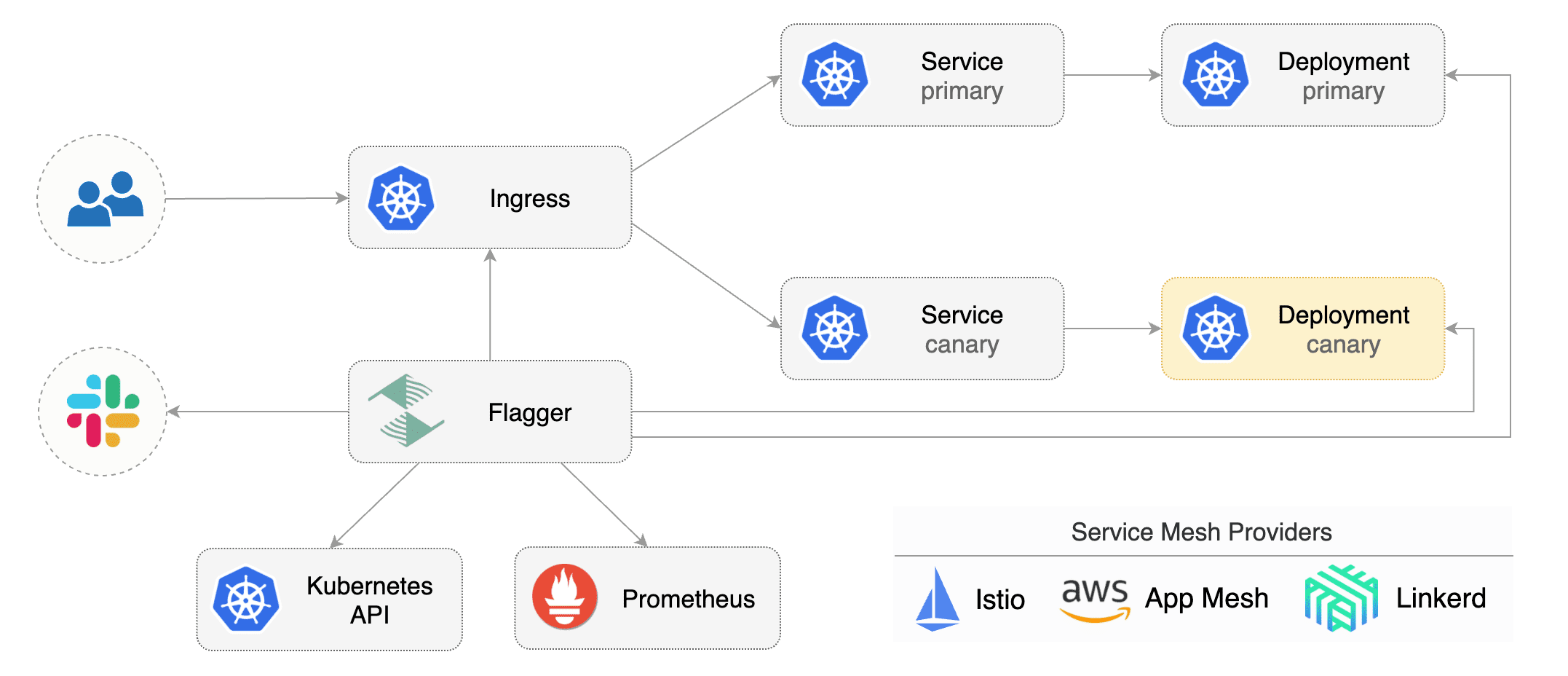

Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis. The canary analysis can be extended with webhooks for running acceptance tests, load tests or any other custom validation.

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators (KPIs) such as HTTP request success rate, average request duration and pod health. Based on the analysis of these KPIs, a canary is promoted or aborted, and the analysis result is published to Slack.
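The control loop described above can be pictured with a small simulation. This is a minimal sketch, not Flagger's implementation: the function name `run_canary`, its parameters, and the rule that a failed check holds the traffic weight in place are all assumptions made for illustration.

```python
from typing import Iterable, List, Tuple


def run_canary(
    checks: Iterable[bool],
    step_weight: int = 5,
    max_weight: int = 50,
    max_failed_checks: int = 10,
) -> Tuple[str, List[int]]:
    """Simulate a canary rollout driven by a stream of KPI check results.

    Each item in `checks` is True when all KPIs passed for that iteration.
    Returns the outcome ("promoted", "aborted" or "in-progress") and the
    canary traffic weight recorded after each iteration.
    """
    weight = 0
    failed = 0
    history: List[int] = []
    for passed in checks:
        if passed:
            # Healthy iteration: shift a little more traffic to the canary.
            weight = min(weight + step_weight, max_weight)
        else:
            # Unhealthy iteration: hold the weight and count the failure.
            failed += 1
        if failed >= max_failed_checks:
            # Too many failures: route all traffic back to the primary.
            history.append(0)
            return "aborted", history
        history.append(weight)
        if weight >= max_weight:
            # The canary survived analysis up to the maximum weight.
            return "promoted", history
    return "in-progress", history
```

With the defaults, ten consecutive passing checks walk the weight from 5 to 50 and end in `"promoted"`, while ten failing checks end in `"aborted"` with the weight reset to 0.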

The Flagger documentation is available at docs.flagger.app and covers:

- Install

- How it works

- Usage

- Tutorials

Before installing Flagger, make sure you have Istio set up with Prometheus enabled. If you are new to Istio, you can follow my Istio service mesh walk-through.

Deploy Flagger in the istio-system namespace using Helm:

    # add the Helm repository
    helm repo add flagger https://flagger.app

    # install or upgrade
    helm upgrade -i flagger flagger/flagger \
      --namespace=istio-system \
      --set metricsServer=http://prometheus.istio-system:9090

Flagger is compatible with Kubernetes >1.11.0 and Istio >1.0.0.

Flagger takes a Kubernetes deployment and optionally a horizontal pod autoscaler (HPA), then creates a series of objects (Kubernetes deployments, ClusterIP services and Istio virtual services). These objects expose the application on the mesh and drive the canary analysis and promotion.

Flagger keeps track of the ConfigMaps and Secrets referenced by a Kubernetes Deployment and triggers a canary analysis if any of those objects change. When a workload is promoted in production, both code (container images) and configuration (ConfigMaps and Secrets) are synchronised.
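One way to picture this tracking is a checksum over the pod template together with the contents of every referenced ConfigMap and Secret, where a new checksum means a new revision to analyse. The sketch below assumes that model. The functions `revision_checksum` and `needs_analysis`, the dictionary layout and the image tags are hypothetical and are not taken from Flagger's source.

```python
import hashlib
import json
from typing import Dict


def revision_checksum(pod_template: dict, configs: Dict[str, dict]) -> str:
    """Return a stable checksum over a pod template and its referenced configs.

    `configs` maps an object name (for example "configmap/podinfo") to its data.
    """
    # sort_keys gives a canonical encoding, so key order does not matter.
    payload = json.dumps(
        {"template": pod_template, "configs": configs}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def needs_analysis(
    last_checksum: str, pod_template: dict, configs: Dict[str, dict]
) -> bool:
    """True when code or configuration changed since the last recorded revision."""
    return revision_checksum(pod_template, configs) != last_checksum


# Hypothetical workload: one container image and one ConfigMap.
template = {"image": "podinfo:1.0"}
configs = {"configmap/podinfo": {"color": "blue"}}
baseline = revision_checksum(template, configs)
```

Under this model, editing only the ConfigMap (say, `color` from `blue` to `green`) changes the checksum in the same way a new container image would, so both kinds of change start an analysis.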

For a deployment named podinfo, a canary promotion can be defined using Flagger's custom resource:

    apiVersion: flagger.app/v1alpha3
    kind: Canary
    metadata:
      name: podinfo
      namespace: test
    spec:
      # deployment reference
      targetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: podinfo
      # the maximum time in seconds for the canary deployment
      # to make progress before it is rolled back (default 600s)
      progressDeadlineSeconds: 60
      # HPA reference (optional)
      autoscalerRef:
        apiVersion: autoscaling/v2beta1
        kind: HorizontalPodAutoscaler
        name: podinfo
      service:
        # container port
        port: 9898
        # Istio gateways (optional)
        gateways:
          - public-gateway.istio-system.svc.cluster.local
        # Istio virtual service host names (optional)
        hosts:
          - podinfo.example.com
        # HTTP match conditions (optional)
        match:
          - uri:
              prefix: /
        # HTTP rewrite (optional)
        rewrite:
          uri: /
        # timeout for HTTP requests (optional)
        timeout: 5s
        # retry policy when a HTTP request fails (optional)
        retries:
          attempts: 3
      # promote the canary without analysing it (default false)
      skipAnalysis: false
      # define the canary analysis timing and KPIs
      canaryAnalysis:
        # schedule interval (default 60s)
        interval: 1m
        # max number of failed metric checks before rollback
        threshold: 10
        # max traffic percentage routed to canary
        # percentage (0-100)
        maxWeight: 50
        # canary increment step
        # percentage (0-100)
        stepWeight: 5
        # Istio Prometheus checks
        metrics:
          # builtin Istio checks
          - name: istio_requests_total
            # minimum req success rate (non 5xx responses)
            # percentage (0-100)
            threshold: 99
            interval: 1m
          - name: istio_request_duration_seconds_bucket
            # maximum req duration P99
            # milliseconds
            threshold: 500
            interval: 30s
          # custom check
          - name: "kafka lag"
            threshold: 100
            query: |
              avg_over_time(
                kafka_consumergroup_lag{
                  consumergroup=~"podinfo-consumer-.*",
                  topic="podinfo"
                }[1m]
              )
        # external checks (optional)
        webhooks:
          - name: load-test
            url: http://flagger-loadtester.test/
            timeout: 5s
            metadata:
              cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

For more details on how the canary analysis and promotion work, please read the docs.
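The timing implied by the `canaryAnalysis` values above can be worked out with a little arithmetic. The sketch below assumes at most one weight increase per interval and at most one failed check per interval, which the configuration comments suggest but do not state outright. The helper names are hypothetical, and the comparison directions follow the comments on the two built-in metrics (a minimum success rate and a maximum P99 duration).

```python
import math


def min_promotion_minutes(interval_min: float, max_weight: int, step_weight: int) -> float:
    """Shortest time to reach max_weight if every check passes."""
    steps = math.ceil(max_weight / step_weight)
    return steps * interval_min


def rollback_minutes(interval_min: float, threshold: int) -> float:
    """Time until rollback if every check fails."""
    return threshold * interval_min


def builtin_metrics_pass(
    success_rate_pct: float,
    p99_duration_ms: float,
    min_success_rate_pct: float = 99,
    max_p99_duration_ms: float = 500,
) -> bool:
    """Apply the two built-in thresholds from the example Canary resource."""
    return (
        success_rate_pct >= min_success_rate_pct
        and p99_duration_ms <= max_p99_duration_ms
    )


# Values taken from the example: interval 1m, maxWeight 50, stepWeight 5, threshold 10.
fastest_promotion = min_promotion_minutes(1, 50, 5)
slowest_rollback = rollback_minutes(1, 10)
```

Under these assumptions the example configuration needs ten passing iterations, so roughly ten minutes, before promotion, and ten failing iterations before a rollback.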

Roadmap:

- Add A/B testing capabilities using fixed routing based on HTTP header and cookie match conditions

- Integrate with other service mesh technologies like AWS AppMesh and Linkerd v2

- Add support for comparing the canary metrics to the primary ones and validating based on the deviation between the two

Flagger is Apache 2.0 licensed and accepts contributions via GitHub pull requests.

When submitting bug reports, please include as much detail as possible:

- which Flagger version

- which Flagger CRD version

- which Kubernetes/Istio version

- what configuration (canary, virtual service and workload definitions)

- what happened (Flagger, Istio Pilot and Proxy logs)

If you have any questions about Flagger and progressive delivery:

- Read the Flagger docs.

- Invite yourself to the Weave community Slack and join the #flagger channel.

- Join the Weave User Group and get invited to online talks, hands-on training and meetups in your area.

- File an issue.

Your feedback is always welcome!