-

Notifications

You must be signed in to change notification settings - Fork 82

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Speed Up info_locale #154

Speed Up info_locale #154

Conversation

Carterpersall

commented

Carterpersall

commented

Sep 13, 2022

•

edited

Loading

edited

- Made info_locale speedy

- Takes the registry entries at HKCU:Control Panel\International to get region code and language code, and passes them into two very large hash tables to get the accompanying region and language values.

- Maybe: Find a way to circumvent the very large table

- 3251 ms -> 12.43 ms

- 262x Faster

- Made info_locale speedy

- Takes the registy entries at HKCU:Control Panel\International to get region code and language code, and passes them into two very large hash tables to get the accompanying region and language values.

- TODO: Make it work when user has more than one language

- Maybe: Find a way to circumvent the very large table

- 3251 ms -> 12.43 ms

- 262x Faster

- Should fix any issues with missing languages as this was ripped directly from Microsoft's documentation

|

@rashil2000 the most recent commit should fix the issue with its missing languages. The only issue is that now the language table by itself is over 800 lines long. And while I'm really not a fan of having a massive table like that, I can't think of any other way to accomplish this. The only major issue I have with the massive tables is the size increase to the script (about double) and the annoyance of having to scroll over it when modifying the script. But the size increase doesn't seem to cause any detectable performance loss to the new implementation. |

|

Perhaps we can make the table occupy less lines by having 2 or 3 entries per line |

Yeah, good idea. Would cause a decrease in readability but I don't think anyone going to be reading through the entire table anyway |

- Makes it easier to scroll through

- Instead of getting the value of "Languages" of HKCU:Control Panel\International\User Profile, iterates through the multi-key in case multiple languages are configured

|

Unless you or I think of anything else, I think this pull request is ready for review. |

|

Could you arrange the columns (with appropriate spaces) so that they're readable (even when cluttered)? Right now it looks like a giant blob of text. Also do that for the region table. |

- Added even spacing between the table's values to make much easier to read

@rashil2000 Done |

|

Can you post before and after numbers for time taken, on both PowerShell 5.1 and 7.2.6 on your machine. One more thing I noticed: looks like the diff --git a/winfetch.ps1 b/winfetch.ps1

index 6a37af4..210c76f 100644

--- a/winfetch.ps1

+++ b/winfetch.ps1

@@ -963,18 +963,7 @@ function info_battery {

# ===== LOCALE =====

function info_locale {

- # `Get-WinUserLanguageList` has a regression bug on PowerShell Core

- # https://github.com/PowerShell/PowerShellModuleCoverage/issues/18

- # A slight increase in response time is incurred as a result

-

- $contentstring = $null

- if ($PSVersionTable.PSVersion.Major -gt 5) {

- Import-Module International -UseWindowsPowerShell -WarningAction SilentlyContinue

- $contentstring = "$((Get-WinHomeLocation).HomeLocation) - $((Get-WinUserLanguageList)[0].LocalizedName)"

- Remove-Module International

- } else {

- $contentstring = "$((Get-WinHomeLocation).HomeLocation) - $((Get-WinUserLanguageList)[0].LocalizedName)"

- }

+ $contentstring = "$((Get-WinHomeLocation).HomeLocation) - $((Get-WinUserLanguageList)[0].LocalizedName)"

return @{

title = "Locale" |

|

On my machine: If I remove the International module workaround (which I will do in the master branch shortly), then there's no performance difference between that and the implementation in this PR, for both PS 5.1 and 7.2.6 |

|

Order is Old -> New -> Pull Request Here are the files of the results I got: The files in the ZIP can be imported to https://www.speedscope.app/ which I use to profile scripts using https://github.com/nohwnd/Profiler Speeds of info_locale only:

This shows that this pull request is still significantly faster even after the International Module Workaround was removed |

|

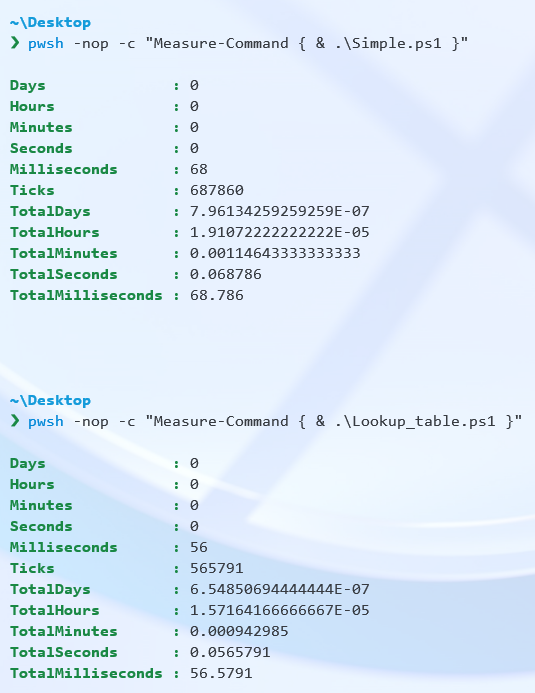

Unfortunately, I'm still not able to reproduce any difference. I isolated the These are the files, you can run the same commands as I did. My machine is a 2018 laptop with i7-8750H (6 core) processor, with power cable plugged in. |

I somewhat agree, but in this case, we use the The reason I did not use PowerShell's built-in

In this PR the gains are pretty small to notice already (after the removal of International module, that is, which was the main bottleneck) - and I'm not sure the added complexity in the script (w.r.t. readability and length) is worth it. |

Even after warmup, the launch speed of PowerShell can vary by 30-40 ms, which is small but can make a difference

By running

For me, according to the profiler I got 10x better speeds(134.0106 ms -> 12.43 ms), and according to hyperfine I got 33% better speeds, which I think is fairly significant. As for the readability impacts, while I do agree the size of the tables are annoying, I don't think readability is impacted too much as most people will read the comments and scroll past the tables. |

|

I ran the files through the Profiler and got these results:

And with Measure-Command: Honestly, I'm confused again |

|

@jcwillox could you also run the files mentioned in #154 (comment) and post your results? |

|

Yeah, I've found that when PowerShell is running at a very fast speed, speed gains seem to become negligible. But when PowerShell runs on a slow processor, some things just start running significantly slower for whatever reason. But the commands that do run slower are consistently slower and can be optimized by replacing those commands with fast alternatives. |

The problem here is that there isn't one command that always runs slower. Because of invariant factors (shell startup, cmdlet caching or whatever), I see that any of them can be slower (at least on my machine). I repeated the experiments by unplugging the power cable and enabling the aggressive power-saver mode (which severely restricts CPU performance), my results are the same - sometimes Simple.ps1 wins, sometimes Lookup_table.ps1 wins. Which leads me to the conclusion that on an average, they're equally performant. |

|

Maybe the speed gains vary from computer to computer due to many factors from how slow the computer is to driver and BIOS overhead. But for me, certain commands are always much slower (specifically anything CIM or WMI) making this implementation almost always faster for me and significantly so. |

|

Alright, let's merge it then |

Co-authored-by: Rashil Gandhi <[email protected]>