forked from ShishirPatil/gorilla


Colored logging configuration + displaying progress in logs (ShishirP…

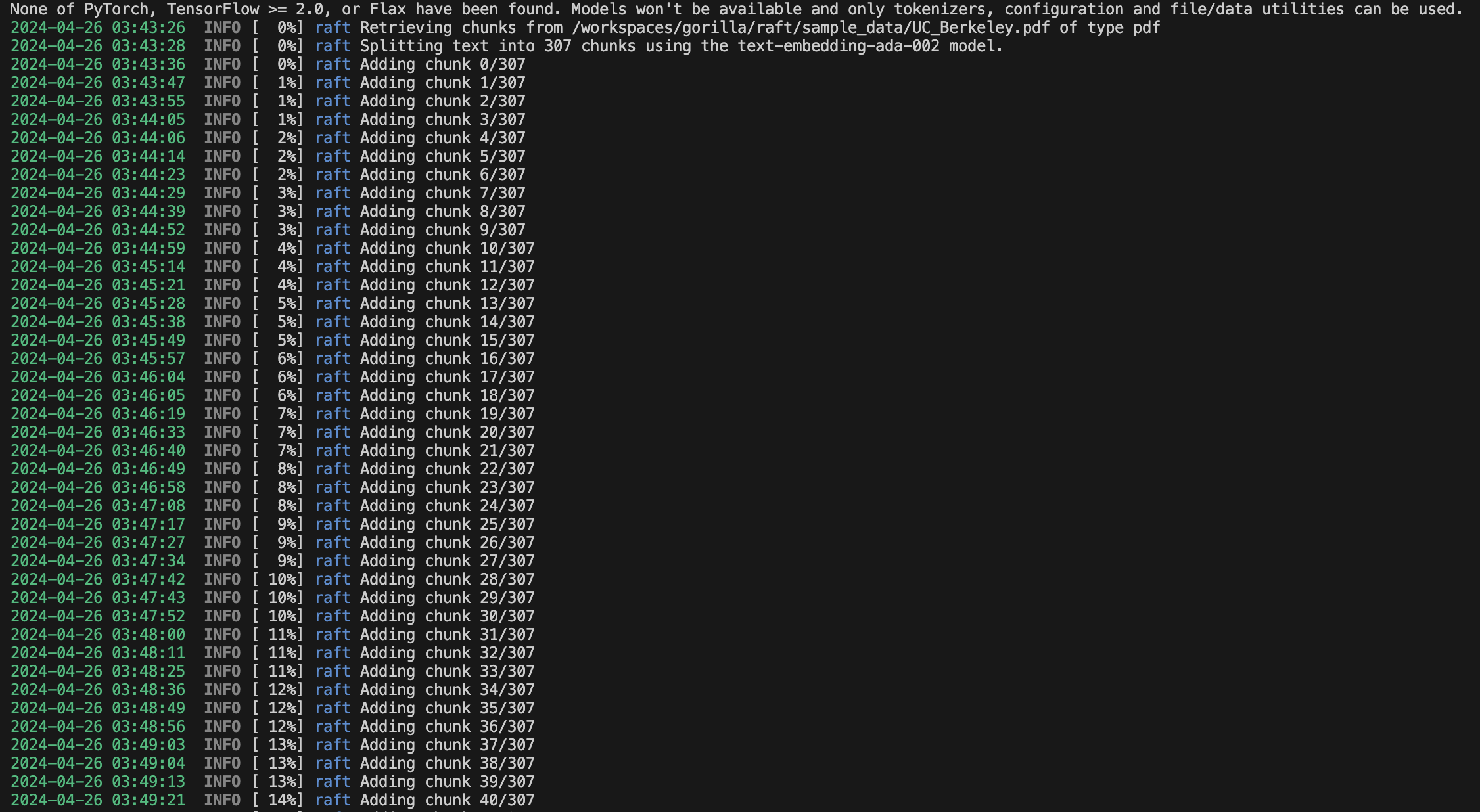

…atil#384)

**Logging config**

- Colored logging using the `coloredlogs` package
- Logging configuration loaded from a YAML config file, `logging.yaml` by default
- Location of the logging configuration YAML file can be overridden with the `LOGGING_CONFIG` env var

**Displaying progress in logs**

- Added the `mdc` dependency
- Progress is attached to the MDC and included in the logging format message
- An empty default progress value is provided to avoid a `KeyError` when the progress field is not set, such as when logging from a different thread

Here is what it looks like when we're hitting quota limits and getting some retries; it displays progress and colored logs: (screenshot not shown)

Here is what it looks like when it runs smoothly: (screenshot not shown)

**Tests**

- Tested for non-regression with the OpenAI API
- Tested with Azure AI Resource Real Time `gpt-3.5-turbo` and `text-embedding-ada-002` deployments

**Note**: this PR depends on ShishirPatil#381
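To illustrate the idea of attaching progress to a per-thread context and why an empty default matters, here is a minimal sketch using only the standard library. It does not use the `mdc` package from this commit; a `threading.local` object stands in for the MDC, and the names `_context`, `ProgressFilter`, and `demo.progress` are hypothetical.

```python
import io
import logging
import threading

# Hypothetical stand-in for the MDC: per-thread storage for the progress label.
_context = threading.local()


class ProgressFilter(logging.Filter):
    """Copy the calling thread's progress label onto each record."""

    def filter(self, record):
        # Fall back to an empty string when this thread never set a label.
        record.progress = getattr(_context, "progress", "")
        return True


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)5s [%(progress)4s] %(message)s"))
handler.addFilter(ProgressFilter())

logger = logging.getLogger("demo.progress")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

# The main thread sets a label, so its records carry it.
_context.progress = "2/5"
logger.info("processing chunk")

# The worker thread never set a label, so it gets the empty default.
worker = threading.Thread(target=logger.info, args=("from worker thread",))
worker.start()
worker.join()

lines = stream.getvalue().splitlines()
print(lines)
```

Without the fallback in `filter`, the worker thread's record would have no `progress` attribute and the format string could not be rendered for it.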


1 parent 6acc440, commit 0d632da

Showing 5 changed files with 107 additions and 13 deletions.


@@ -0,0 +1,35 @@

    import logging
    import logging.config
    import os
    import yaml

    def log_setup():
        """
        Set up logging from a YAML config file. The file location defaults to logging.yaml and can be set with the LOGGING_CONFIG environment variable.
        """

        # Load the config file
        with open(os.getenv('LOGGING_CONFIG', 'logging.yaml'), 'rt') as f:
            config = yaml.safe_load(f.read())

        # Configure the logging module with the config file
        logging.config.dictConfig(config)

        install_default_record_field(logging, 'progress', '')


    def install_default_record_field(logging, field, value):
        """
        Wraps the log record factory to add a default value for the given field
        Required to avoid a KeyError when the field (here, progress) is not set
        Such as when logging from a different thread
        """
        old_factory = logging.getLogRecordFactory()

        def record_factory(*args, **kwargs):
            record = old_factory(*args, **kwargs)
            if not hasattr(record, field):
                setattr(record, field, value)
            return record

        logging.setLogRecordFactory(record_factory)
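The record-factory wrapper can be exercised on its own to see what the default buys. The following is a sketch under a few assumptions: it drops the `logging` parameter, uses `setattr` so that the `field` argument is honored, returns the previous factory so it can be restored, and uses a hypothetical logger name `demo.default_field`.

```python
import logging


def install_default_record_field(field, value):
    """Wrap the current record factory so every record has `field`."""
    old_factory = logging.getLogRecordFactory()

    def record_factory(*args, **kwargs):
        record = old_factory(*args, **kwargs)
        if not hasattr(record, field):
            setattr(record, field, value)
        return record

    logging.setLogRecordFactory(record_factory)
    return old_factory  # returned so the caller can restore it later


formatter = logging.Formatter("%(levelname)5s [%(progress)4s] %(message)s")
previous = install_default_record_field("progress", "")

logger = logging.getLogger("demo.default_field")

# Logger.makeRecord goes through the installed record factory.
plain = logger.makeRecord(logger.name, logging.INFO, "demo.py", 0,
                          "no progress set", (), None)
tagged = logger.makeRecord(logger.name, logging.INFO, "demo.py", 0,
                           "chunk done", (), None)
tagged.progress = "3/10"  # overwrite the default after creation

plain_line = formatter.format(plain)
tagged_line = formatter.format(tagged)
print(plain_line)
print(tagged_line)

# Restore the original factory so the demo leaves no global side effects.
logging.setLogRecordFactory(previous)
```

One consequence of this approach: once the factory sets `progress` on every record, passing `extra={"progress": ...}` to a logging call raises `KeyError`, because the standard library refuses to overwrite an attribute that already exists on the record. The value therefore has to be supplied through some other route, such as the MDC.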


@@ -0,0 +1,34 @@

    version: 1
    disable_existing_loggers: False

    formatters:
      simple:
        format: '%(asctime)s %(levelname)5s [%(progress)4s] %(name)s %(message)s'
      colored:
        format: "%(asctime)s %(levelname)5s [%(progress)4s] %(name)s %(message)s"
        class: coloredlogs.ColoredFormatter

    handlers:
      console:
        class: logging.StreamHandler
        level: INFO
        formatter: colored
        stream: ext://sys.stdout

      file:
        class: logging.FileHandler
        level: DEBUG
        formatter: simple
        filename: raft.log

    root:
      level: INFO
      handlers: [console, file]

    loggers:
      raft:
        level: INFO
      langchain_community.utils.math:
        level: INFO
      httpx:
        level: WARN


Binary file not shown.