-

Notifications

You must be signed in to change notification settings - Fork 147

Conversation

| predicted = trained_model.predict(dicom_paths[0], nodule_locations, model_path) | ||

| assert predicted | ||

| assert 0 <= predicted[0]['p_concerning'] <= 1 | ||

|

|

||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm wondering if this will pass travis or hit the memory error issue... I think we may need to move this hunk over to #307.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

According to the current Travis log and previous one from PR #298 whit commented test, classification model is not responsible for the memory errosrs.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

hmmm, looks like they all failed with a memory error...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

But it worth to move Makes relevant scale factor for simple_3d_model over to #307

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

FWIW, the latest commit also failed as well... so at least we know the error isn't due to these PRs

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@reubano, thank you for the remarks, I've checked locally and turns out that the issue was not only in DATA_SHAPE but also in memory leak when using tensorflow, thus I've added clear_session() as it was suggested and it corrects the situation for me.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

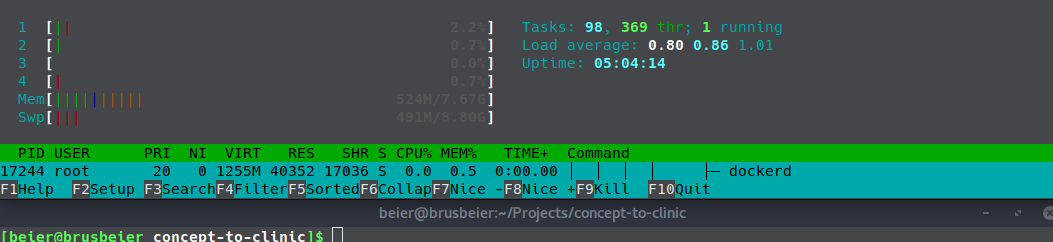

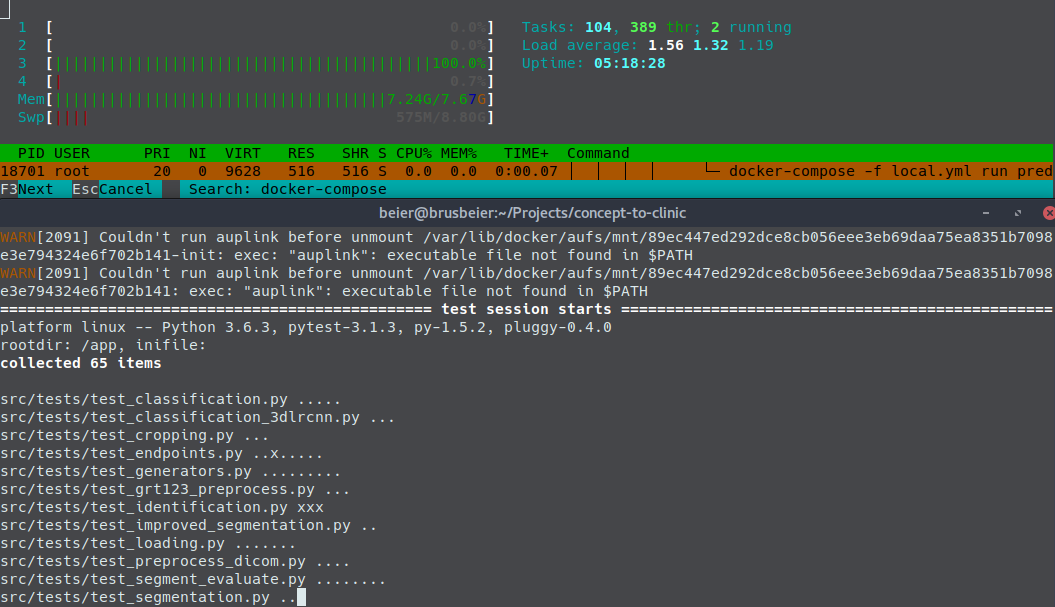

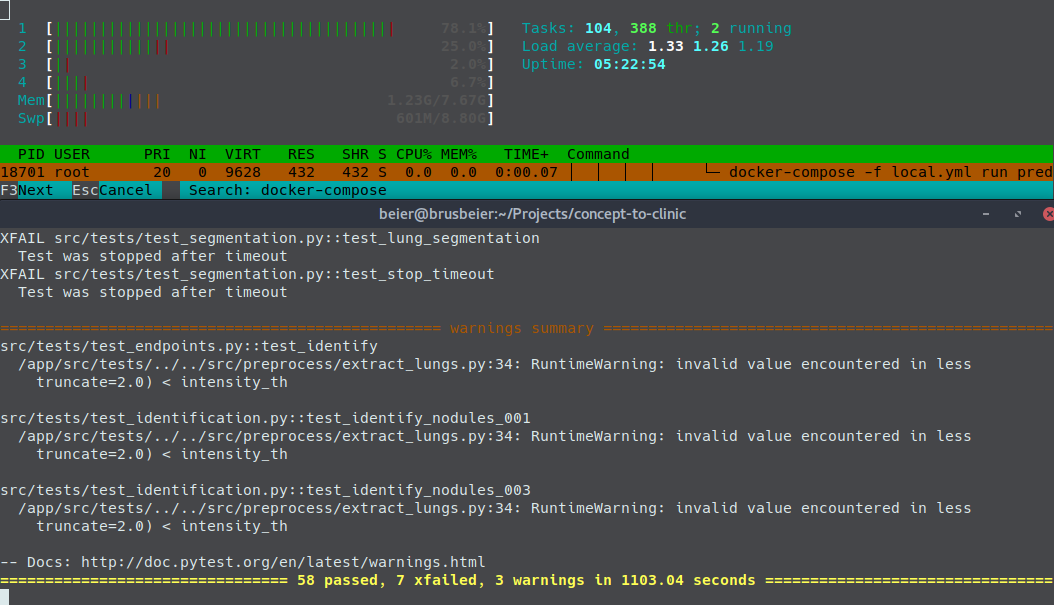

I also include an ability to clear model along with pull_ct and pull_patches which may be useful if feed will be called more than once for the same Model instance. Though, those proprieties can't be responsible for the memory errors. I'll rerun locally with htop to provide the information of RAM load.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

As you can see the main impact in memory error was made from the segmentation itself.

dacd9e5 to

2df7b6c

Compare

|

|

||

| correct_detection = compare_results(detections, annotations) | ||

| print 'number of correct_detection:', correct_detection |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Odd, how did this work before? I mean, Py2 vs Py3 etc.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It didn't :) Even more, I don't understand the purpose of evaluate_detection.py, 'cause in PR #301:

We extend the IoU calculation from 2D to 3D but in order to be simple, we only deal with 3D boxes / cubes. Although the detections we have are 3D spheres output from the grt123 system. The threshold we currently use is 0.5 but can easily be changed (‘correct_detection_threshold’ in evaluate_detection.py). We also did some experiments using different thresholds, please check the evaluation results below.

The thing is that do the same for 3D spheres even easier 'cause you have to deal with centroids and diameters only (which are given) no need to compute bboxes. That evaluation was proposed by the LUNA16 authors and was adjusted in PR #292

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@lamby, Codestyle of evaluate_detection.py commit moved to PR #307 as was requested by @reubano

|

All rebased after #307, tests have been passed. |

Refactoring of

evaluate_detection.pyand uncommented tests intest_classification.py, as was mentioned in this comment. Remove redundantself.scale_factorwhich is neither dependend on DATA_SHAPE nor onself.input_shapeby this and this. Remove redundantself.scale_factorwhich was neither dependend on DATA_SHAPE nor onself.input_shapeby this and this.CLA