-

Notifications

You must be signed in to change notification settings - Fork 17.8k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

runtime: "program exceeds 50-thread limit" in test of os package on darwin-arm-mg912baios #32326

Comments

|

@MichaelTJones also experienced this on his new 18-core Xeon W iMac Pro / 256 GB RAM Machine as per https://groups.google.com/forum/#!topic/golang-dev/NUlf99mA6YM |

|

Likely dup of #31264. |

|

#31264 is fixed but this problem still occurs as reported at https://groups.google.com/forum/?utm_medium=email&utm_source=footer#!msg/golang-nuts/sWmvkEDjkrk/6LOQgFDdAwAJ |

|

Ah, for that problem we likely need to reduce |

|

Change https://golang.org/cl/191277 mentions this issue: |

|

Hello @MichaelTJones, thank you for the patience! We are working on trying to diagnose why it is that by the time that the test executes, that your machine has already created close to 50 threads. To get started, please help me with: package main

import (

"archive/zip"

"context"

"fmt"

"io"

"io/ioutil"

"log"

"os"

"os/exec"

"path/filepath"

"time"

)

func main() {

nPipes := []int{0, 1, 5, 10, 20, 50, 75, 100}

tmpDir, err := ioutil.TempDir("", "th")

if err != nil {

log.Fatalf("Failed to create temporary directory: %v", err)

}

defer os.RemoveAll(tmpDir)

mainGoPath := filepath.Join(tmpDir, "main.go")

if err := ioutil.WriteFile(mainGoPath, []byte(sourceCode), 0644); err != nil {

log.Printf("writing main file %q: %v", mainGoPath, err)

return

}

for _, n := range nPipes {

if err := runIt(tmpDir, mainGoPath, n); err != nil {

log.Printf("Error building for %d: %v\n", n, err)

}

}

fz, err := os.Create("contents.zip")

if err != nil {

log.Printf("Failed to create contents.zip file: %v", err)

return

}

defer fz.Close()

zw := zip.NewWriter(fz)

defer zw.Close()

defer zw.Flush()

err = filepath.Walk(tmpDir, func(path string, fi os.FileInfo, err error) error {

if fi.IsDir() {

return nil

}

if err != nil {

return err

}

zfh, err := zip.FileInfoHeader(fi)

if err != nil {

return err

}

w, err := zw.CreateHeader(zfh)

if err != nil {

return err

}

f, err := os.Open(path)

if err != nil {

return err

}

defer f.Close()

_, err = io.Copy(w, f)

return err

})

if err != nil {

log.Fatalf("filepath.Walk error: %v", err)

}

}

func runIt(baseDir, mainGoPath string, n int) error {

// Now run it and save it to the file.

ctx, cancel := context.WithTimeout(context.Background(), 10*time.Second)

defer cancel()

cmd := exec.CommandContext(ctx, "go", "run", mainGoPath, "-dir", baseDir, "-n", fmt.Sprintf("%d", n))

output, err := cmd.CombinedOutput()

if err != nil {

err = fmt.Errorf("exec error: %v\nOutput: %s\n", err, output)

}

return err

}

const sourceCode = `

package main

import (

"flag"

"fmt"

"log"

"os"

"path/filepath"

"runtime/trace"

)

func osPipesIO(n int) {

r := make([]*os.File, n)

w := make([]*os.File, n)

for i := 0; i < n; i++ {

rp, wp, err := os.Pipe()

if err != nil {

for j := 0; j < i; j++ {

r[j].Close()

w[j].Close()

}

log.Fatal(err)

}

r[i] = rp

w[i] = wp

}

creading := make(chan bool, n)

cdone := make(chan bool, n)

for i := 0; i < n; i++ {

go func(i int) {

var b [1]byte

creading <- true

if _, err := r[i].Read(b[:]); err != nil {

log.Printf("r[%d].Read: %v", i, err)

}

if err := r[i].Close(); err != nil {

log.Printf("r[%d].Close: %v", i, err)

}

cdone <- true

}(i)

}

for i := 0; i < n; i++ {

<-creading

}

for i := 0; i < n; i++ {

if _, err := w[i].Write([]byte{0}); err != nil {

log.Printf("w[%d].Read: %v", i, err)

}

if err := w[i].Close(); err != nil {

log.Printf("w[%d].Close: %v", i, err)

}

<-cdone

}

}

func main() {

baseDir := flag.String("dir", "", "the base directory to place execution traces")

n := flag.Int("n", 0, "the number of *os.Pipe to create")

flag.Parse()

f, err := os.Create(filepath.Join(*baseDir, fmt.Sprintf("trace-%d.txt", *n)))

if err != nil {

log.Fatalf("Failed to create trace file: %v", err)

}

defer f.Close()

trace.Start(f)

defer trace.Stop()

osPipesIO(*n)

}`c) Please share the created zip file and in there you'll see the various execution traces with n reads from [0, 1, 5, 10, 20, 50, 75, 100] which will perhaps shine a light on what's going on. If you don't feel comfortable sharing c), please feel free to email it to me and if you don't feel comfortable sharing the zip of the execution traces with me, not a problem, just please help me run Thank you! |

|

My apologies for the late reply, I was on a long haul flight back home and I finally got time to investigate and see what's up. My apologies for any typos or non-coherent speech but here we go. TL;DR something is up with the internal/poller but due to the large heuristic value of maxThreads being 50 yet on machines with say GOMAXPROCS=16, the max number of threads if the poller created a new thread per read is 36, we never caught this bug. By examining the data from a lot of traces and observing the execution behavior, I deduced a formula to calculate how many max threads are needed for this run P := runtime.GOMAXPROCS(0)

n := threads

maxThreads := 0

if n/P-1 >= P {

maxThreads = 2 + 4*P

} else {

maxThreads = 1 + (7 * P / 4) + (n / P)

}and for @MichaelTJones' test failing, with GOMAXPROCS=32 it is 61, with GOMAXPROCS=36 it is 67, hence why we never caught this bug until he ran it on his machine with Go1.13rc*. Long wind@MichaelTJones responded at https://groups.google.com/d/msg/golang-nuts/sWmvkEDjkrk/vHAPjISIBQAJ with

and then the zip of his trace profiles when running the code above as per and also the trace.txt file after adding this patch to his os_test.go file diff --git a/src/os/os_test.go b/src/os/os_test.go

index c101281258..bc02b07b74 100644

--- a/src/os/os_test.go

+++ b/src/os/os_test.go

@@ -6,6 +6,7 @@ package os_test

import (

"bytes"

+ "context"

"errors"

"flag"

"fmt"

@@ -18,6 +19,7 @@ import (

"reflect"

"runtime"

"runtime/debug"

+ "runtime/trace"

"sort"

"strings"

"sync"

@@ -2253,62 +2255,77 @@ func TestPipeThreads(t *testing.T) {

t.Skip("skipping on js; no support for os.Pipe")

}

- threads := 100

-

- // OpenBSD has a low default for max number of files.

- if runtime.GOOS == "openbsd" {

- threads = 50

+ f, err := Create("trace.txt")

+ if err != nil {

+ t.Fatalf("Failed to create trace file: %v", err)

}

+ defer f.Close()

- r := make([]*File, threads)

- w := make([]*File, threads)

- for i := 0; i < threads; i++ {

- rp, wp, err := Pipe()

- if err != nil {

- for j := 0; j < i; j++ {

- r[j].Close()

- w[j].Close()

+ trace.Start(f)

+ defer trace.Stop()

+ ctx := context.Background()

+ trace.WithRegion(ctx, "thetest", func() {

+ threads := 100

+

+ // OpenBSD has a low default for max number of files.

+ if runtime.GOOS == "openbsd" {

+ threads = 50

+ }

+

+ r := make([]*File, threads)

+ w := make([]*File, threads)

+ for i := 0; i < threads; i++ {

+ rp, wp, err := Pipe()

+ if err != nil {

+ for j := 0; j < i; j++ {

+ r[j].Close()

+ w[j].Close()

+ }

+ t.Fatal(err)

}

- t.Fatal(err)

+ r[i] = rp

+ w[i] = wp

}

- r[i] = rp

- w[i] = wp

- }

- defer debug.SetMaxThreads(debug.SetMaxThreads(threads / 2))

+ // Disabling this line in order to examine

+ // the number of threads altogether.

+ if false {

+ defer debug.SetMaxThreads(debug.SetMaxThreads(threads / 2))

+ }

- creading := make(chan bool, threads)

- cdone := make(chan bool, threads)

- for i := 0; i < threads; i++ {

- go func(i int) {

- var b [1]byte

- creading <- true

- if _, err := r[i].Read(b[:]); err != nil {

+ creading := make(chan bool, threads)

+ cdone := make(chan bool, threads)

+ for i := 0; i < threads; i++ {

+ go func(i int) {

+ var b [1]byte

+ creading <- true

+ if _, err := r[i].Read(b[:]); err != nil {

+ t.Error(err)

+ }

+ if err := r[i].Close(); err != nil {

+ t.Error(err)

+ }

+ cdone <- true

+ }(i)

+ }

+

+ for i := 0; i < threads; i++ {

+ <-creading

+ }

+

+ // If we are still alive, it means that the 100 goroutines did

+ // not require 100 threads.

+

+ for i := 0; i < threads; i++ {

+ if _, err := w[i].Write([]byte{0}); err != nil {

t.Error(err)

}

- if err := r[i].Close(); err != nil {

+ if err := w[i].Close(); err != nil {

t.Error(err)

}

- cdone <- true

- }(i)

- }

-

- for i := 0; i < threads; i++ {

- <-creading

- }

-

- // If we are still alive, it means that the 100 goroutines did

- // not require 100 threads.

-

- for i := 0; i < threads; i++ {

- if _, err := w[i].Write([]byte{0}); err != nil {

- t.Error(err)

+ <-cdone

}

- if err := w[i].Close(); err != nil {

- t.Error(err)

- }

- <-cdone

- }

+ })

}

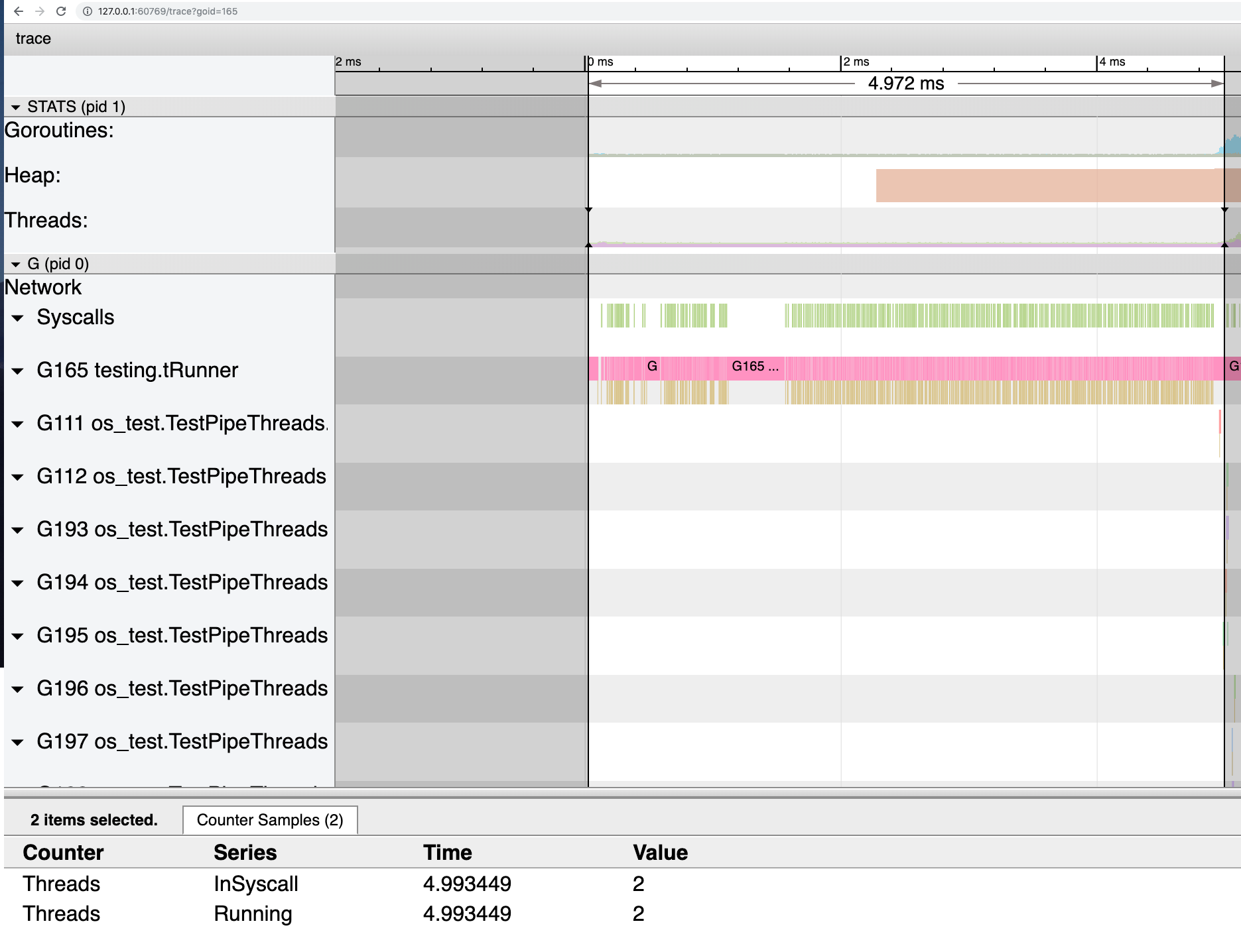

func testDoubleCloseError(t *testing.T, path string) {and initially I had a hypothesis that the number of threads before that test was run was already high when he ran the test via ./all.bash and that those threads were in the idle state before invoking mexit. However that hypothesis was proven wrong when I examined his execution trace from running ./all.bash and if we examine the number of threadsthreads blocked in syscall we see a big number almost equal to ncpu/GOMAXPROCS I then re-read the runtime scheduler code which confirmed some suspicions: due to fairness and load balancing on the number of Ps, each P will be able to run a goroutine so in his case if P=GOMAXPROCS=36, we'll have 36 simultaneous goroutines going for Lines 2283 to 2294 in d0eaec7

and as we know a read makes a syscall  which requires an M. If the most fair scheduler case, each P handling n/P goroutines 1 + 1 + P = 2 + P // The number of Ms used -- (a)and P Ms are making syscalls with the (*os.File).Read when blocked, but somehow the internal/poller doesn't notify us that a read is ready and instead the syscall goes through. Since each P (in the most load balanced case) can take n/P goroutines, now each P has (n/P - 1) goroutines in a runnable state. If the (*os.File).Read blocks for a bit, which it does and the scheduler spinning time/work stealing is faster than the next M is back from syscalls, we now (n-P)/P goroutines to schedule. Since the (*os.File).Read blocks, we also create more Ms as the next (n-P)/P goroutines are ready to run. In total that makes the number of threads: 2 + P + (n-P)/P // -- (b)If we account for random phenomena such as variable read times and spinning threads that come out of a syscall right as the scheduler couldn't find any available, let's tack on a factor say X , so adding X onto (b) 2 + P + (n-P)/P + X and let's say due to that coincidence maybe X threads = 3P/4, being the number of threads that mistime each other. Which gives us finally 2 + P + (n-P)/P + 3P/4 = 1 + (7P/4) + (n/P) -- (c)but if after the first 2 + P + aP // -- where a is a factor, I've found heuristically to be 3and finally the formula becomes: if n/P-1 >= P {

maxThreads = 2 + 4*P

} else {

maxThreads = 1 + (7 * P / 4) + (n / P)

}where: Running grep goroutine on the stackdump from https://groups.google.com/d/msg/golang-nuts/sWmvkEDjkrk/6LOQgFDdAwAJ reveals $ grep 'goroutine .\+ \[' stack | cut -d'[' -f2 | sort | uniq | while read F;do count=$(grep "$F" stack | wc -l);echo "$F $count";done

IO wait]: 56

chan receive]: 1

runnable]: 7

syscall]: 39and from examining his trace-100.txt file with go tool trace trace-100.txtat the peak of the number of threads seen has RecommendationThus instead of hardcoding the maximum number of threads to 50, which I believe was a ballpark from running tests when we added the code in the failing test. If we use that final formula in (c), we'll see that it should pass on Michael's that value is 66 which interestingly he too found #31264 (comment). On say a 4 core machine, that value will be 18, but the formula will produce the values. diff --git a/src/os/os_test.go b/src/os/os_test.go

index c101281258..a1d86179d9 100644

--- a/src/os/os_test.go

+++ b/src/os/os_test.go

@@ -2275,7 +2275,15 @@ func TestPipeThreads(t *testing.T) {

w[i] = wp

}

- defer debug.SetMaxThreads(debug.SetMaxThreads(threads / 2))

+ P := runtime.GOMAXPROCS(0)

+ n := threads

+ maxThreads := 0

+ if n/P-1 >= P {

+ maxThreads = 2 + 4*P

+ } else {

+ maxThreads = 1 + (7 * P / 4) + (n / P)

+ }

+ defer debug.SetMaxThreads(debug.SetMaxThreads(maxThreads))

creading := make(chan bool, threads)

cdone := make(chan bool, threads)Perhaps we should now examine what expectations are between the maximum number of threads created by syscalls from the poller and those by the scheduler. @MichaelTJones could you please confirm that with the original code as was, Go fails to build on Go1.10, Go1.11, Go1.12 too? For Go1.13 we could either disable that test or apply the formula I provided deduced from my finds, and then move this issue to Go1.14 to investigate the poller's behavior and expectations. Kindly pinging @ianlancetaylor @aclements @dvyukov. Thank you. |

|

will do

…On Thu, Aug 29, 2019 at 10:37 AM Emmanuel T Odeke ***@***.***> wrote:

My apologies for the late reply, I was on a long haul flight back home and

I finally got time to investigate and see what's up. My apologies for any

typos or non-coherent speech but here we go.

*TL;DR* something is up with the internal/poller but due to the large

heuristic value of maxThreads being 50 yet on machines with say

GOMAXPROCS=16, the max number of threads if the poller created a new thread

per read is 36, so we never caught this bug. I created a formula to

calculate how many max threads are needed for this run

P := runtime.GOMAXPROCS(0)

n := threads

maxThreads := 0

if n/P-1 >= P {

maxThreads = 2 + 4*P

} else {

maxThreads = 1 + (7 * P / 4) + (n / P)

}

and for @MichaelTJones <https://github.com/MichaelTJones>' test failing,

with GOMAXPROCS=32 it is 61, with GOMAXPROCS=36 it is 67, hence why we

never caught this bug until he ran this.

@MichaelTJones <https://github.com/MichaelTJones> responded at

https://groups.google.com/d/msg/golang-nuts/sWmvkEDjkrk/vHAPjISIBQAJ with

Happy to help!

plum:testtest mtj$ sysctl hw

hw.ncpu: 36

hw.byteorder: 1234

hw.memsize: 274877906944

hw.activecpu: 36

hw.physicalcpu: 18

hw.physicalcpu_max: 18

hw.logicalcpu: 36

hw.logicalcpu_max: 36

hw.cputype: 7

hw.cpusubtype: 8

hw.cpu64bit_capable: 1

hw.cpufamily: 939270559

hw.cacheconfig: 36 2 2 36 0 0 0 0 0 0

hw.cachesize: 274877906944 32768 1048576 25952256 0 0 0 0 0 0

hw.pagesize: 4096

hw.pagesize32: 4096

hw.busfrequency: 100000000

hw.busfrequency_min: 100000000

hw.busfrequency_max: 100000000

hw.cpufrequency: 2300000000

hw.cpufrequency_min: 2300000000

hw.cpufrequency_max: 2300000000

hw.cachelinesize: 64

hw.l1icachesize: 32768

hw.l1dcachesize: 32768

hw.l2cachesize: 1048576

hw.l3cachesize: 25952256

hw.tbfrequency: 1000000000

hw.packages: 1

hw.optional.floatingpoint: 1

hw.optional.mmx: 1

hw.optional.sse: 1

hw.optional.sse2: 1

hw.optional.sse3: 1

hw.optional.supplementalsse3: 1

hw.optional.sse4_1: 1

hw.optional.sse4_2: 1

hw.optional.x86_64: 1

hw.optional.aes: 1

hw.optional.avx1_0: 1

hw.optional.rdrand: 1

hw.optional.f16c: 1

hw.optional.enfstrg: 1

hw.optional.fma: 1

hw.optional.avx2_0: 1

hw.optional.bmi1: 1

hw.optional.bmi2: 1

hw.optional.rtm: 1

hw.optional.hle: 1

hw.optional.adx: 1

hw.optional.mpx: 0

hw.optional.sgx: 0

hw.optional.avx512f: 1

hw.optional.avx512cd: 1

hw.optional.avx512dq: 1

hw.optional.avx512bw: 1

hw.optional.avx512vl: 1

hw.optional.avx512ifma: 0

hw.optional.avx512vbmi: 0

hw.targettype: Mac

hw.cputhreadtype: 1

and then the zip of his trace profiles when running the code above as per

contents.zip <https://github.com/golang/go/files/3553698/contents.zip>

and also the trace.txt file after adding this patch to his os_test.go file

diff --git a/src/os/os_test.go b/src/os/os_test.go

index c101281258..bc02b07b74 100644--- a/src/os/os_test.go+++ b/src/os/os_test.go@@ -6,6 +6,7 @@ package os_test

import (

"bytes"+ "context"

"errors"

"flag"

"fmt"@@ -18,6 +19,7 @@ import (

"reflect"

"runtime"

"runtime/debug"+ "runtime/trace"

"sort"

"strings"

"sync"@@ -2253,62 +2255,77 @@ func TestPipeThreads(t *testing.T) {

t.Skip("skipping on js; no support for os.Pipe")

}

- threads := 100-- // OpenBSD has a low default for max number of files.- if runtime.GOOS == "openbsd" {- threads = 50+ f, err := Create("trace.txt")+ if err != nil {+ t.Fatalf("Failed to create trace file: %v", err)

}+ defer f.Close()

- r := make([]*File, threads)- w := make([]*File, threads)- for i := 0; i < threads; i++ {- rp, wp, err := Pipe()- if err != nil {- for j := 0; j < i; j++ {- r[j].Close()- w[j].Close()+ trace.Start(f)+ defer trace.Stop()+ ctx := context.Background()+ trace.WithRegion(ctx, "thetest", func() {+ threads := 100++ // OpenBSD has a low default for max number of files.+ if runtime.GOOS == "openbsd" {+ threads = 50+ }++ r := make([]*File, threads)+ w := make([]*File, threads)+ for i := 0; i < threads; i++ {+ rp, wp, err := Pipe()+ if err != nil {+ for j := 0; j < i; j++ {+ r[j].Close()+ w[j].Close()+ }+ t.Fatal(err)

}- t.Fatal(err)+ r[i] = rp+ w[i] = wp

}- r[i] = rp- w[i] = wp- }

- defer debug.SetMaxThreads(debug.SetMaxThreads(threads / 2))+ // Disabling this line in order to examine+ // the number of threads altogether.+ if false {+ defer debug.SetMaxThreads(debug.SetMaxThreads(threads / 2))+ }

- creading := make(chan bool, threads)- cdone := make(chan bool, threads)- for i := 0; i < threads; i++ {- go func(i int) {- var b [1]byte- creading <- true- if _, err := r[i].Read(b[:]); err != nil {+ creading := make(chan bool, threads)+ cdone := make(chan bool, threads)+ for i := 0; i < threads; i++ {+ go func(i int) {+ var b [1]byte+ creading <- true+ if _, err := r[i].Read(b[:]); err != nil {+ t.Error(err)+ }+ if err := r[i].Close(); err != nil {+ t.Error(err)+ }+ cdone <- true+ }(i)+ }++ for i := 0; i < threads; i++ {+ <-creading+ }++ // If we are still alive, it means that the 100 goroutines did+ // not require 100 threads.++ for i := 0; i < threads; i++ {+ if _, err := w[i].Write([]byte{0}); err != nil {

t.Error(err)

}- if err := r[i].Close(); err != nil {+ if err := w[i].Close(); err != nil {

t.Error(err)

}- cdone <- true- }(i)- }-- for i := 0; i < threads; i++ {- <-creading- }-- // If we are still alive, it means that the 100 goroutines did- // not require 100 threads.-- for i := 0; i < threads; i++ {- if _, err := w[i].Write([]byte{0}); err != nil {- t.Error(err)+ <-cdone

}- if err := w[i].Close(); err != nil {- t.Error(err)- }- <-cdone- }+ })

}

func testDoubleCloseError(t *testing.T, path string) {

and initially I had a hypothesis that the number of threads before that

test was run was already high when he ran the test via ./all.bash and that

those threads were in the idle state before invoking mexit. However that

hypothesis was proven wrong when I examined his execution trace from

running ./all.bash

[image: Screen Shot 2019-08-28 at 9 29 51 PM]

<https://user-images.githubusercontent.com/4898263/63910314-07f49100-c9db-11e9-9d80-de2e11f657e5.png>

from_all.bash-trace.txt

<https://github.com/golang/go/files/3556707/from_all.bash-trace.txt>

and if we examine the number of threadsthreads blocked in syscall we see a

big number almost equal to ncpu/GOMAXPROCS

[image: Screen Shot 2019-08-28 at 9 37 35 PM]

<https://user-images.githubusercontent.com/4898263/63910556-12fbf100-c9dc-11e9-893f-68ed035168ca.png>

I then re-read the runtime scheduler code which confirmed some suspicions:

due to fairness and load balancing on the number of Ps, each P will be able

to run a goroutine so in his case if P=GOMAXPROCS=36, we'll have 36

simultaneous goroutines going for

https://github.com/golang/go/blob/d0eaec79f95c2ad04d58e42c8e7cb123200cdfd5/src/os/os_test.go#L2283-L2294

and as we know a read makes a syscall

[image: Screen Shot 2019-08-28 at 9 57 26 PM]

<https://user-images.githubusercontent.com/4898263/63911376-da114b80-c9de-11e9-8413-387fd361a235.png>

which requires an M.

If the most fair scheduler case, each P handling n/P goroutines

So far as we can see to begin with we've got, this number of Ms:

1 (Initial M) + 1 (since on line 2266 we perform a syscall with os.Pipe()

yet we MUST have one M at least available in case we go into C calls) + P

(simultaneous Ms) executing. i.e.

1 + 1 + P = 2 + P // The number of Ms used -- (a)

and P Ms are making syscalls with the (*os.File).Read when blocked, but

somehow the internal/poller doesn't notify us that a read is ready and

instead the syscall goes through.

Since each P (in the most load balanced case) can take n/P goroutines, now

each P has (n/P - 1) goroutines in a runnable state. If the (*os.File).Read

blocks for a bit, which it does and the scheduler spinning time/work

stealing is faster than the next M is back from syscalls, we now (n-P)/P

goroutines to schedule. Since the (*os.File).Read blocks, we also create

more Ms as the next (n-P)/P goroutines are ready to run. In total that

makes the number of threads:

2 + P + (n-P)/P // -- (b)

If we account for random phenomena such as variable read times and

spinning threads that come out of a syscall right as the scheduler couldn't

find any available, let's tack on a factor say X , so adding X onto (b)

Gives us the formula to calculate the number of max threads that we should

expect given syscalls as

2 + P + (n-P)/P + X

and let's say due to that coincidence maybe X threads = 3P/4, being the

number of threads that mistime each other. Which gives us finally

2 + P + (n-P)/P + 3P/4 = 1 + (7P/4) + (n/P) -- (c)

but if after the first 2 + P threads are created, if (n-P)/P >= P, we can

still schedule more Gs and thereby if the reads continue blocking, there

will be P * <A_FACTOR> goroutines blocking, thus for that case, we can do

2 + P + aP // -- where a is a factor, I've found heuristically to be 3

and finally the formula becomes:

[image: Screen Shot 2019-08-29 at 10 17 12 AM]

<https://user-images.githubusercontent.com/4898263/63961679-2f347800-ca46-11e9-95b2-99685bdd7c92.png>

if n/P-1 >= P {

maxThreads = 2 + 4*P

} else {

maxThreads = 1 + (7 * P / 4) + (n / P)

}

where:

P -- GOMAXPROCS e.g. 32 for Michael's case

n -- Number of threads e.g. 100

Running grep goroutine on the stackdump from

https://groups.google.com/d/msg/golang-nuts/sWmvkEDjkrk/6LOQgFDdAwAJ

reveals

``shell

$ grep 'goroutine .+ [' stack | cut -d'[' -f2 | sort | uniq | while read

F;do count=$(grep "$F" stack | wc -l);echo "$F $count";done

IO wait]: 56

chan receive]: 1

runnable]: 7

syscall]: 39

and from examining his trace-100.txt file with

```shell

go tool trace trace-100.txt

at the peak of the number of threads seen has

[image: Screen Shot 2019-08-28 at 11 17 46 PM]

<https://user-images.githubusercontent.com/4898263/63915149-18f8ce80-c9ea-11e9-92d2-6da57cb3d21a.png>

41 threads currently allocated and plugging into the formula in (b)

P = 36 cores

n = 100 threads

gives: 2 + 36 + (100-36)/P = ~40 threads

and that validates my hypothesis of the formula and also looking at the

various trace-<N_VALUE>.txt files that were produced from Michael's runs,

via the trace viewer produces similar results

Recommendation

Thus instead of hardcoding the maximum number of threads to 50, which I

believe was a ballpark from running tests when we added the code in the

failing test. If we use that final formula in (c), we'll see that it should

pass on Michael's that value is 66 which interestingly he too found #31264

(comment)

<#31264 (comment)>. On

say a 4 core machine, that value will be 18, but the formula will produce

the values.

This diff will get the code to pass

diff --git a/src/os/os_test.go b/src/os/os_test.go

index c101281258..a1d86179d9 100644--- a/src/os/os_test.go+++ b/src/os/os_test.go@@ -2275,7 +2275,15 @@ func TestPipeThreads(t *testing.T) {

w[i] = wp

}

- defer debug.SetMaxThreads(debug.SetMaxThreads(threads / 2))+ P := runtime.GOMAXPROCS(0)+ n := threads+ maxThreads := 0+ if n/P-1 >= P {+ maxThreads = 2 + 4*P+ } else {+ maxThreads = 1 + (7 * P / 4) + (n / P)+ }+ defer debug.SetMaxThreads(debug.SetMaxThreads(maxThreads))

creading := make(chan bool, threads)

cdone := make(chan bool, threads)

Perhaps we should now examine what expectations are between the maximum

number of threads created by syscalls from the poller and those by the

scheduler. @MichaelTJones <https://github.com/MichaelTJones> could you

please confirm that with the original code as was, Go fails to build on

Go1.10, Go1.11, Go1.12 too?

For Go1.13 we could either disable that test or apply the formula I

provided deduced from my finds, and then move this issue to Go1.14 to

investigate the poller's behavior and expectations.

Kindly pinging @ianlancetaylor <https://github.com/ianlancetaylor>

@aclements <https://github.com/aclements> @dvyukov

<https://github.com/dvyukov>.

Thank you.

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

<#32326?email_source=notifications&email_token=AB4DFJI56KDEFIQYZRUG3ETQHACL7A5CNFSM4HRE6N4KYY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGOD5PHW6Y#issuecomment-526285691>,

or mute the thread

<https://github.com/notifications/unsubscribe-auth/AB4DFJMDXNODCNEYYYEYLC3QHACL7ANCNFSM4HRE6N4A>

.

--

*Michael T. [email protected] <[email protected]>*

|

|

Change https://golang.org/cl/192342 mentions this issue: |

|

Works on 1.13, 1.12, 1.11.

No trace.WithRegion() on 1.10, so no test result there.

Summary: fix works on my machine.

…On Thu, Aug 29, 2019 at 11:48 AM GopherBot ***@***.***> wrote:

Change https://golang.org/cl/192342 mentions this issue: os: skip

TestPipeThreads on GOOS=darwin

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

<#32326?email_source=notifications&email_token=AB4DFJJSIT7BTWP5HXSRD2TQHAKWNA5CNFSM4HRE6N4KYY3PNVWWK3TUL52HS4DFVREXG43VMVBW63LNMVXHJKTDN5WW2ZLOORPWSZGOD5POK4A#issuecomment-526312816>,

or mute the thread

<https://github.com/notifications/unsubscribe-auth/AB4DFJPJMUEY53NN3PZKZ6TQHAKWNANCNFSM4HRE6N4A>

.

--

*Michael T. [email protected] <[email protected]>*

|

Updates #32326. Updates #33953. Change-Id: I97a1cbe682becfe9592e19294d4d94f5e5b16c21 Reviewed-on: https://go-review.googlesource.com/c/go/+/192342 Run-TryBot: Emmanuel Odeke <[email protected]> TryBot-Result: Gobot Gobot <[email protected]> Reviewed-by: Ian Lance Taylor <[email protected]>

|

Change https://golang.org/cl/192757 mentions this issue: |

Updates golang#32326. Updates golang#33953. Change-Id: I97a1cbe682becfe9592e19294d4d94f5e5b16c21 Reviewed-on: https://go-review.googlesource.com/c/go/+/192342 Run-TryBot: Emmanuel Odeke <[email protected]> TryBot-Result: Gobot Gobot <[email protected]> Reviewed-by: Ian Lance Taylor <[email protected]>

|

I found the commit that introduced this problem, commit a3b0144#diff-38e21adab3460688e3ce0b71fa9422d0 aka CL https://go-review.googlesource.com/c/141639 which is a part of Go1.12. This program should be able to run alright with GOMAXPROCS=8 and a max of 8 threads before that commit package main

import (

"log"

"os"

"runtime/debug"

"sync"

"syscall"

"time"

)

func main() {

n := 100

var rl, wl []*os.File

for i := 0; i < n; i++ {

prc, pwc, err := os.Pipe()

if err != nil {

// Cleanup.

for j := 0; j < i; j++ {

wl[i].Close()

rl[i].Close()

}

}

rl = append(rl, prc)

wl = append(rl, pwc)

}

defer debug.SetMaxThreads(debug.SetMaxThreads(8))

var wg sync.WaitGroup

for i := 0; i < n; i++ {

wg.Add(1)

go func(i int) {

bs := make([]byte, 4)

wg.Done()

prc := rl[i]

if _, err := prc.Read(bs); err != nil {

log.Fatalf("Failed to read %d: %v\n", i, err)

}

}(i)

}

wg.Wait()

println("Waiting now")

for {

<-time.After(5 * time.Second)

if true {

return

}

proc, _ := os.FindProcess(os.Getpid())

proc.Signal(syscall.SIGQUIT)

}

for i := 0; i < n; i++ {

if _, err := wl[i].Write([]byte("Hello")); err != nil {

log.Fatalf("Write #%d failed: %v", err)

}

}

}Kindly pinging @randall77. |

Updates #32326. Updates #33953. Change-Id: I97a1cbe682becfe9592e19294d4d94f5e5b16c21 Reviewed-on: https://go-review.googlesource.com/c/go/+/192342 Run-TryBot: Emmanuel Odeke <[email protected]> TryBot-Result: Gobot Gobot <[email protected]> Reviewed-by: Ian Lance Taylor <[email protected]> (cherry picked from commit bac5b3f) Reviewed-on: https://go-review.googlesource.com/c/go/+/192757 Run-TryBot: Andrew Bonventre <[email protected]> Reviewed-by: Dmitri Shuralyov <[email protected]>

Updates golang#32326. Updates golang#33953. Change-Id: I97a1cbe682becfe9592e19294d4d94f5e5b16c21 Reviewed-on: https://go-review.googlesource.com/c/go/+/192342 Run-TryBot: Emmanuel Odeke <[email protected]> TryBot-Result: Gobot Gobot <[email protected]> Reviewed-by: Ian Lance Taylor <[email protected]>

|

Some great news, @minux's CL https://go-review.googlesource.com/c/go/+/197938 fixed this issue in that change from entersyscallblock() to entersyscall() so we'll need to backport Minux's CL to both Go1.12 and Go1.13 as per https://groups.google.com/d/msg/golang-dev/UYDTDWHJsC8/a1_4KiKnCwAJ. @gopherbot please backport this issue to Go1.12 and Go1.13. |

|

Backport issue(s) opened: #34711 (for 1.12), #34712 (for 1.13). Remember to create the cherry-pick CL(s) as soon as the patch is submitted to master, according to https://golang.org/wiki/MinorReleases. |

|

Change https://golang.org/cl/199477 mentions this issue: |

https://build.golang.org/log/0c288516fcfe7498321f7698052e39b089d14f09

CC @mknyszek @aclements @ianlancetaylor

The text was updated successfully, but these errors were encountered: